How to Create Custom ML Models with Google MediaPipe Model Maker

2024-12-09 | By Maker.io Staff

Google’s MediaPipe SDK is a powerful, streamlined machine-learning library that supports various standard tasks such as landmark tracking, image classification, and object detection. Its pre-trained models can significantly accelerate development and debugging. However, it may be necessary to tailor the standard models to work in new settings or to derive more specific models. This article explores the fundamentals of transfer learning and demonstrates how to use Google’s MediaPipe Model Maker to customize the standard object detector model for new datasets.

Understanding Transfer Learning

Before delving into transfer learning, newcomers should familiarize themselves with machine-learning basics and standard terminology to ensure they can follow the discussion effortlessly.

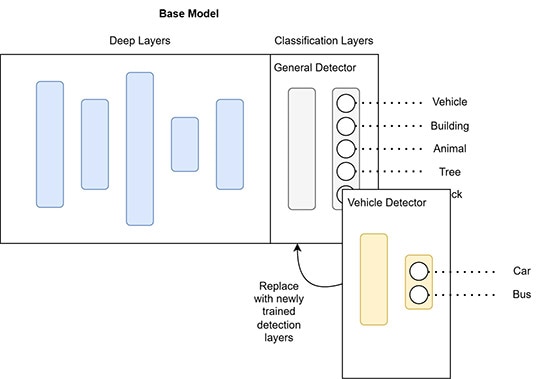

Transfer learning is a technique that does not build a new model from scratch. Instead, it replaces some of the topmost layers of an existing model while keeping the deeper layers unaltered, thus transferring the existing knowledge encoded in a model’s deeper layers to a more focused, streamlined, and smaller model.

Transfer learning retains the base model’s knowledge in the deeper layers but replaces the top classification layers to build a more focused derived version of the original.
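The idea can be illustrated with a toy sketch in plain Python. Everything in it is hypothetical: frozen_base stands in for the pre-trained deeper layers and is never updated, while train_head fits a tiny perceptron on top of the features it produces. It is not how MediaPipe Model Maker works internally; it only shows which part of the model changes during re-training.

```python
# Toy illustration of transfer learning: a frozen "base" plus a trainable head.
# All names here are hypothetical and unrelated to the MediaPipe API.

def frozen_base(x):
    """Stand-in for pre-trained deep layers: maps raw input to features.

    This function is never modified during training.
    """
    a, b = x
    return (a + b, a - b)


def train_head(samples, epochs=20, lr=0.1):
    """Train only the head (a perceptron) on top of the frozen features."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for x, label in samples:
            features = frozen_base(x)
            score = sum(w * f for w, f in zip(weights, features)) + bias
            prediction = 1 if score > 0 else 0
            error = label - prediction  # 0 when the prediction is correct
            weights = [w + lr * error * f for w, f in zip(weights, features)]
            bias += lr * error
    return weights, bias


def predict(x, weights, bias):
    """Classify an input using the frozen base and the trained head."""
    features = frozen_base(x)
    score = sum(w * f for w, f in zip(weights, features)) + bias
    return 1 if score > 0 else 0


# New task: label 1 if the first value is larger than the second.
samples = [((2.0, 1.0), 1), ((3.0, 0.5), 1), ((1.0, 2.0), 0), ((0.5, 3.0), 0)]
weights, bias = train_head(samples)
```

Only weights and bias change during training, which is why the approach needs far less computation than fitting every layer from scratch.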

Transfer learning offers multiple benefits. The most apparent is a significant reduction in the training time and computational effort required to build a model, since only the upper layers need adjusting. Developers can also retrain an existing model with less data, often only a few hundred examples per class.

However, transfer learning also comes with some challenges. The main drawback is that the re-trained model can only become more specialized, not more general. Suppose the base model can detect everyday items, such as buildings, trees, people, and vehicles. A refined model could distinguish specific types of vehicles, such as buses, cars, and bicycles, but it would lose the ability to detect buildings, trees, and people unless it is re-trained with examples of those classes as well. Therefore, developers must carefully choose the base model, as a suitable starting point is vital for the process's success.

This article discusses re-training Google’s base object detector. However, different models exist for standard tasks, such as hand landmark detection or image classification, and you must adjust the base model accordingly.

Prerequisites for Local Training

The MediaPipe Model Maker requires Python 3.9 to 3.12 and pip 20.3 or above. Readers who need a Python version different from their system-wide installation should follow this guide for managing multiple Python installations. The Model Maker can then be installed using pip:

    pip install --upgrade pip
    pip install mediapipe-model-maker

However, it’s important to note that installing the model maker is challenging and may involve lots of debugging and manual configuration, especially on Windows computers. Therefore, it’s recommended that beginners use a managed environment that already provides the necessary prerequisites, such as a Google Colab Notebook.
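For a local installation, a quick check of the interpreter version can rule out one common cause of failed installs. The following is a minimal sketch that assumes the range stated above is inclusive on both ends; the function name is made up for this example.

```python
import sys

# Supported range as stated in this article (assumed inclusive on both ends).
MIN_VERSION = (3, 9)
MAX_VERSION = (3, 12)


def python_version_supported(version=None):
    """Return True if the (major, minor) version lies within the supported range."""
    if version is None:
        version = sys.version_info
    major_minor = (version[0], version[1])
    return MIN_VERSION <= major_minor <= MAX_VERSION


if __name__ == "__main__":
    status = "supported" if python_version_supported() else "outside the supported range"
    print(f"Python {sys.version_info.major}.{sys.version_info.minor} is {status}")
```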

Preparing the Training Data

Unfortunately, the exact steps for preparing the training data differ based on the model that serves as the transfer learning base. This article uses the COCO standard to prepare data for re-training Google’s MobileNet V2 object detector. However, the official MediaPipe documentation contains instructions on preparing the training examples for the other models.

The object detector base model requires developers to supply the training examples using the COCO or PASCAL VOC format. These formats contain the training images in a pre-defined folder structure and additional metadata in a JSON or XML file. The extra metadata describes the object classes (labels) and what each of the training images represents.
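The two formats also encode bounding boxes differently. COCO stores a box as [x, y, width, height] measured from the top-left corner, while PASCAL VOC stores the corner coordinates xmin, ymin, xmax, and ymax. When annotations come from a tool that exports one style, a small helper can convert them to the other. The function names below are hypothetical and not part of any library.

```python
def voc_to_coco_bbox(xmin, ymin, xmax, ymax):
    """Convert corner coordinates to COCO's [x, y, width, height]."""
    if xmax <= xmin or ymax <= ymin:
        raise ValueError("box must have positive width and height")
    return [xmin, ymin, xmax - xmin, ymax - ymin]


def coco_to_voc_bbox(x, y, width, height):
    """Convert COCO's [x, y, width, height] to [xmin, ymin, xmax, ymax]."""
    return [x, y, x + width, y + height]


coco_box = voc_to_coco_bbox(10, 20, 110, 70)
voc_box = coco_to_voc_bbox(*coco_box)
```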

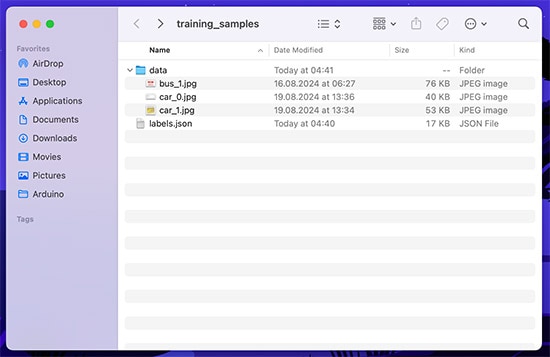

The COCO format dictates that the training images must be placed in a base folder called data, and the JSON file (called labels.json) needs to be located in the same parent directory as the data folder:

Arrange the examples and supplementary JSON file as shown in this example screenshot.

The supplementary labels.json file lists all labels to train (e.g., car, bicycle, bus) and assigns each image and label a unique ID. It then links each image to a label through an annotation, thus describing what the image represents. Each annotation also includes a bounding box, given as [x, y, width, height] in pixels, around the area that contains the example within the image. Note that the Model Maker reserves category ID 0 for the background class, so custom labels should start at ID 1. For example:

    {
      "categories":[
        {"id":1, "name":"car"},
        {"id":2, "name":"bus"},
        ...
      ],
      "images":[
        {"id":0, "file_name":"car_0.jpg"},
        {"id":1, "file_name":"car_1.jpg"},
        ...
        {"id":100, "file_name":"bus_1.jpg"},
        {"id":101, "file_name":"bus_2.jpg"},
        ...
      ],
      "annotations":[
        {"id":0, "image_id":0, "category_id":1, "bbox":[0, 0, 192, 65]},
        ...
        {"id":100, "image_id":100, "category_id":2, "bbox":[0, 0, 256, 192]}
      ]
    }
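Writing this file by hand quickly becomes tedious for larger datasets. The sketch below shows one way to generate it from a list of examples. The build_labels helper and its input layout are assumptions made for this illustration, and category IDs start at 1 on the assumption that the loader keeps ID 0 free for a background class.

```python
import json


def build_labels(class_names, examples):
    """Build a COCO-style labels dict.

    examples: iterable of (file_name, class_name, [x, y, width, height]).
    """
    # Start category IDs at 1 (assumption: 0 is left for a background class).
    category_ids = {name: index for index, name in enumerate(class_names, start=1)}
    labels = {
        "categories": [{"id": cid, "name": name} for name, cid in category_ids.items()],
        "images": [],
        "annotations": [],
    }
    image_ids = {}
    for file_name, class_name, bbox in examples:
        # Register each image once, even if it carries several annotations.
        if file_name not in image_ids:
            image_ids[file_name] = len(image_ids)
            labels["images"].append({"id": image_ids[file_name], "file_name": file_name})
        labels["annotations"].append({
            "id": len(labels["annotations"]),
            "image_id": image_ids[file_name],
            "category_id": category_ids[class_name],
            "bbox": list(bbox),
        })
    return labels


labels = build_labels(
    ["car", "bus"],
    [
        ("car_0.jpg", "car", [0, 0, 192, 65]),
        ("bus_1.jpg", "bus", [0, 0, 256, 192]),
        ("car_0.jpg", "bus", [5, 5, 10, 10]),
    ],
)
labels_json = json.dumps(labels, indent=2)
```

The resulting string can be written to labels.json next to the image folder.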

Note that it’s possible to have separate COCO folders for the train, test, and validation data, each with distinct labels.json files.
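Because a single wrong ID can derail a training run, it can help to sanity-check each labels.json file before use. The following is a minimal sketch of such a check. The find_label_problems function is hypothetical and only covers the fields shown in this article.

```python
def find_label_problems(labels):
    """Return a list of human-readable problems found in a COCO-style labels dict."""
    problems = []
    category_ids = {c["id"] for c in labels.get("categories", [])}
    image_ids = {i["id"] for i in labels.get("images", [])}
    seen_annotation_ids = set()
    for ann in labels.get("annotations", []):
        ann_id = ann.get("id")
        if ann_id in seen_annotation_ids:
            problems.append(f"annotation {ann_id}: duplicate id")
        seen_annotation_ids.add(ann_id)
        if ann.get("image_id") not in image_ids:
            problems.append(f"annotation {ann_id}: unknown image_id")
        if ann.get("category_id") not in category_ids:
            problems.append(f"annotation {ann_id}: unknown category_id")
        bbox = ann.get("bbox")
        is_valid_bbox = (
            isinstance(bbox, list)
            and len(bbox) == 4
            and all(isinstance(v, (int, float)) for v in bbox)
        )
        if not is_valid_bbox:
            problems.append(f"annotation {ann_id}: bbox must be a list of four numbers")
    return problems


good = {
    "categories": [{"id": 1, "name": "car"}, {"id": 2, "name": "bus"}],
    "images": [{"id": 0, "file_name": "car_0.jpg"}],
    "annotations": [{"id": 0, "image_id": 0, "category_id": 1, "bbox": [0, 0, 192, 65]}],
}
bad = {
    "categories": [{"id": 1, "name": "car"}],
    "images": [{"id": 0, "file_name": "car_0.jpg"}],
    "annotations": [{"id": 0, "image_id": 0, "category_id": 99, "bbox": [0, 0, 192]}],
}
good_problems = find_label_problems(good)
bad_problems = find_label_problems(bad)
```

In practice, the dictionary would come from json.load on the labels.json file of each subset.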

Perform Transfer Learning with MediaPipe Model Maker

Once everything is set up and the data is in the required format, you can retrain an existing model to create a focused or slimmed-down version in only a few lines of code with the MediaPipe Model Maker:

    from mediapipe_model_maker import object_detector
    from mediapipe_model_maker import quantization

    # Load the COCO-formatted dataset and split it into 80% training,
    # 10% test, and 10% validation data
    dataset_path = "sample_data/"
    data = object_detector.Dataset.from_coco_folder(dataset_path)
    train_data, remaining_data = data.split(0.8)
    test_data, validation_data = remaining_data.split(0.5)

    # Select the base model and set the training hyperparameters
    spec = object_detector.SupportedModels.MOBILENET_V2
    hparams = object_detector.HParams(export_dir='exported_model', epochs=5)
    options = object_detector.ObjectDetectorOptions(
        supported_model=spec,
        hparams=hparams
    )

    # Re-train the base model on the new data
    model = object_detector.ObjectDetector.create(
        train_data=train_data,
        validation_data=validation_data,
        options=options)

The first few lines import the necessary libraries. Then, the dataset_path contains the relative path to the COCO folder described above. The object_detector loads the dataset from the specified path before splitting it into training, test, and validation sets. Loading separate COCO folders for some or all of these subsets is also possible.
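The two consecutive split calls may look confusing at first. The arithmetic can be sketched in plain Python, under the assumption that each split truncates to a whole number of examples; the library's exact rounding may differ. The split_counts helper is made up for this illustration.

```python
def split_counts(total, first_fraction=0.8, second_fraction=0.5):
    """Mirror the two-step split and return (train, test, validation) counts."""
    train = int(total * first_fraction)        # first split: training share
    remaining = total - train                  # everything not used for training
    test = int(remaining * second_fraction)    # second split: halve the remainder
    validation = remaining - test
    return train, test, validation


train_count, test_count, validation_count = split_counts(200)
```

With 200 examples, the split yields 160 training, 20 test, and 20 validation examples, which corresponds to an 80/10/10 distribution.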

After splitting the data, the script specifies the hyperparameters for transfer learning. In this example, the script states that MobileNet V2 is the base model for re-training, and it further defines where the exported model should be stored and the number of epochs.

This image shows the training process.

The subsequent line performs re-training and stores the result in the model object. This call creates a complete float32-based model. The resulting model may be overly complex for the target platform. Therefore, the model maker supports model quantization, a technique that can reduce a model’s size and improve inference speed with only a minor decrease in accuracy. It’s recommended that GPU-based systems use float32 or float16 and that CPU-only systems and embedded platforms work with a lightweight int8-based model.
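To see why an int8 model is smaller but slightly less accurate, consider a generic affine quantization scheme that maps float values to 8-bit integers using a scale and a zero point. The sketch below is a simplified, stand-alone illustration with hypothetical function names. It does not reproduce the exact procedure the Model Maker applies.

```python
def quantization_params(values, qmin=-128, qmax=127):
    """Derive a scale and zero point mapping the value range onto [qmin, qmax]."""
    lo = min(min(values), 0.0)  # include zero so that 0.0 stays exactly representable
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # fall back to 1.0 for all-zero input
    zero_point = int(round(qmin - lo / scale))
    return scale, zero_point


def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    """Map floats to integers and clamp them to the int8 range."""
    return [max(qmin, min(qmax, int(round(v / scale)) + zero_point)) for v in values]


def dequantize(quantized, scale, zero_point):
    """Recover approximate float values from the integers."""
    return [(q - zero_point) * scale for q in quantized]


weights = [-1.2, -0.3, 0.0, 0.45, 2.0]
scale, zero_point = quantization_params(weights)
quantized = quantize(weights, scale, zero_point)
restored = dequantize(quantized, scale, zero_point)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

Each value now occupies one byte instead of four, and the rounding error per value stays within half a quantization step (scale / 2) for values inside the covered range.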

Building an int8 version requires quantization-aware training (QAT) after creating the primary model:

    # Create an int8 model with quantization-aware training
    hyperparams = object_detector.QATHParams(learning_rate=0.9, batch_size=4, epochs=5, decay_steps=5, decay_rate=0.96)
    # Restore the float32 checkpoint before quantizing
    model.restore_float_ckpt()
    model.quantization_aware_training(train_data, validation_data, qat_hparams=hyperparams)
    model.export_model('model_int8.tflite')

Developers can choose different hyperparameters during this step. Regardless of the values chosen, it’s essential to call the model’s restore_float_ckpt function before QAT to restore the model to float32 precision before proceeding, as quantization of an already reduced model results in errors.

Similarly, developers can derive a float16-based model from the main re-trained version after training concludes by performing post-training quantization on the full model:

    # Or perform float16 quantization after regular model training
    quantization_config = quantization.QuantizationConfig.for_float16()
    model.restore_float_ckpt()
    model.export_model(model_name="model_fp16.tflite", quantization_config=quantization_config)

Converting a float32 model to a float16-based version does not require QAT. However, developers must still include the restore_float_ckpt() call before exporting if the program has already performed another form of quantization on the model.
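The effect of storing values in half precision can be reproduced with Python's standard struct module, which supports the IEEE 754 float16 format through the "e" format code. The sketch below is only an illustration of the number format and is unrelated to the Model Maker API; the helper name is made up.

```python
import struct


def to_float16_and_back(value):
    """Round-trip a Python float through IEEE 754 half precision."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


weights = [0.1, 1.0, 3.14159, 1000.5]
for w in weights:
    # Values that float16 cannot represent exactly come back slightly changed.
    print(f"{w!r:>10} -> {to_float16_and_back(w)!r}")

bytes_fp32 = len(struct.pack("<f", 0.1))  # storage per value in float32
bytes_fp16 = len(struct.pack("<e", 0.1))  # storage per value in float16
```

Each value takes two bytes instead of four, at the cost of a small rounding error for values such as 0.1 that float16 cannot represent exactly.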

The exported TensorFlow Lite (.tflite) models can then be imported into a MediaPipe application, as described in another article.

Summary

Transfer learning is an ML technique that creates focused and streamlined versions of a more general base model by replacing the initial model’s upper detection layers with new ones. The approach reduces the required training examples and leverages the model’s preexisting stored knowledge hidden in its lower layers.

Google’s MediaPipe Model Maker is a tool for modifying models like MobileNet V2 and adapting them for custom applications, such as making region-specific detectors. However, setting up the required development environment can be tricky, so beginners are better served by a pre-built runtime, such as Google Colab.

The data must be prepared for transfer learning with the model maker by providing the examples in a specific format. The exact requirements depend on the base model. This article discusses an example that utilizes the COCO format, where a supplementary JSON file describes each training image.

The transfer learning program itself only comprises a few lines of code. The script primarily loads the data before splitting it into training, validation, and test sets. It then retrains the existing model before performing quantization to reduce its complexity and improve inference speed without significant loss of precision. It’s recommended that GPU-based systems use float32 or float16. Embedded devices benefit from the reduced complexity of int8-based models.
