What Is Data Preparation in ML, and Why Is It Crucial for Success?

2024-04-03 | By Maker.io Staff

This article explores how preprocessing prepares data for machine learning, tackles challenges like missing values and outliers, and helps ensure fair and accurate model results. Further, it explores vital techniques such as scaling, rebalancing, and variable transformation.

How Preprocessing Prepares Data for ML Projects

Data preparation, or preprocessing, is one of the most crucial steps in nearly every machine learning project. It readies raw data for analysis by handling missing values, addressing outliers and inconsistencies, and encoding features into a format the algorithm can process. Besides ensuring data quality, preprocessing also reduces noise and often helps prevent or mitigate issues such as overfitting. Finally, preprocessing matters when optimizing the performance of an algorithm, as it often helps reduce the dimensionality of the data by identifying the most relevant features.

Scaling, Normalization, and Standardization

Some algorithms, such as kNN (k-nearest neighbors) or SVMs (support vector machines), rely on the distance between data samples and are thus sensitive to the magnitude of features. If features are measured on very different scales, those with the largest numeric ranges may dominate the distance calculation and, with it, the learning process, leading to poor model performance. Scaling the features ensures that they contribute equally to the model's learning process, leading to fair and accurate results.

Engineers can employ various methods to ensure fair scaling across the variables. Min-max scaling is a popular and easy-to-understand method that transforms each feature to a range between zero and one: the minimum value becomes zero, the maximum value becomes one, and all other values are scaled proportionally in between.
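
As a minimal sketch of min-max scaling, the following Python snippet rescales a single feature. The function name `min_max_scale` and the sample heights are made up for illustration, and mapping a constant feature to zero is an assumption, since the formula is undefined when the minimum equals the maximum.

```python
def min_max_scale(values):
    """Rescale a list of numbers so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # Assumption: a constant feature has no spread, so map everything to 0.0.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical feature: body heights in centimeters.
heights_cm = [150, 160, 170, 180, 190]
scaled = min_max_scale(heights_cm)
print(scaled)  # [0.0, 0.25, 0.5, 0.75, 1.0]
```

Each value keeps its relative position between the smallest and largest observation, which is what makes the result easy to interpret.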

In another popular approach, Z-score standardization, each variable is converted to have a mean of zero and a standard deviation of one. The method achieves this by subtracting the variable's mean from each value in the dataset and then dividing the result by the variable's standard deviation.
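
The same idea can be sketched for Z-score standardization. The function name `z_score` and the sample data are illustrative. Using the population standard deviation (`statistics.pstdev`) is an assumption; some tools divide by the sample standard deviation instead.

```python
from statistics import mean, pstdev


def z_score(values):
    """Standardize a list of numbers to mean 0 and standard deviation 1."""
    mu = mean(values)
    sigma = pstdev(values)  # Assumption: population standard deviation.
    if sigma == 0:
        # A constant feature cannot be standardized; map everything to 0.0.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


# Hypothetical feature with mean 5 and population standard deviation 2.
weights_kg = [2, 4, 4, 4, 5, 5, 7, 9]
standardized = z_score(weights_kg)
print([round(z, 2) for z in standardized])
# [-1.5, -0.5, -0.5, -0.5, 0.0, 0.0, 1.0, 2.0]
```

Unlike min-max scaling, the result is not confined to a fixed range: values far from the mean yield large positive or negative scores.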

How Oversampling and Undersampling Help Maintain Fairness

Datasets with significant disparities in class distribution (e.g., 90% positive instances, 10% negative) can cause certain classifiers, such as kNN, to ignore the minority class or overestimate the importance of the majority class (here, the positive samples). In such cases, the training set can be rebalanced using one of two methods. In undersampling, entries from the majority class are omitted, while in oversampling, samples from the minority class are duplicated to achieve a balanced representation of the classes.
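
Both rebalancing strategies can be sketched in a few lines of Python. The helper names and the 90/10 toy dataset are hypothetical, and picking entries at random with a fixed seed is an assumption; the text does not prescribe how entries are chosen for omission or duplication.

```python
import random


def random_undersample(majority, minority, seed=0):
    """Keep only as many majority samples as there are minority samples."""
    rng = random.Random(seed)
    return rng.sample(majority, len(minority)), list(minority)


def random_oversample(majority, minority, seed=0):
    """Duplicate randomly chosen minority samples until both classes match."""
    rng = random.Random(seed)
    missing = len(majority) - len(minority)
    duplicates = [rng.choice(minority) for _ in range(missing)]
    return list(majority), list(minority) + duplicates


# Toy training set mirroring the 90% / 10% split from the text.
positives = [("pos", i) for i in range(90)]
negatives = [("neg", i) for i in range(10)]

maj_u, min_u = random_undersample(positives, negatives)
maj_o, min_o = random_oversample(positives, negatives)
print(len(maj_u), len(min_u))  # 10 10
print(len(maj_o), len(min_o))  # 90 90
```

Undersampling discards 80 of the 90 positive samples here, while oversampling keeps all data but repeats each negative sample several times.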

Transforming Variables: One-Hot Encoding and Label-Encoding

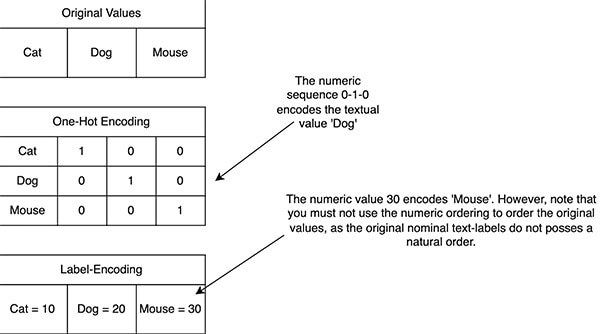

Some algorithms can only process numerical values, so some datasets require representing textual labels with numbers. One approach, one-hot encoding, introduces n new attributes, each representing one of the n original textual values. For every data point, the new features have a value of 0 everywhere except at the position that represents the data point's original label, which is set to 1.

Alternatively, in label encoding, each original label is assigned a distinct numerical value, and all data points with that label receive that number.

One-hot encoding is generally better suited for nominal variables without an inherent order. However, label encoding is often a better choice for values that can be compared, as the resulting numbers can be chosen to reflect the original ordering.
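
The two encodings can be illustrated with a short Python sketch. The function names, the color and size labels, and the alphabetical column order used for one-hot encoding are assumptions made for this example.

```python
def one_hot_encode(labels):
    """Return the category order and one 0/1 vector per label."""
    categories = sorted(set(labels))  # Assumption: alphabetical column order.
    encoded = [[1 if label == c else 0 for c in categories] for label in labels]
    return categories, encoded


def label_encode(labels, order):
    """Map each label to its position in an explicitly given ordering."""
    index = {label: i for i, label in enumerate(order)}
    return [index[label] for label in labels]


# Nominal variable without an inherent order: one-hot encoding.
colors = ["red", "green", "blue", "green"]
categories, one_hot = one_hot_encode(colors)
print(categories)  # ['blue', 'green', 'red']
print(one_hot)     # [[0, 0, 1], [0, 1, 0], [1, 0, 0], [0, 1, 0]]

# Comparable values: label encoding that preserves the ordering.
sizes = ["small", "large", "medium", "small"]
size_codes = label_encode(sizes, order=["small", "medium", "large"])
print(size_codes)  # [0, 2, 1, 0]
```

Passing the ordering explicitly keeps small < medium < large intact in the resulting numbers, which an arbitrary assignment would not guarantee.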

Addressing Missing Values in ML Preprocessing

The simplest way to deal with missing values is to delete the affected samples from the training dataset. However, this is not an option if the model later needs to handle missing values (e.g., during testing).

Alternatively, one can introduce a custom N/A or null value, copy the value from a randomly selected other entry, or compute a replacement such as the average of the known values (rounded to the nearest integer where the attribute requires it). These imputation methods are only meaningful when the number of missing values is small and when the entries with missing data are too critical to be deleted.
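
Deletion and mean imputation might look as follows in Python. This is a sketch under stated assumptions: missing entries are represented by `None`, each sample is a list of numbers, and the helper names are hypothetical.

```python
from statistics import mean


def drop_missing(rows):
    """Delete every sample that contains at least one missing value."""
    return [row for row in rows if None not in row]


def impute_mean(rows, column):
    """Replace missing entries in one column with the mean of the known values."""
    known = [row[column] for row in rows if row[column] is not None]
    fill = mean(known)
    return [
        row[:column] + [fill] + row[column + 1:] if row[column] is None else list(row)
        for row in rows
    ]


# Toy samples; the second one lacks a value in column 1.
samples = [[1.0, 20.0], [2.0, None], [3.0, 40.0]]
print(drop_missing(samples))    # [[1.0, 20.0], [3.0, 40.0]]
print(impute_mean(samples, 1))  # [[1.0, 20.0], [2.0, 30.0], [3.0, 40.0]]
```

Deletion shrinks the dataset, whereas imputation keeps every sample at the cost of introducing an estimated value.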

How to Apply Preprocessing to Prevent Data Leakage

Data leakage describes a problem where information from the test set leaks into the training process, which must never happen. Therefore, the original dataset must always be divided first (e.g., using holdout or cross-validation) before applying any preprocessing steps. These preprocessing steps should then be derived from and applied to the training data only, not the test dataset. The test sample must always remain unaltered to simulate realistic conditions when evaluating the model’s performance later.
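
The order of operations can be sketched in Python: split first, then learn the preprocessing parameters from the training partition alone. The function names and toy data are hypothetical. Reusing the training-derived minimum and maximum to bring the test values onto the same scale is an assumption that goes beyond the text; the point of the sketch is that the test values never influence those parameters.

```python
import random


def holdout_split(samples, test_fraction=0.2, seed=0):
    """Shuffle a copy of the data and split it into training and test parts."""
    rng = random.Random(seed)
    shuffled = list(samples)
    rng.shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def fit_min_max(train_values):
    """Learn the scaling parameters from the training data only."""
    return min(train_values), max(train_values)


def apply_min_max(values, lo, hi):
    """Scale values with previously learned parameters."""
    span = (hi - lo) or 1.0  # Guard against a constant training feature.
    return [(v - lo) / span for v in values]


data = [float(v) for v in range(1, 11)]

# Step 1: divide the dataset before any preprocessing.
train, test = holdout_split(data)

# Step 2: derive the parameters from the training partition alone.
lo, hi = fit_min_max(train)
train_scaled = apply_min_max(train, lo, hi)

# Step 3 (assumption): reuse the training parameters for the test values.
# Results may fall outside [0, 1] because the test data never shaped lo and hi.
test_scaled = apply_min_max(test, lo, hi)
```

Computing `lo` and `hi` on the full dataset before splitting would be the leaky variant, since the test values would then shape how the training data is scaled.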

Summary

Preprocessing is a critical step in machine learning projects that prepares data for analysis and lays the groundwork for the project's success. It involves addressing issues like missing values, outliers, and inconsistencies, and encoding features in a suitable format for the algorithm. Preprocessing enhances data quality, reduces noise, and helps prevent overfitting.

Scaling techniques like min-max scaling ensure that features contribute equally to the learning process by mapping all values to the range between zero and one. Z-score standardization, in contrast, converts variables to have a mean of zero and a standard deviation of one.

Rebalancing techniques like oversampling and undersampling can address class imbalances in the data. Transforming variables through one-hot encoding and label encoding enables the processing of textual data in algorithms that can only handle numbers. Handling missing values can involve deletion, imputation, or assigning custom values.

Finally, dividing the original dataset before preprocessing is crucial to prevent data leakage, where information from the test set influences the training process. The test set should remain unchanged for a realistic evaluation of the model's performance.
