Common Problems and Pitfalls with Multi-Thread Programming

2022-05-16 | By Maker.io Staff

When introducing multi-threaded processing into applications, programmers have to watch out for a range of problems that commonly occur in concurrent programs. For example, programmers may need to enforce the order in which concurrent tasks run on the CPU. They may also need to limit how many threads can access shared variables and files at the same time to prevent data-consistency problems.

Read below to learn more about a few common issues in concurrent programs.

The Main Problems in Multi-Threaded Programming

Typically, the first step in developing a concurrent algorithm is to break down the original problem into smaller chunks that a thread can solve as independently from the other threads as possible.
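As a minimal sketch of this decomposition step, the Python example below sums a list by splitting it into chunks. Each thread gets its own chunk and its own output slot, so no two threads ever write to the same location. The function name `parallel_sum` and the chunking scheme are illustrative assumptions, not part of any particular library.

```python
import threading

def parallel_sum(numbers, num_threads=4):
    """Sum a list by giving each thread its own, independent chunk."""
    # Each thread writes only to its own slot, so threads never share a write target.
    partial_sums = [0] * num_threads
    chunk_size = (len(numbers) + num_threads - 1) // num_threads  # ceiling division

    def worker(index):
        start = index * chunk_size
        chunk = numbers[start:start + chunk_size]
        partial_sums[index] = sum(chunk)

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait for every chunk before combining the partial results
    return sum(partial_sums)

print(parallel_sum(list(range(1, 101))))  # prints 5050
```

The decomposition pattern is the point here. In standard CPython builds, the global interpreter lock limits the speedup threads can deliver for CPU-bound work like this.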

In reality, however, the threads and processes of a parallel program compete for the computer’s limited shared resources. Some resources are even scarcer, for example, a single file on a hard disk. Multiple threads can read a file at the same time without risking data consistency as long as nobody writes to it, but only one thread at a time should write to the file. In addition, many algorithms have a strict, predetermined order in which the threads must execute the individual steps. When a program contains multiple threads, some of them may need to wait for other threads to finish before they can proceed to the next step of the algorithm.

Therefore, the two main problems in multi-threaded programming are managing concurrent access to shared resources and ensuring that all threads execute a given sequence of steps in the correct order.

Why Programmers Need to Worry About Synchronization in Threads

As mentioned in the last article, there’s no guarantee that the operating system’s scheduler executes the threads of a user program in any particular order. The OS might pause threads mid-execution and resume them later, for example, to handle an event that requires the attention of the system software. That article also mentioned that all threads within a process share the same memory, including the heap and global variables. Therefore, all threads can access and modify global program variables at any time and in any order. As practical as this is for exchanging data between threads, shared memory can quickly become a problem when it isn’t managed correctly.

As a simple example, suppose that your bank uses an incorrectly implemented payment algorithm. You order a product online, and the company charges your account as soon as it ships your item. Unfortunately for the bank, this happens while you’re out getting groceries. As you swipe your card through the checkout terminal, the store’s cash register obtains a copy of your account balance from the bank. Just at that moment, the online retailer requests payment from your bank, which successfully goes through. However, the store’s machine is a bit slower, and it takes a few seconds to process your payment. Once done, the grocery store’s checkout terminal sends the bank a new balance that it calculated from the old balance it obtained earlier. By doing so, the terminal overwrites the transaction that the online retailer submitted just a few seconds before, and the online purchase is never deducted from your account.

This flowchart illustrates the bank example from above. Both concurrent processes (the online retailer and the grocery store terminal) obtain a copy of the account balance from the bank. However, the store terminal overwrites the correct result of the online retailer’s transaction, which yields an incorrect balance in the bank account.
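The bank scenario is a classic lost-update race. The following Python sketch reproduces it deterministically. The starting balance, prices, and function names are hypothetical, and the `Barrier` and `Event` objects exist only to force the unlucky interleaving described above, which in a real program would happen by chance.

```python
import threading

balance = 100  # hypothetical shared account balance

# Scaffolding that forces the unlucky interleaving from the bank example.
both_read = threading.Barrier(2)
online_done = threading.Event()

def online_retailer(price):
    global balance
    local_copy = balance          # read the balance
    both_read.wait()              # by now, the store terminal has read it too
    balance = local_copy - price  # write: 100 - 30 = 70
    online_done.set()

def store_terminal(price):
    global balance
    local_copy = balance          # read the same, soon-to-be stale balance
    both_read.wait()
    online_done.wait()            # the slower terminal finishes after the retailer
    balance = local_copy - price  # write: 100 - 20 = 80, overwriting the retailer's 70

threads = [threading.Thread(target=online_retailer, args=(30,)),
           threading.Thread(target=store_terminal, args=(20,))]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(balance)  # prints 80 instead of the correct 50
```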

Methods for Establishing Synchronization and Consistency in Programs

Any mechanism that coordinates threads, for example, by preventing two threads from modifying the same area of shared memory simultaneously, is called a synchronization mechanism. This description is not limited to the memory that threads within the same process share. It also applies to any other shared resource, for example, a text file on the HDD. When such a mechanism is not in place, threads may overwrite or corrupt the results of other threads, leading to incorrect output. In general, two broad concepts can help programmers achieve various levels of consistency in concurrent applications.

Condition synchronization ensures that two or more threads obey a specific order when running code. Programmers can, for example, specify that thread A must always complete task X before thread B executes task Y.
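A minimal Python sketch of condition synchronization, assuming a `threading.Event` as the signaling mechanism (condition variables and semaphores are common alternatives). The names `thread_a`, `thread_b`, and `task_x_done` are illustrative. Thread B is started first on purpose to show that it still cannot run task Y before thread A finishes task X.

```python
import threading

task_x_done = threading.Event()
log = []  # records the order in which the tasks actually ran

def thread_a():
    log.append("A: task X")
    task_x_done.set()    # signal that task X has completed

def thread_b():
    task_x_done.wait()   # block until thread A sends the signal
    log.append("B: task Y")

b = threading.Thread(target=thread_b)
a = threading.Thread(target=thread_a)
b.start()  # started first, but it waits for A
a.start()
a.join()
b.join()
print(log)  # prints ['A: task X', 'B: task Y']
```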

Mutual exclusion (mutex) ensures data consistency by allowing only a single thread at a time to enter a critical region of a program. Therefore, a mutex helps programmers avoid data-consistency issues such as the one in the hypothetical bank example.
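Applied to the bank example, a mutex turns the read-modify-write of the balance into a critical region. This Python sketch assumes a single `threading.Lock` guarding a hypothetical shared `balance`. With the lock in place, the two charges cannot interleave, so neither update is lost.

```python
import threading

balance = 100                    # hypothetical shared account balance
balance_lock = threading.Lock()  # guards every read-modify-write of balance

def charge(price):
    global balance
    # The whole read-modify-write is the critical region: one thread at a time.
    with balance_lock:
        local_copy = balance
        balance = local_copy - price

threads = [threading.Thread(target=charge, args=(30,)),   # online retailer
           threading.Thread(target=charge, args=(20,))]   # store terminal
for t in threads:
    t.start()
for t in threads:
    t.join()

print(balance)  # prints 50
```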

What Is a Deadlock in a Concurrent Program?

Fixing race conditions (such as the one in the bank example) and other synchronization problems can introduce deadlocks into a program if developers aren’t careful when using condition synchronization and mutual exclusion. A deadlock happens when two or more concurrent threads or processes block each other from progressing, typically because each one waits for a resource held by another, forming a circular dependency that never gets resolved.

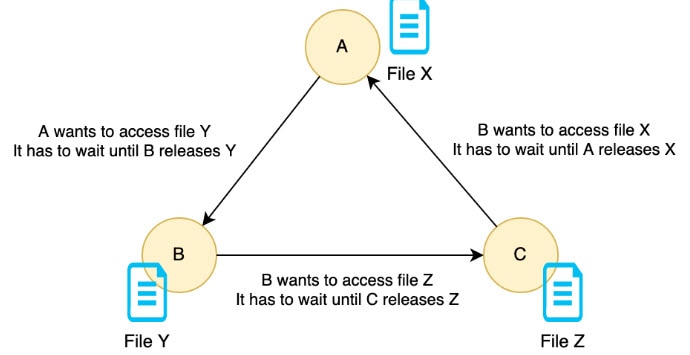

In this example, thread A holds the access rights to file X, thread B locks file Y, and thread C uses file Z. None of the threads can progress until one of the other threads releases the file it currently holds. However, that won’t happen until one of the threads makes progress. So none of the threads can progress, and they block each other indefinitely.
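The following Python sketch reproduces the three-thread cycle, with locks standing in for the files. It assumes that A waits for file Y, B for file Z, and C for file X, which closes the cycle. So that the demo terminates, each thread uses `acquire(timeout=...)` instead of waiting forever. The two barriers are illustrative scaffolding: the first makes every thread hold its own file before requesting the next one, and the second keeps all files locked until every attempt has ended.

```python
import threading

# Hypothetical stand-ins for exclusive access to files X, Y, and Z.
file_x, file_y, file_z = threading.Lock(), threading.Lock(), threading.Lock()
all_hold_first = threading.Barrier(3)  # every thread holds its own file first
all_tried = threading.Barrier(3)       # no file is released until all attempts end
results = {}

def worker(name, holds, wants):
    with holds:
        all_hold_first.wait()
        # Without the timeout, this call would block forever: the owner of
        # `wants` is itself waiting for a file held by another thread in the cycle.
        got_it = wants.acquire(timeout=0.2)
        all_tried.wait()
        if got_it:
            wants.release()
    results[name] = got_it

threads = [threading.Thread(target=worker, args=("A", file_x, file_y)),
           threading.Thread(target=worker, args=("B", file_y, file_z)),
           threading.Thread(target=worker, args=("C", file_z, file_x))]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(sorted(results.items()))  # prints [('A', False), ('B', False), ('C', False)]
```

Every thread gives up, which shows that none of them could obtain its second file while the cycle existed. Acquiring with a timeout is one way a program can notice such a situation instead of hanging.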

Imagine the following scenario: You’re in the cereal aisle of a grocery store when you see someone standing right in front of the cereal you want to buy. So you decide to wait for them to leave, and you look at other boxes on the shelf while you wait. However, you don’t know that the other person is waiting for you to put down the package you’re looking at right now. So neither of you makes any progress as you both wait for each other to put down the box and leave. This scenario is a very simple example of a deadlock.

Unfortunately, there’s no universal solution to this dilemma, although there are multiple ways to resolve it. In concurrent programming, however, it’s usually best to avoid potential deadlocks altogether. Recovering from a deadlock is typically left to the operating system. Still, programmers can implement custom mechanisms such as a watchdog thread that detects situations where none of the other threads makes any progress for a certain period. The watchdog thread can then restart the threads if necessary.
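A watchdog like the one described above could look roughly like this Python sketch. It assumes that workers report progress by incrementing a shared counter (a hypothetical convention, not a standard API), and the watchdog flags a stall when the counter stops changing for longer than a timeout. The worker deliberately stops making progress after ten steps to simulate a stuck thread.

```python
import threading
import time

progress = 0                           # hypothetical counter that workers bump on progress
progress_lock = threading.Lock()
stalled = threading.Event()            # set by the watchdog when progress stops
release_stuck_worker = threading.Event()

def worker(steps):
    global progress
    for _ in range(steps):
        with progress_lock:
            progress += 1
        time.sleep(0.01)
    # Simulate a thread that is stuck (e.g., deadlocked) and stops making progress.
    release_stuck_worker.wait()

def watchdog(timeout, check_interval=0.02):
    last_seen, last_change = -1, time.monotonic()
    while not stalled.is_set():
        with progress_lock:
            current = progress
        now = time.monotonic()
        if current != last_seen:
            last_seen, last_change = current, now
        elif now - last_change > timeout:
            # A real watchdog might log the stall, alert someone, or restart workers.
            stalled.set()
        time.sleep(check_interval)

w = threading.Thread(target=worker, args=(10,))
dog = threading.Thread(target=watchdog, args=(0.3,))
w.start()
dog.start()
dog.join()                 # returns only once the stall has been detected
print(stalled.is_set())    # prints True
release_stuck_worker.set() # let the simulated stuck worker exit
w.join()
```

Python provides no way to forcibly stop a running thread, so what “restarting” means is application-specific, for example asking workers to exit cooperatively and then starting fresh ones.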

Summary

Threads share some resources, and they run on the same hardware. Therefore, the operating system must schedule the threads so that each thread eventually gets to run. However, there’s no guarantee that threads execute code in any particular order. If your algorithm relies on the threads following a particular order of execution, you must enforce that order with condition synchronization.

Similarly, accessing shared data from different threads can quickly become a problem. Concurrent reads are typically harmless. However, as soon as threads start writing to a shared resource or memory location (for example, a file on the HDD), programmers must ensure that only one thread can access the critical resource at any given time. Otherwise, threads might overwrite data written by other threads and corrupt the result.
