An Introduction to Multi-Threading C Programs

2022-05-09 | By Maker.io Staff

Some algorithms and programs take a long time to finish, and they could potentially benefit from parallelization. However, writing parallel programs is not an easy task, and it takes practice and special techniques. Programmers have to pay close attention to how their programs manage concurrent data access and the lifecycle of multiple threads. Therefore, this article presents a short overview of POSIX threads in C, and it demonstrates how you can leverage parallelization to speed up long-running algorithms in your C programs.

What are Multi-Threaded Programs?

You can employ threads or processes to introduce parallelism into computer applications. When using processes, each process runs independently from the others, either on the same physical machine or on a network of distributed computers. Here, the processes need to exchange messages that contain data such as remote procedure calls, calculation results, and synchronization messages. A second method utilizes threads that a single computer executes as parts of a more complex program.

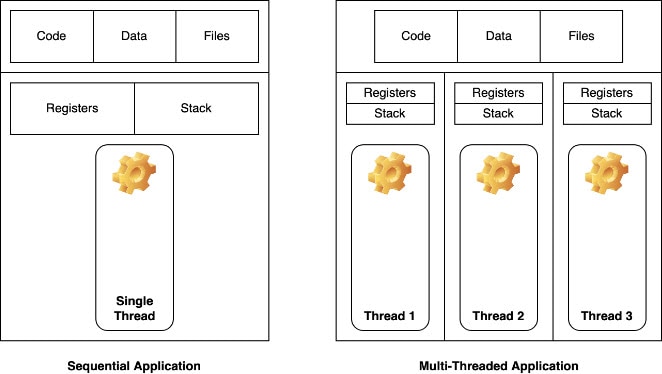

There are various benefits and disadvantages to either method, and there’s no single one-size-fits-all solution. However, in general, threads are more lightweight units compared to processes. Each thread has its own stack region in memory and executes code independently of other threads. However, threads are not entirely isolated units. Instead, all threads within a process share the same heap memory region, so all threads of a process can access the same global variables and filehandles.

This image illustrates the relationship between multiple threads of the same process.

This image illustrates the relationship between multiple threads of the same process.

Lastly, it’s important to remember that it’s a computer’s operating system that schedules threads and determines when they may run on the CPU. As threads are smaller parts of a process, the OS can switch between them faster than it could swap processes. In addition, keep in mind that there’s no guarantee that the operating system’s scheduler will let the threads run in any given order, nor that they are actually going to run in parallel.

It’s also important to remember that you can only achieve true parallelism on multi-core CPUs. However, that doesn’t mean that a single-thread system can’t benefit from threads.

The Benefits of Using Multiple Threads in a Program

Many computer programs access external data - for example, a file on a hard disk or cloud storage server. Doing so might take a few seconds, and a strictly non-parallel program would block at this point. However, if the program uses a separate thread for reading the file, the rest of the program (like a graphical UI) can keep responding to user input.

In addition, you can often achieve a significant speed-up in programs that are easy to parallelize. Not all algorithms benefit from parallelization and adding unnecessary parallelism can sometimes slow programs down. Switching between threads and processes takes some time, and too many threads might create overhead for the OS. However, threads are perfect for algorithms that can easily be parallelized, for example, merge sort. Here, each thread can operate on a single part of the array the program has to sort, and the threads won’t get in each other’s way. You do have to be careful whenever threads operate on the same data set, as they can easily overwrite or corrupt the data written by another thread.

Modern Computing Wouldn’t be the Same Without Threads

Threads are a great way to ensure that an application remains responsive even when the program makes blocking calls, like a file access operation. Another example includes web servers, which handle multiple client requests simultaneously. Without threads, a server could reply to only one request at a time. Whenever a user visits a web page, the server would have to execute all necessary tasks and then send the reply to the user. Doing so might take several seconds, depending on the complexity of the website. During this time, the server would have to put all other incoming requests into a queue to handle them later.

Imagine how long that queue would become for popular websites and how long you’d have to wait for the website to load if the server could only handle one request at a time! Instead, the server can generate a new thread or re-use an existing one from a pool for each incoming request to serve all users concurrently.

Writing a Simple POSIX-Thread Hello World Program

As mentioned, this article only discusses POSIX threads, which you can use on Unix-like operating systems. However, the basic principles apply to all thread implementations, programming languages, and operating systems. Consider the following example:

Copy Code

/* Include statements omitted */

#define NUM_THREADS 8

#define MAX_LENGTH 40000

#define CHUNK_SIZE (MAX_LENGTH / NUM_THREADS) * 2

/* Function prototypes and struct definition omitted */

void* createThread(void *args)

{

struct arg_struct *vals = args;

longRunningTask(vals->start, vals->end, MAX_LENGTH / NUM_THREADS);

pthread_exit(NULL);

}

void longRunningTask(unsigned start, unsigned end, unsigned chunk)

{

unsigned* matrix = malloc(chunk * chunk * sizeof(unsigned));

if(matrix == NULL)

return;

int index = 0;

for(int i = start; i < end; i++)

{

for(int u = start; u < end; u++)

matrix[index++] = i * u;

}

free(matrix);

}

int main(int argc, char* argv[])

{

/* Print statements and time measurement omitted */

/* Sequential execution */

longRunningTask(0, MAX_LENGTH, MAX_LENGTH * MAX_LENGTH);

/* Parallel execution */

pthread_t threads[NUM_THREADS];

for(int i = 0; i < NUM_THREADS; i++)

{

int start = i * CHUNK_SIZE;

int end = (start + CHUNK_SIZE) - 1;

struct arg_struct thread_args;

thread_args.start = start;

thread_args.end = end;

thread_args.threadID = i;

int rc = pthread_create(&threads[i], NULL, createThread, (void*)&thread_args);

if (rc)

{

return -1;

}

}

for(int i = 0; i < NUM_THREADS; i++)

{

pthread_join(threads[i], NULL);

}

return 0;

}

The code above builds a large matrix of unsigned integer values. In the presented configuration, the matrix contains 1.6 billion entries. The longRunningTask-function implements the matrix-building procedure. When creating the entire matrix, the method first allocates enough memory to store all elements in the matrix. It then iterates over all entries and performs a simple multiplication. The function then places the result in the matrix. Note that the method contains three parameters that help the parallel threads break down the matrix calculation into smaller computational chunks.

The sequential task performs 40,000 x 40,000 calculations, which takes a while. Meanwhile, each parallel-running thread only has to perform 20,000 x 20,000 computations. The threads don’t only have to complete less work per thread, but they also do it in parallel.

The sequential task performs 40,000 x 40,000 calculations, which takes a while. Meanwhile, each parallel-running thread only has to perform 20,000 x 20,000 computations. The threads don’t only have to complete less work per thread, but they also do it in parallel.

The main function is what starts and times both the sequential computation and the parallel threads. The sequential approach doesn’t split the matrix into smaller sub-matrices. Instead, it starts at index zero and ends at the maximum defined number of entries. However, once the sequential computation call returns, the main function generates several threads (eight in this example). The first for-loop in the main function creates the new threads. Here, I first define where each thread’s sub-matrix starts and ends. The threads split the huge matrix into smaller, more manageable parts. Then, each thread executes the createThread function that calls the longRunningTask method with its custom start and end parameters. Once a thread returns from the longRunningTask, the last line in the createThread function destroys that thread.

Lastly, note the second for-loop in the main method. This loop makes the main program wait for all threads to finish before the program stops. Furthermore, note that the program discards all results from all computations. In a real-world scenario, you’d have to assemble the sub-matrices to a larger one in order to get the correct result. When I run this program on my computer, the execution time dramatically differs between the parallel and the sequential executions:

This image shows the output of the example program above. Note how the sequential calculation takes around four seconds to finish, while the multi-threaded execution only requires a little over two and a half seconds.

This image shows the output of the example program above. Note how the sequential calculation takes around four seconds to finish, while the multi-threaded execution only requires a little over two and a half seconds.

Summary

Threads are extremely useful in modern software development, as they help a system remain responsive even when it executes long-running tasks. Multi-tasking requires an operating system that can schedule tasks on a shared CPU. Threads are more lightweight than processes, and the OS can create, destroy, and switch between threads quickly. Each thread in a process executes its own code, stack, and registers. However, all the threads of a process share the same heap memory region and other data such as file handles.

Note that not every algorithm and use case benefits from multi-threading and parallelization. However, you can convert many algorithms to function with multiple threads. Here, you must pay close attention to synchronization and locking, as threads can easily overwrite data created by other threads, which might not be desirable. In addition, the main application should wait for all threads to finish before further processing the result.

Have questions or comments? Continue the conversation on TechForum, DigiKey's online community and technical resource.