How to Perform Object Detection with TensorFlow Lite on Raspberry Pi

2020-10-19 | By ShawnHymel

License: Attribution Raspberry Pi

Object detection is a difficult problem in the field of computer vision. It requires computers to look at an image (or individual frame from a video stream), identify any objects of interest, and then classify each object. This technique is useful for tracking people at a crosswalk, tracking faces for facial recognition, looking for obstacles in a self-driving car, and helping robots identify objects they can manipulate.

In this tutorial, I’ll walk you through the process of installing TensorFlow Lite on a Raspberry Pi and using it to perform object detection with a pre-trained Single Shot MultiBox Detector (SSD) model.


Required Hardware

You will need the following hardware to complete this tutorial:

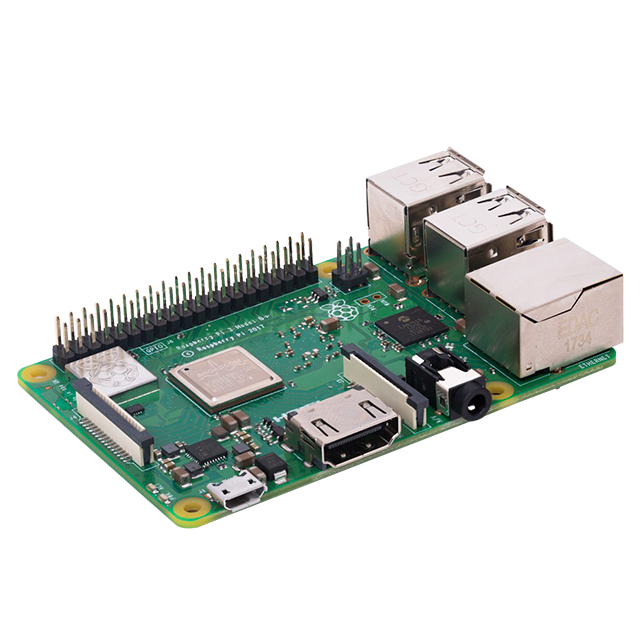

- Raspberry Pi 3B+, Raspberry Pi 4B (4 GB), or Raspberry Pi 4B (8 GB)

- Raspberry Pi Camera V2

- Keyboard, mouse, and monitor to initially configure the Pi (you can use VNC later, if you wish)

Prepare Raspberry Pi

For this tutorial, you can use a Raspberry Pi 3B+ or Raspberry Pi 4 (4 or 8 GB model). While either will work, object detection runs much faster on the Pi 4, as it has a faster processor and more memory. In my experience, the TensorFlow Lite (TFLite) model used in this tutorial will give you about 1 frame per second (fps) on the Pi 3 and about 5 fps on the Pi 4.
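To put those numbers in perspective, 1 fps means roughly one second of processing per frame, while 5 fps means about 200 ms per frame. The detection program later in this tutorial measures its frame rate by timing each loop iteration with cv2.getTickCount() and cv2.getTickFrequency(). The sketch below reproduces that arithmetic using the standard library's time.perf_counter() instead of OpenCV (an assumption made so it runs without OpenCV installed); `fps_from_ticks` and `measure_fps` are hypothetical helper names.

```python
import time


def fps_from_ticks(t1, t2, freq):
    """Same arithmetic as the detection loop: seconds = ticks / ticks-per-second."""
    elapsed = (t2 - t1) / freq
    return 1 / elapsed


def measure_fps(process_frame, frames=10):
    """Average frame rate of process_frame() over several calls (hypothetical helper)."""
    start = time.perf_counter()
    for _ in range(frames):
        process_frame()
    elapsed = time.perf_counter() - start
    return frames / elapsed


if __name__ == "__main__":
    # 200 ticks at 1000 ticks per second is 0.2 s per frame, i.e. about 5 fps
    print(fps_from_ticks(0, 200, 1000))
    # Stand-in workload; replace it with your own per-frame processing
    print(round(measure_fps(lambda: time.sleep(0.01)), 1))
```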

Connect a Raspberry Pi camera to the Raspberry Pi’s camera slot (ZIF connector in the middle of the board). Note that I used a Pi camera V2 for this demo, but a V1 camera or USB webcam should also work.

Follow this guide to install the latest version of Raspbian onto a microSD card. When you boot the Pi, go through the steps to configure the operating system (use the command raspi-config if you do not get an initial pop-up window). Specifically, you will want the following:

- Change the default password

- Keyboard and language set to your region

- Internet connection

- Enable camera interface (if you’re using the Pi camera)

- (Optional) enable SSH and VNC if you want to work on your Pi remotely

See this guide on using raspi-config.

Install TensorFlow Lite

Open a new terminal. At the time of this tutorial’s writing, Raspbian defaults to Python 2, and we need Python 3, so I like to make the commands python and pip use version 3 by default. To do that, edit .bashrc:

```bash
cd ~
nano .bashrc
```

At the bottom of that document, add the following lines:

```bash
alias python=python3
alias pip=pip3
```

Save and exit the document (ctrl-x, then press y and Enter to confirm saving). Reload the file with the source command so you don’t need to restart the terminal:

```bash
source .bashrc
```

Let’s create a virtual environment so we can keep installed libraries and packages separate from our main terminal environment. TensorFlow can require very specific versions of libraries, so a virtual environment helps keep things separate from the rest of your development work. We’ll create a project directory and then use Python’s venv module to create an environment named tflite-env inside it (the commands below also install the virtualenv package, although python -m venv uses the venv module that ships with Python 3). Enter the following:

```bash
mkdir -p Projects/Python/tflite
cd Projects/Python/tflite
python -m pip install virtualenv
python -m venv tflite-env
```

Now, we should have a virtual environment created in our tflite directory. Each time we wish to execute a TensorFlow Lite program, we must first activate the tflite-env virtual environment, as that’s where all of our packages and libraries are stored. To do that, run the activate script in our environment’s directory with:

```bash
source tflite-env/bin/activate
```

Your prompt should change to have a (tflite-env) preceding the directory listing. This will let you know that you are working in a virtual environment.
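If you’re ever unsure whether the environment is active, you can also check from inside Python. This is a minimal sketch, assuming the environment was created with python -m venv as above: venv sets sys.prefix to the environment’s directory, while sys.base_prefix keeps pointing at the system Python. The real_prefix check covers environments made by older releases of virtualenv. `in_virtualenv` is a hypothetical helper name.

```python
import sys


def in_virtualenv():
    """Return True when the running interpreter belongs to a virtual environment."""
    # Older virtualenv releases set sys.real_prefix instead of using base_prefix
    if hasattr(sys, "real_prefix"):
        return True
    # venv: sys.prefix is the environment directory, sys.base_prefix the system install
    return sys.prefix != getattr(sys, "base_prefix", sys.prefix)


if __name__ == "__main__":
    print("Virtual environment active:", in_virtualenv())
    print("Interpreter prefix:", sys.prefix)
```

Run with the environment activated, it should report True and a prefix ending in tflite-env.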

Next, install the following libraries, which assist OpenCV in processing images and videos. Note that apt installs these system-wide, not inside the virtual environment:

```bash
sudo apt -y install libjpeg-dev libtiff5-dev libjasper-dev libpng12-dev libavcodec-dev libavformat-dev libswscale-dev libv4l-dev libxvidcore-dev libx264-dev
```

Next, we need to install a few libraries to help with the OpenCV and TensorFlow Lite backend and GUI processing:

```bash
sudo apt -y install qt4-dev-tools libatlas-base-dev libhdf5-103
```

When those libraries have finished installing, use pip to install OpenCV. Specifically, we want v4.1.0.25, as that seems to work best for now:

```bash
python -m pip install opencv-contrib-python==4.1.0.25
```

Then, enter the following to figure out what type of processor you have and which version of Python you are using:

```bash
uname -m
python --version
```

On a Raspberry Pi 3 or 4 running Raspbian, uname -m should report “armv7l.” For me, Python is version 3.7.

“armv7l” indicates a 32-bit ARM architecture, which we’ll need to know for the next part. At the time of this writing, TensorFlow Lite works with Python versions 3.5 through 3.8. If you do not have one of these versions, follow these instructions to install a different version of Python in your virtual environment.
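The same two checks can be made from Python, which is handy inside scripts. This is a sketch rather than part of the original tutorial: platform.machine() reports the same value as uname -m, and the 3.5 to 3.8 range is the one quoted above for the time of writing, so update it if the TensorFlow Lite page lists different versions. `python_supported` is a hypothetical helper.

```python
import platform
import sys

# Python versions TensorFlow Lite supported when this tutorial was written
OLDEST_SUPPORTED = (3, 5)
NEWEST_SUPPORTED = (3, 8)


def python_supported(version=None):
    """Check a (major, minor[, micro]) version against the supported range."""
    major_minor = tuple(version if version is not None else sys.version_info)[:2]
    return OLDEST_SUPPORTED <= major_minor <= NEWEST_SUPPORTED


if __name__ == "__main__":
    print("Machine:", platform.machine())  # e.g. armv7l on 32-bit Raspbian
    print("Python: {}.{}".format(*sys.version_info[:2]))
    print("Supported by TensorFlow Lite (at time of writing):", python_supported())
```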

Open an Internet browser on your Pi and head to tensorflow.org/lite/guide/python. Scroll down to the list of wheel (.whl) files and find the group that matches your OS/processor, which should be “Linux (ARM 32).” In that group, find the link that corresponds to your version of Python (3.7 for me). Right-click and select “Copy link address.”
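Wheel file names encode the Python version, ABI, and platform as a tag, so a Python 3.7 wheel for 32-bit Raspbian should end in something like cp37-cp37m-linux_armv7l.whl. The sketch below builds that tag for the running interpreter so you can compare it with the file names on the page. It is an approximation that assumes a standard (non-debug) CPython build: CPython 3.7 and earlier add an “m” to the ABI part, which 3.8 dropped. `wheel_tag` and `current_wheel_tag` are hypothetical helpers; the packaging library’s tag functions are the authoritative way to compute this.

```python
import sys
import sysconfig


def wheel_tag(major, minor, platform_tag):
    """Build a CPython wheel tag such as 'cp37-cp37m-linux_armv7l'."""
    # Standard CPython builds up to 3.7 use the "m" (pymalloc) ABI flag
    abi_flag = "m" if (major, minor) < (3, 8) else ""
    return "cp{0}{1}-cp{0}{1}{2}-{3}".format(major, minor, abi_flag, platform_tag)


def current_wheel_tag():
    """Tag for the running interpreter, e.g. platform 'linux-armv7l' -> 'linux_armv7l'."""
    platform_tag = sysconfig.get_platform().replace("-", "_").replace(".", "_")
    return wheel_tag(sys.version_info.major, sys.version_info.minor, platform_tag)


if __name__ == "__main__":
    print(current_wheel_tag())
```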

Back in the terminal, enter the following:

```bash
python -m pip install <paste in .whl link>
```

Paste in the link and press Enter. This installs the TensorFlow Lite interpreter (the tflite_runtime package) in your virtual environment; it may take some time to finish. Note that for this tutorial, I installed TensorFlow Lite v2.1.
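To confirm that the installs worked, you can check which packages Python can find. The sketch below mirrors the logic of the detection program later in this tutorial, which calls importlib.util.find_spec('tflite_runtime') to decide whether to import the interpreter from tflite_runtime or from full TensorFlow. The helper names are hypothetical.

```python
import importlib.util


def interpreter_backend():
    """Return the package the detection script would take Interpreter from."""
    if importlib.util.find_spec("tflite_runtime") is not None:
        return "tflite_runtime"
    if importlib.util.find_spec("tensorflow") is not None:
        return "tensorflow"
    return None


def missing_packages(names=("cv2", "numpy")):
    """List top-level modules that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    print("Interpreter backend:", interpreter_backend() or "not installed")
    print("Missing packages:", missing_packages() or "none")
```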

Download Pre-Trained Model

Training a machine learning model from scratch can be a time-consuming process. We will cover training a model for object detection in a future tutorial. For now, let’s start with a simple model that has been trained for us on the COCO dataset.

Common Objects in Context (COCO) is a large collection of images that have been tagged and labeled by researchers at Microsoft, Facebook, and a variety of universities. It contains over 200,000 images with around 90 object categories.

Object detection or object classification models can be trained on the COCO dataset to give us a starting point for recognizing everyday objects, such as people, cars, bicycles, cups, scissors, dogs, cats, and so on. We will use a MobileNet V1 model that has been trained on the COCO dataset as our object detection model.

On your Raspberry Pi, head to https://www.tensorflow.org/lite/models/object_detection/overview and download the Starter model and labels zip file. Note that if the link on that site does not work, you can download the same zip file here:

Download Starter Model and Labels

In a terminal, let’s move the .zip file to a working directory for our project and unzip it:

```bash
mkdir -p ~/Projects/Python/tflite/object_detection/coco_ssd_mobilenet_v1
cd ~/Projects/Python/tflite/object_detection
mv ~/Downloads/coco_ssd_mobilenet_v1_1.0_quant_2018_06_29.zip .
unzip coco_ssd_mobilenet_v1_1.0_quant_2018_06_29.zip -d coco_ssd_mobilenet_v1
```

You should see two files in the coco_ssd_mobilenet_v1 directory: detect.tflite and labelmap.txt. The .tflite file is our model. You can use a program like Netron to view the neural network. Notice that the input of the model is a 300x300x3 array (or, more accurately, a “tensor”).
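The model in this zip file is quantized (note “quant” in its name), so it takes 8-bit pixel values (0 to 255) as-is. The detection program also handles floating-point models: it checks whether the input dtype is float32 and, if so, maps each pixel to the range -1 to 1 using a mean and standard deviation of 127.5. The stdlib-only sketch below shows that arithmetic on a flat list of pixel values (the real program does it on a NumPy array); `prepare_pixels` and `tensor_size` are hypothetical names.

```python
# Values used by the detection program for non-quantized (float32) models
INPUT_MEAN = 127.5
INPUT_STD = 127.5

# Shape of the input tensor: one 300x300 image with 3 color channels
INPUT_SHAPE = (1, 300, 300, 3)


def prepare_pixels(pixels, floating_model):
    """Return pixel values in the form the model's input tensor expects."""
    if floating_model:
        # Map 0..255 to -1.0..1.0
        return [(p - INPUT_MEAN) / INPUT_STD for p in pixels]
    # Quantized models take the raw 0..255 values unchanged
    return list(pixels)


def tensor_size(shape):
    """Number of values in a tensor of the given shape."""
    count = 1
    for dim in shape:
        count *= dim
    return count


if __name__ == "__main__":
    print(prepare_pixels([0, 128, 255], floating_model=True))
    print(prepare_pixels([0, 128, 255], floating_model=False))
    print("Values per input tensor:", tensor_size(INPUT_SHAPE))
```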

The labelmap.txt file contains an ordered list of all the possible categories. These line up with the COCO categories that were used to train the accompanying MobileNet model.
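Because the list is ordered, the model reports each detection as a class index into it. In the COCO starter model, the first line is a “???” placeholder, which the detection program deletes so that index 0 lines up with the first real label. A minimal sketch of that lookup (the function names are hypothetical):

```python
def parse_labels(lines):
    """Strip whitespace and drop the COCO starter model's leading '???' entry."""
    labels = [line.strip() for line in lines]
    if labels and labels[0] == "???":
        del labels[0]
    return labels


def load_labels(path):
    """Read a label map file such as coco_ssd_mobilenet_v1/labelmap.txt."""
    with open(path, "r") as f:
        return parse_labels(f.readlines())


if __name__ == "__main__":
    labels = parse_labels(["???\n", "person\n", "bicycle\n", "car\n"])
    class_index = 0.0  # the model's class output is a float, so convert with int()
    print(labels[int(class_index)])
```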

Run Object Detection

GitHub user EdjeElectronics has a great Python program for object detection that we will use as a starting point. You can view the original program here: TFLite_detection_webcam.py.

The program captures a frame from the camera using OpenCV, resizes the frame to 300x300 pixels (note that aspect ratio is not maintained), and passes the resulting tensor to TensorFlow Lite. After invoke() runs the model, the program reads the output tensors, which hold the bounding box coordinates, class index, and confidence score of each detected object. For every object above the confidence threshold, the program uses these coordinates to draw a green rectangle around it before displaying the frame to the user.
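Each box comes back as [ymin, xmin, ymax, xmax] in normalized coordinates (0 to 1, relative to the image), which is why the distorted 300x300 resize does not matter: the program multiplies by the original frame’s width and height to get pixel positions, then clamps values that fall outside the frame. Here is that conversion pulled out into a stand-alone function; the name `to_pixel_box` is hypothetical, but the arithmetic matches the program.

```python
def to_pixel_box(box, im_w, im_h):
    """Convert a normalized [ymin, xmin, ymax, xmax] box to clamped pixel coordinates.

    Returns (xmin, ymin, xmax, ymax) in pixels, as used by cv2.rectangle().
    """
    # The model can return coordinates outside the image, so clamp them with max()/min()
    ymin = int(max(1, box[0] * im_h))
    xmin = int(max(1, box[1] * im_w))
    ymax = int(min(im_h, box[2] * im_h))
    xmax = int(min(im_w, box[3] * im_w))
    return xmin, ymin, xmax, ymax


if __name__ == "__main__":
    # A box covering the right half of a 1280x720 frame, vertically centered
    print(to_pixel_box((0.25, 0.5, 0.75, 1.0), 1280, 720))
```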

We are going to make a few additions. First, we will use cv2.WINDOW_NORMAL to create a window that can be resized. Second, we will add a section that computes the center of each object and lists detected objects to the console.
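The center dot is simply the midpoint of the pixel box, and the console line uses the same “Object i: name at (x, y)” format as the program’s print() call. A sketch with hypothetical helper names, taking detections as (name, score, pixel_box) tuples, which is an input format assumed here for illustration:

```python
def box_center(xmin, ymin, xmax, ymax):
    """Midpoint of a pixel box, computed the same way as in the detection loop."""
    xcenter = xmin + int(round((xmax - xmin) / 2))
    ycenter = ymin + int(round((ymax - ymin) / 2))
    return xcenter, ycenter


def report(detections, min_conf_threshold=0.5):
    """Build console lines for detections above the threshold.

    detections: list of (object_name, score, (xmin, ymin, xmax, ymax)) tuples.
    """
    lines = []
    for i, (object_name, score, pixel_box) in enumerate(detections):
        if min_conf_threshold < score <= 1.0:
            xcenter, ycenter = box_center(*pixel_box)
            lines.append("Object {}: {} at ({}, {})".format(i, object_name, xcenter, ycenter))
    return lines


if __name__ == "__main__":
    detections = [("person", 0.72, (640, 180, 1280, 540)), ("cup", 0.31, (10, 10, 50, 90))]
    for line in report(detections):
        print(line)
```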

In a text editor, paste the following code, which is EdjeElectronics' original program with our additions:

```python
######## Webcam Object Detection Using Tensorflow-trained Classifier #########
#
# Author: Evan Juras
# Date: 10/27/19
# Description:
# This program uses a TensorFlow Lite model to perform object detection on a live webcam
# feed. It draws boxes and scores around the objects of interest in each frame from the
# webcam. To improve FPS, the webcam object runs in a separate thread from the main program.
# This script will work with either a Picamera or regular USB webcam.
#
# This code is based off the TensorFlow Lite image classification example at:
# https://github.com/tensorflow/tensorflow/blob/master/tensorflow/lite/examples/python/label_image.py
#
# I added my own method of drawing boxes and labels using OpenCV.
#
# Modified by: Shawn Hymel
# Date: 09/22/20
# Description:
# Added ability to resize cv2 window and added center dot coordinates of each detected object.
# Objects and center coordinates are printed to console.

# Import packages
import os
import argparse
import cv2
import numpy as np
import sys
import time
from threading import Thread
import importlib.util

# Define VideoStream class to handle streaming of video from webcam in separate processing thread
# Source - Adrian Rosebrock, PyImageSearch: https://www.pyimagesearch.com/2015/12/28/increasing-raspberry-pi-fps-with-python-and-opencv/
class VideoStream:
    """Camera object that controls video streaming from the Picamera"""
    def __init__(self,resolution=(640,480),framerate=30):
        # Initialize the PiCamera and the camera image stream
        self.stream = cv2.VideoCapture(0)
        ret = self.stream.set(cv2.CAP_PROP_FOURCC, cv2.VideoWriter_fourcc(*'MJPG'))
        ret = self.stream.set(3,resolution[0])
        ret = self.stream.set(4,resolution[1])

        # Read first frame from the stream
        (self.grabbed, self.frame) = self.stream.read()

        # Variable to control when the camera is stopped
        self.stopped = False

    def start(self):
        # Start the thread that reads frames from the video stream
        Thread(target=self.update,args=()).start()
        return self

    def update(self):
        # Keep looping indefinitely until the thread is stopped
        while True:
            # If the camera is stopped, stop the thread
            if self.stopped:
                # Close camera resources
                self.stream.release()
                return

            # Otherwise, grab the next frame from the stream
            (self.grabbed, self.frame) = self.stream.read()

    def read(self):
        # Return the most recent frame
        return self.frame

    def stop(self):
        # Indicate that the camera and thread should be stopped
        self.stopped = True

# Define and parse input arguments
parser = argparse.ArgumentParser()
parser.add_argument('--modeldir', help='Folder the .tflite file is located in',
                    required=True)
parser.add_argument('--graph', help='Name of the .tflite file, if different than detect.tflite',
                    default='detect.tflite')
parser.add_argument('--labels', help='Name of the labelmap file, if different than labelmap.txt',
                    default='labelmap.txt')
parser.add_argument('--threshold', help='Minimum confidence threshold for displaying detected objects',
                    default=0.5)
parser.add_argument('--resolution', help='Desired webcam resolution in WxH. If the webcam does not support the resolution entered, errors may occur.',
                    default='1280x720')
parser.add_argument('--edgetpu', help='Use Coral Edge TPU Accelerator to speed up detection',
                    action='store_true')

args = parser.parse_args()

MODEL_NAME = args.modeldir
GRAPH_NAME = args.graph
LABELMAP_NAME = args.labels
min_conf_threshold = float(args.threshold)
resW, resH = args.resolution.split('x')
imW, imH = int(resW), int(resH)
use_TPU = args.edgetpu

# Import TensorFlow libraries
# If tflite_runtime is installed, import interpreter from tflite_runtime, else import from regular tensorflow
# If using Coral Edge TPU, import the load_delegate library
pkg = importlib.util.find_spec('tflite_runtime')
if pkg:
    from tflite_runtime.interpreter import Interpreter
    if use_TPU:
        from tflite_runtime.interpreter import load_delegate
else:
    from tensorflow.lite.python.interpreter import Interpreter
    if use_TPU:
        from tensorflow.lite.python.interpreter import load_delegate

# If using Edge TPU, assign filename for Edge TPU model
if use_TPU:
    # If user has specified the name of the .tflite file, use that name, otherwise use default 'edgetpu.tflite'
    if (GRAPH_NAME == 'detect.tflite'):
        GRAPH_NAME = 'edgetpu.tflite'

# Get path to current working directory
CWD_PATH = os.getcwd()

# Path to .tflite file, which contains the model that is used for object detection
PATH_TO_CKPT = os.path.join(CWD_PATH,MODEL_NAME,GRAPH_NAME)

# Path to label map file
PATH_TO_LABELS = os.path.join(CWD_PATH,MODEL_NAME,LABELMAP_NAME)

# Load the label map
with open(PATH_TO_LABELS, 'r') as f:
    labels = [line.strip() for line in f.readlines()]

# Have to do a weird fix for label map if using the COCO "starter model" from
# https://www.tensorflow.org/lite/models/object_detection/overview
# First label is '???', which has to be removed.
if labels[0] == '???':
    del(labels[0])

# Load the Tensorflow Lite model.
# If using Edge TPU, use special load_delegate argument
if use_TPU:
    interpreter = Interpreter(model_path=PATH_TO_CKPT,
                              experimental_delegates=[load_delegate('libedgetpu.so.1.0')])
    print(PATH_TO_CKPT)
else:
    interpreter = Interpreter(model_path=PATH_TO_CKPT)

interpreter.allocate_tensors()

# Get model details
input_details = interpreter.get_input_details()
output_details = interpreter.get_output_details()
height = input_details[0]['shape'][1]
width = input_details[0]['shape'][2]

floating_model = (input_details[0]['dtype'] == np.float32)

input_mean = 127.5
input_std = 127.5

# Initialize frame rate calculation
frame_rate_calc = 1
freq = cv2.getTickFrequency()

# Initialize video stream
videostream = VideoStream(resolution=(imW,imH),framerate=30).start()
time.sleep(1)

# Create window
cv2.namedWindow('Object detector', cv2.WINDOW_NORMAL)

#for frame1 in camera.capture_continuous(rawCapture, format="bgr",use_video_port=True):
while True:

    # Start timer (for calculating frame rate)
    t1 = cv2.getTickCount()

    # Grab frame from video stream
    frame1 = videostream.read()

    # Acquire frame and resize to expected shape [1xHxWx3]
    frame = frame1.copy()
    frame_rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
    frame_resized = cv2.resize(frame_rgb, (width, height))
    input_data = np.expand_dims(frame_resized, axis=0)

    # Normalize pixel values if using a floating model (i.e. if model is non-quantized)
    if floating_model:
        input_data = (np.float32(input_data) - input_mean) / input_std

    # Perform the actual detection by running the model with the image as input
    interpreter.set_tensor(input_details[0]['index'],input_data)
    interpreter.invoke()

    # Retrieve detection results
    boxes = interpreter.get_tensor(output_details[0]['index'])[0] # Bounding box coordinates of detected objects
    classes = interpreter.get_tensor(output_details[1]['index'])[0] # Class index of detected objects
    scores = interpreter.get_tensor(output_details[2]['index'])[0] # Confidence of detected objects
    #num = interpreter.get_tensor(output_details[3]['index'])[0]  # Total number of detected objects (inaccurate and not needed)

    # Loop over all detections and draw detection box if confidence is above minimum threshold
    for i in range(len(scores)):
        if ((scores[i] > min_conf_threshold) and (scores[i] <= 1.0)):

            # Get bounding box coordinates and draw box
            # Interpreter can return coordinates that are outside of image dimensions, need to force them to be within image using max() and min()
            ymin = int(max(1,(boxes[i][0] * imH)))
            xmin = int(max(1,(boxes[i][1] * imW)))
            ymax = int(min(imH,(boxes[i][2] * imH)))
            xmax = int(min(imW,(boxes[i][3] * imW)))

            cv2.rectangle(frame, (xmin,ymin), (xmax,ymax), (10, 255, 0), 2)

            # Draw label
            object_name = labels[int(classes[i])] # Look up object name from "labels" array using class index
            label = '%s: %d%%' % (object_name, int(scores[i]*100)) # Example: 'person: 72%'
            labelSize, baseLine = cv2.getTextSize(label, cv2.FONT_HERSHEY_SIMPLEX, 0.7, 2) # Get font size
            label_ymin = max(ymin, labelSize[1] + 10) # Make sure not to draw label too close to top of window
            cv2.rectangle(frame, (xmin, label_ymin-labelSize[1]-10), (xmin+labelSize[0], label_ymin+baseLine-10), (255, 255, 255), cv2.FILLED) # Draw white box to put label text in
            cv2.putText(frame, label, (xmin, label_ymin-7), cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 0, 0), 2) # Draw label text

            # Draw circle in center
            xcenter = xmin + (int(round((xmax - xmin) / 2)))
            ycenter = ymin + (int(round((ymax - ymin) / 2)))
            cv2.circle(frame, (xcenter, ycenter), 5, (0,0,255), thickness=-1)

            # Print info
            print('Object ' + str(i) + ': ' + object_name + ' at (' + str(xcenter) + ', ' + str(ycenter) + ')')

    # Draw framerate in corner of frame
    cv2.putText(frame,'FPS: {0:.2f}'.format(frame_rate_calc),(30,50),cv2.FONT_HERSHEY_SIMPLEX,1,(255,255,0),2,cv2.LINE_AA)

    # All the results have been drawn on the frame, so it's time to display it.
    cv2.imshow('Object detector', frame)

    # Calculate framerate
    t2 = cv2.getTickCount()
    time1 = (t2-t1)/freq
    frame_rate_calc= 1/time1

    # Press 'q' to quit
    if cv2.waitKey(1) == ord('q'):
        break

# Clean up
cv2.destroyAllWindows()
videostream.stop()
```

Save it as TFLite_detection_webcam.py in the ~/Projects/Python/tflite/object_detection folder. In a terminal window, activate the tflite-env virtual environment (if it’s not already activated) and run the program, setting the modeldir parameter to the location of your .tflite and labelmap files.

```bash
cd ~/Projects/Python/tflite
source tflite-env/bin/activate
cd object_detection
python TFLite_detection_webcam.py --modeldir=coco_ssd_mobilenet_v1
```
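The program also accepts the optional flags defined in its argument parser: --graph, --labels, --threshold (default 0.5), --resolution (default 1280x720), and --edgetpu. For example, python TFLite_detection_webcam.py --modeldir=coco_ssd_mobilenet_v1 --resolution=640x480 --threshold=0.6 requests 640x480 frames and only draws detections above 60% confidence; as the parser’s help text warns, errors may occur if the webcam does not support the resolution you enter. To see how those strings are interpreted without starting the camera, the sketch below copies the relevant part of the parser into a hypothetical parse_settings() function:

```python
import argparse


def parse_settings(argv=None):
    """Parse the same flags as the detection script and convert them the same way."""
    parser = argparse.ArgumentParser()
    parser.add_argument('--modeldir', required=True)
    parser.add_argument('--graph', default='detect.tflite')
    parser.add_argument('--labels', default='labelmap.txt')
    parser.add_argument('--threshold', default=0.5)
    parser.add_argument('--resolution', default='1280x720')
    parser.add_argument('--edgetpu', action='store_true')
    args = parser.parse_args(argv)
    # The script converts these strings itself rather than using argparse's type=
    res_w, res_h = args.resolution.split('x')
    return args.modeldir, float(args.threshold), (int(res_w), int(res_h)), args.edgetpu


if __name__ == "__main__":
    print(parse_settings(['--modeldir=coco_ssd_mobilenet_v1', '--resolution=640x480', '--threshold=0.6']))
```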

After a moment, you should see a new window pop up showing the feed from the Pi camera or webcam. If the image looks a little blurry, you can carefully rotate the lens with a pair of needle-nose pliers to adjust the focus.

Try pointing the camera at various objects to see if the program can recognize them!

Note that the model is not always accurate. It generally works better when objects look similar to those in the original COCO images and appear under similar lighting conditions. If you are training your own model, you can generally control those aspects to make the model more accurate.

Rather than training from scratch, you can also employ transfer learning where certain variables or layers of a pre-trained model are tweaked to recognize objects from your own dataset. This works best if objects are close to the ones in the original training dataset. We will cover transfer learning in a future tutorial.

Resources and Going Further

I hope this has helped you get started with object detection for your own projects! To assist you, here are a few extra resources that might be worth looking at:

- What is Object Detection?

- EdjeElectronics’ TensorFlow Lite Object Detection on Android and Raspberry Pi GitHub repository

- MobileNet research paper

- COCO dataset research paper
