How To Prevent Overfitting in Machine Learning

2023-11-20 | By Maker.io Staff

Overfitting is a significant challenge when developing an ML application, as it leads to poor generalization and decreased performance on unseen data. This article investigates some techniques that mitigate overfitting and help build models that generalize better.

The Problem of Overfitting Summarized

Overfitting occurs when an ML model follows specific patterns in the training data too closely, even though these patterns have no real-world significance. The result is poor generalization and poor performance on unseen data. No real-world dataset will be perfectly separable, so a good model should cope with the fact that it will incorrectly predict some samples. Developers can detect overfitting during evaluation. A telltale sign is a significant difference between the model's performance on the training data and its performance on a separate validation or test dataset.
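The check described above can be sketched in a few lines of Python. The function names and the 0.1 threshold are illustrative assumptions rather than established values, and the scores are made-up numbers. An acceptable gap depends on the metric and the application.

```python
def generalization_gap(train_score: float, val_score: float) -> float:
    """Return how much better the model scores on training data than on validation data."""
    return train_score - val_score


def looks_overfit(train_score: float, val_score: float, max_gap: float = 0.1) -> bool:
    """Flag a model whose training score exceeds its validation score by more than max_gap.

    max_gap is a hypothetical threshold chosen for illustration only.
    """
    return generalization_gap(train_score, val_score) > max_gap


# Made-up accuracy values for two imaginary models.
print(looks_overfit(0.99, 0.72))  # large gap between training and validation
print(looks_overfit(0.91, 0.89))  # both scores are close together
```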

Evaluating Different Algorithms

Some algorithms are more prone to overfitting than others. Complex algorithms that capture intricate relationships in the data are more likely to overfit. Examples of such algorithms include deep neural networks and decision trees. Decision trees, in particular, will practically always overfit to the training data when left unconstrained, as they can always add another decision branch to classify outliers during training. The resulting model fits the training samples closely but likely exhibits poor performance when classifying real-world instances. Therefore, evaluating several candidate models and comparing their results can help tackle overfitting in the early stages of development. As a general rule, simpler models with fewer tweakable parameters are less likely to capture noise in the data.
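The following toy comparison illustrates the idea. It is only a sketch: the hand-made data contains two deliberately mislabeled training samples, the "memorizer" is a 1-nearest-neighbor lookup standing in for any model flexible enough to fit every training sample (it is not a decision tree), and the threshold of 5 in the simple rule is assumed to be known.

```python
# Hand-made 1D data for illustration: the true label is 1 when x >= 5.
# The samples (3, 1) and (7, 0) are deliberately mislabeled noise.
TRAIN = [(1, 0), (2, 0), (3, 1), (4, 0), (6, 1), (7, 0), (8, 1), (9, 1)]
TEST = [(0.5, 0), (1.5, 0), (2.8, 0), (3.2, 0), (5.5, 1), (6.8, 1), (7.2, 1), (9.5, 1)]


def accuracy(predict, data):
    """Return the fraction of samples in data that predict labels correctly."""
    return sum(predict(x) == label for x, label in data) / len(data)


def memorizer(x):
    """Flexible model: copy the label of the closest training sample (1-nearest neighbor)."""
    _, nearest_label = min(TRAIN, key=lambda sample: abs(sample[0] - x))
    return nearest_label


def simple_rule(x):
    """Simple model: a single threshold that ignores individual outliers."""
    return 1 if x >= 5 else 0


for name, model in [("memorizer", memorizer), ("simple rule", simple_rule)]:
    print(name, "train:", accuracy(model, TRAIN), "test:", accuracy(model, TEST))
```

On this toy data, the memorizer reproduces every training label, including the noisy ones, and then repeats those mistakes on nearby test samples. The simple rule misclassifies the two noisy training samples but handles the test samples correctly.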

Using Cross-Validation to Tackle Overfitting

Holdout involves dividing the dataset into two distinct sets: a training set and a validation set. Model development occurs using the training set, followed by evaluating the model's performance on the validation set. The allocation of instances between these subsets is generally random, resulting in varying outcomes based on how instances are assigned to each group.
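A minimal sketch of such a random holdout split, using only the Python standard library, could look as follows. The function name, the 25% validation fraction, and the seed values are assumptions made for this example.

```python
import random


def holdout_split(samples, validation_fraction=0.25, seed=None):
    """Shuffle the samples and split them into a training list and a validation list."""
    rng = random.Random(seed)
    shuffled = list(samples)  # copy so the caller's data stays untouched
    rng.shuffle(shuffled)
    n_val = int(len(shuffled) * validation_fraction)
    return shuffled[n_val:], shuffled[:n_val]


data = list(range(20))
train_a, val_a = holdout_split(data, seed=1)
train_b, val_b = holdout_split(data, seed=2)

# Different seeds generally assign different samples to the validation set,
# which is why a single holdout score can vary from split to split.
print(sorted(val_a))
print(sorted(val_b))
```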

To mitigate the variance in accuracy caused by different dataset splits, developers can utilize k-fold cross-validation (CV). This technique segments the dataset into k equally sized parts. The model is then trained and assessed k times using these subsets. For instance, if k is twelve, the original dataset is divided into twelve subsets of equal size. The model is trained twelve times, each time using eleven of these subsets for training, while the remaining subset is reserved for testing to measure performance. This ensures that each subset is used exactly once for testing. The final evaluation of the model's performance is based on the average performance across all twelve test subsets.
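The splitting scheme can be sketched in plain Python as shown below. The helper names are hypothetical, and `train_and_score` stands for any user-supplied function that trains a model on the training indices and returns its score on the test indices. The sketch splits the data in its given order, so shuffling the samples first is usually advisable.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) pairs, one pair per fold."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so fold sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size


def cross_validate(n_samples, k, train_and_score):
    """Average the score returned by train_and_score over all k folds."""
    scores = [train_and_score(train, test) for train, test in k_fold_indices(n_samples, k)]
    return sum(scores) / len(scores)


# With 24 samples and k = 12, each fold tests on 2 samples and trains on the other 22.
for train, test in k_fold_indices(24, 12):
    print(len(train), test)
```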

This image compares how holdout and 4-fold CV split the same dataset into different subsets for training and testing an ML model.

Cross-validation helps address overfitting by providing a more accurate estimate of the model's performance on unseen data and reducing the risk of models performing well only by chance.

Early Stopping

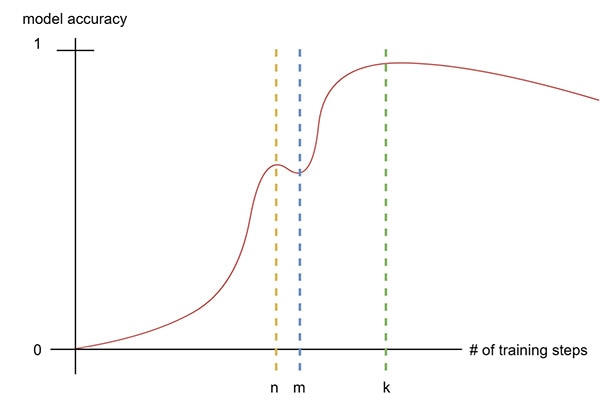

Developers can stop iterative training procedures early, such as gradient descent or the growth of a decision tree. Doing so can prevent the model from capturing intricate noise in the data that doesn't carry meaningful information. Yet, ending the process too soon may lead to suboptimal performance due to an overly generalized model. To tackle this challenge, developers must determine the optimal stopping point by monitoring the model's performance on a validation set. Training should conclude when the model's validation performance begins to deteriorate. However, it's essential to approach this technique cautiously. The validation performance could plateau at a local maximum, and halting there might prevent the model from reaching its global optimum.

This graph illustrates how stopping after n training steps would cut off the training process too early, as n doesn’t represent the globally best performance the model can achieve. If the training continued, the model would eventually reach its ideal performance after k steps, which is the appropriate point to end the training process.

Choosing when to end the training process is not trivial and often depends on previous experience and trying various cut-off points. In addition, developers can apply several heuristics, such as only stopping when the performance degrades over multiple subsequent steps or when the degradation between steps exceeds a specific threshold value.
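Both heuristics can be sketched as a small function that scans a list of validation scores, where higher is better. The parameter names `patience` and `max_drop` are assumptions for this example, and the scores are made-up values.

```python
def early_stopping_step(val_scores, patience=3, max_drop=None):
    """Return the index of the best step seen before training would be stopped.

    Training stops after `patience` consecutive steps without a new best score,
    or as soon as the score falls by more than `max_drop` between two steps.
    """
    best_score = float("-inf")
    best_step = -1
    bad_steps = 0
    previous = None
    for step, score in enumerate(val_scores):
        sudden_drop = (
            max_drop is not None and previous is not None and previous - score > max_drop
        )
        previous = score
        if score > best_score:
            best_score, best_step, bad_steps = score, step, 0
        else:
            bad_steps += 1
        if bad_steps >= patience or sudden_drop:
            break
    return best_step


# Made-up validation scores with a small dip at step 3 and a late peak at step 9.
scores = [0.60, 0.70, 0.75, 0.74, 0.76, 0.80, 0.79, 0.78, 0.77, 0.90]
print(early_stopping_step(scores, patience=1))   # stops at the first dip
print(early_stopping_step(scores, patience=3))   # tolerates the dip, stops later
print(early_stopping_step(scores, patience=10))  # never triggers, sees the late peak
```

A small `patience` value reacts to the first dip and keeps an early step, while larger values tolerate short dips at the cost of training longer. In practice, developers typically also keep a copy of the model from the best step so that they can restore it after stopping.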

Utilizing Ensemble Learning Methods

Finally, ensemble learning can be another effective way to tackle ML model overfitting. Ensemble methods don't use a single model to make predictions or perform regression. Instead, they employ multiple models, which can all be of the same type or of different types. Random forests are a so-called homogeneous ensemble method, utilizing only one type of classifier (multiple decision trees). In contrast, heterogeneous ensembles employ multiple different algorithms, which may run in parallel or in sequence. The ensemble combines the individual results using techniques such as majority voting (classification) or calculating the average (regression).
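The two combination techniques can be sketched with the Python standard library. The function names and the example predictions are made up for illustration. Ties in the vote resolve in favor of the label that appears first in the list.

```python
from collections import Counter
from statistics import mean


def majority_vote(predictions):
    """Classification: return the label predicted by the most ensemble members."""
    return Counter(predictions).most_common(1)[0][0]


def average_prediction(predictions):
    """Regression: return the mean of the individual predictions."""
    return mean(predictions)


# Hypothetical outputs of three ensemble members for a single sample.
print(majority_vote(["cat", "dog", "cat"]))
print(average_prediction([2.0, 3.0, 4.0]))
```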

Random forests, for example, have the benefit that they train each decision tree on a random subset of the training data. While each tree may overfit to its particular subset and thus perform poorly on some samples, the combined result of the ensemble is less prone to overfitting, as not all trees will perform poorly on the same samples. The trees perform well on average, given the forest is sufficiently large.
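One common way to create such random subsets is bootstrap sampling, which draws samples with replacement so that every subset has the same size as the original data. The sketch below shows only this sampling step, not tree training, and its function name and seed are assumptions for this example.

```python
import random


def bootstrap_subsets(samples, n_models, seed=0):
    """Draw one random subset per model by sampling with replacement."""
    rng = random.Random(seed)
    return [[rng.choice(samples) for _ in samples] for _ in range(n_models)]


data = list(range(10))
subsets = bootstrap_subsets(data, n_models=5)

# Each subset repeats some samples and leaves others out, so every tree
# in the forest sees a slightly different view of the training data.
for subset in subsets:
    print(sorted(subset))
```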

Summary

Developers can utilize various tricks and methods to make their ML models less prone to overfitting, and this article summarized a few of the most common ones. Developers can compare different algorithms and choose the one that gives them the best results, assuming their use case allows them to do so.

K-fold cross-validation divides the dataset into k subsets and trains the model on all but one of them while using the remaining subset for validation. This process repeats until each subset has served as the validation set once, and the average performance is calculated. The result is a more accurate assessment of the model's performance, as it reduces the likelihood that the model performed well solely by chance.

Sometimes, prematurely stopping training prevents sequential algorithms from adding branches that only capture noise in the data. However, developers must pay attention when choosing the cut-off point, as ending too early can prevent the model from reaching its full potential. Applying several heuristics can help choose an appropriate stopping point.

Finally, ensembles can compensate for overfitting by taking the average result of multiple sub-models, some of which may perform poorly on some samples. This technique relies on the fact that not all models in the ensemble perform poorly in all instances they observe.
