Heterogeneous Processing Enables Machine Vision Applications

Contributed By DigiKey's European Editors

2017-10-05

Industrial imaging and machine vision applications are becoming more commonplace in industrial control as levels of automation increase to support the drive for increased productivity. In order to deliver on the promise of improved efficiency, image processing systems need to operate at high speed to ensure the takt time, the average time between starting the production of each new unit, is kept as short as possible.

At the same time, high-resolution cameras have emerged on the market that are capable of high-definition recording and can support the imaging of extremely fine detail on components on the production line. They are able to deliver these high quality images at high speed thanks to the use of gigabit Ethernet networks. As a result, high performance from the image processing software is critical.

This suggests implementation of the key algorithms in hardware, but flexibility in industrial image processing is crucial. Different types of components and assemblies require specific attention to certain visual features. Inspection of PCBs following assembly will often look for breaks and pinching in solder tracks that indicate possible sources of failure. Inspection of an engine manifold may look for misaligned or missing fasteners. Although these tasks may use common modules, such as edge detection and pattern matching to identify areas of interest, each demands its own set of image processing routines to perform the complete inspection task.

Flexibility will become even more important when image processing subsystems are called upon to assist with setting up automation. Image processing is becoming necessary not just for inspection, but to help position robotic systems and train them. Whereas robots would traditionally be programmed using carefully calculated motion profiles, they are now beginning to use cameras and objects within the visual field to align themselves and determine which components they need to pick up and work on. To run such imaging applications, developers need a toolkit of functions that can easily be assembled on a platform with the performance needed to run the algorithms in real time.

A visual toolkit that provides a set of basic functions for image processing of many forms, including industrial, is available in the form of the OpenCV library. Now amounting to some millions of lines of code, the open-source OpenCV library has quickly become a popular choice for image processing in many sectors. By 2016, the SourceForge repository recorded more than 13 million downloads of the library.

OpenCV covers many of the functions required for image processing and machine vision applications, from basic pixel-level manipulation such as thresholding through feature extraction to object recognition based on machine learning techniques such as deep learning. Its functions are available from languages such as C/C++, Java and Python.

Accessed from C++, OpenCV provides its services using classes and functions in the “cv” namespace. For example, to retrieve a stored image and display it, the developer first creates a suitable image array by creating an object based on the cv::Mat class. The function cv::imread() is used in an assignment to insert the image data into the array declared using cv::Mat:

cv::Mat image(height, width, pixel_type);

image = cv::imread("image.bmp");

A simple operation to mirror the image can be implemented by declaring a new Mat object – “mirrored”, for example – and issuing the command cv::flip(image, mirrored, 1). The integer used in the third position determines the axis around which the image is flipped.
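To make the effect of the flip code concrete, the following is a minimal sketch of what a left-to-right mirror does to a single-channel, row-major pixel buffer. In OpenCV a positive flip code such as 1 flips around the vertical axis, 0 flips around the horizontal axis, and a negative value flips around both. The helper name mirror_horizontal and the plain std::vector image layout are assumptions made for illustration; they are not part of the OpenCV API.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper, not part of OpenCV: mirrors a single-channel,
// row-major image left to right (around the vertical axis), which is
// the effect a positive flip code has in cv::flip.
std::vector<std::uint8_t> mirror_horizontal(const std::vector<std::uint8_t>& src,
                                            std::size_t width,
                                            std::size_t height) {
    std::vector<std::uint8_t> dst(src);
    for (std::size_t row = 0; row < height; ++row) {
        // Reverse the pixels of one row in place; rows keep their order.
        auto first = dst.begin() + static_cast<std::ptrdiff_t>(row * width);
        std::reverse(first, first + static_cast<std::ptrdiff_t>(width));
    }
    return dst;
}
```

Applied to a 3 x 2 image, each row is reversed independently while the order of the rows is unchanged.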

The Mat array provides low-level pixel-based access to the image data to support algorithms that scan the area to look for attributes, such as minimum or maximum values, and to support direct manipulation of the data. But the library also implements higher level filtering functions useful in machine vision, such as edge detection filters. For example, the cv::Sobel and cv::Laplacian calls apply a filter to an image that detects sudden changes in pixel brightness by convolving the image data with a 3 x 3 or larger matrix. Execution of the filter on the source array produces an array that contains likely edges. Larger kernels are useful for suppressing high-frequency noise and yield actual edges with higher confidence. Edge detectors that incorporate thresholding, such as the Canny operator (cv::Canny), can be used to improve the overall quality of results.
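The idea behind a 3 x 3 edge filter can be sketched without the library. The code below applies the horizontal Sobel kernel as a weighted sum over each 3 x 3 neighbourhood of a greyscale image and then marks pixels whose gradient magnitude reaches a threshold. The function names sobel_x and mark_edges, the int pixel type and the zeroed border are assumptions for illustration; cv::Sobel itself offers more options, including configurable border handling.

```cpp
#include <cstddef>
#include <cstdlib>
#include <vector>

// Hypothetical helper: horizontal-gradient Sobel filter on a row-major
// greyscale image. Border pixels are left at zero for simplicity.
std::vector<int> sobel_x(const std::vector<int>& img,
                         std::size_t width, std::size_t height) {
    static const int kernel[3][3] = {{-1, 0, 1}, {-2, 0, 2}, {-1, 0, 1}};
    std::vector<int> out(img.size(), 0);
    if (width < 3 || height < 3) return out;
    for (std::size_t y = 1; y + 1 < height; ++y) {
        for (std::size_t x = 1; x + 1 < width; ++x) {
            int sum = 0;
            // Weighted sum over the 3 x 3 neighbourhood centred on (x, y).
            for (std::size_t ky = 0; ky < 3; ++ky)
                for (std::size_t kx = 0; kx < 3; ++kx)
                    sum += kernel[ky][kx] *
                           img[(y + ky - 1) * width + (x + kx - 1)];
            out[y * width + x] = sum;
        }
    }
    return out;
}

// Hypothetical helper: marks a pixel as a likely edge when the absolute
// gradient reaches the given threshold.
std::vector<bool> mark_edges(const std::vector<int>& gradient, int threshold) {
    std::vector<bool> edges(gradient.size(), false);
    for (std::size_t i = 0; i < gradient.size(); ++i)
        edges[i] = std::abs(gradient[i]) >= threshold;
    return edges;
}
```

A vertical step in brightness produces a strong response in the columns either side of the step, whereas a uniform region produces zero.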

Lines detected in the image can be extracted using functions such as cv::HoughLinesP and used to support algorithms that operate on shapes found in the source image. These can be used to determine whether objects expected to be in the image, such as fasteners on an engine casing, are present.

Although OpenCV was designed for compute efficiency, the amount of data streaming from high-resolution cameras can challenge pure software implementations, even those running on high performance multicore SoCs. OpenCV supports implementation of its functions not only on general-purpose processors but also on graphics processing units (GPUs) and even custom hardware. One way to preserve the flexibility of software-based control, yet take advantage of the performance achievable under a hardware implementation, is to employ programmable logic. The Xilinx Zynq-7000 provides a convenient way to access multicore CPUs and programmable logic in a single SoC.

The Zynq-7000 platform combines one or two ARM® Cortex®-A9 processors with the flexible hardware of an embedded field programmable gate array (FPGA) in addition to peripherals that support high-speed I/O. Zynq-7000 devices support two forms of FPGA fabric: one based on the Xilinx Artix-7 architecture and the other on the high-performance Kintex-7 fabric. All are implemented on a 28 nm CMOS process for high logic and memory density in a cost effective form.

As well as flexible programmable logic based on the lookup table concept, both Artix and Kintex fabrics provide digital signal processing (DSP) resources designed for the efficient processing of filters and convolutions. Using a slice architecture, the DSP resource is tuned to the device, with Kintex providing a higher ratio of DSP engines to bit-level logic than Artix. More than 2000 interconnect lines connect the processor complex to the programmable fabric, helping to support the high bandwidths needed to transfer image data between processing modules.

Designers working with the Zynq-7000 platform can use the high-level synthesis (HLS) technology in the Vivado Design Suite to compile algorithms into programmable hardware. Conventional FPGA development is built around hardware description languages (HDLs) such as Verilog and VHDL. Although these HDLs share syntactic similarities with C and Ada, respectively, they operate at a lower level of detail than most software developers are comfortable with. Verilog and VHDL are based around bit-level manipulation and combinatorial logic. HLS technology provides the ability to convert algorithms expressed in languages such as C++ into hardware.

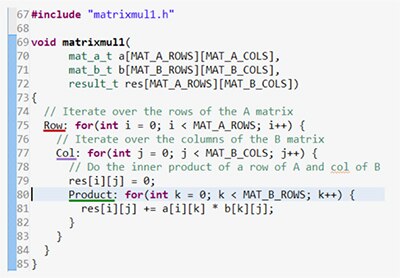

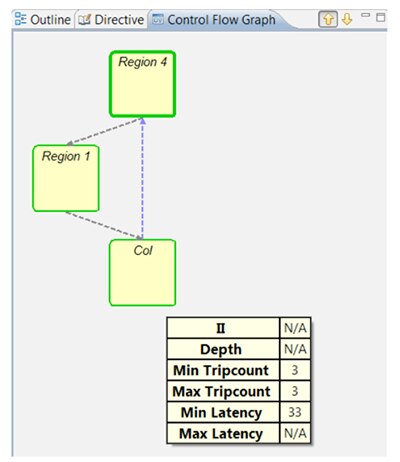

HLS leverages the object-oriented basis of C++ to let programmers identify opportunities for concurrency and low-level scheduling in their code, and to provide direction to the synthesis engine on how to generate hardware from the source code. For example, a programmer creating a nested matrix-multiply operation intended for deployment on the FPGA fabric can identify processing modules for each loop that can be allocated to hardware with their associated scheduling in the Vivado HLS implementation tools. In Figure 1, the Row, Col and Product identifiers indicate output blocks. Figure 2 shows the expansion of the Row block and the Col block it contains, as well as two regions used to move data between the blocks. The control flow loop shows the latency of the synthesized operation.

Figure 1: Nested loop code suitable for FPGA implementation.

Figure 2: Control flow graph for portion of nested loop viewed in Vivado.
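As a rough illustration of the kind of code Figure 1 refers to, and not a reproduction of it, the sketch below writes a matrix multiply as three nested loops labelled Row, Col and Product, so that each loop can be identified by name. The matrix dimension N, the Matrix alias and the function name are assumptions. The comment inside the innermost loop indicates where a Vivado HLS directive could be placed; it is left as a comment so that the code builds with any standard C++ compiler, which may warn that the labels are unused.

```cpp
#include <array>
#include <cstddef>

constexpr std::size_t N = 3;  // assumed matrix dimension for this sketch
using Matrix = std::array<std::array<int, N>, N>;

// Nested matrix multiply with one label per loop. The labels have no
// effect on the computed result; they only give each loop a name.
void matrix_multiply(const Matrix& a, const Matrix& b, Matrix& result) {
Row:
    for (std::size_t i = 0; i < N; ++i) {
    Col:
        for (std::size_t j = 0; j < N; ++j) {
            int acc = 0;
        Product:
            for (std::size_t k = 0; k < N; ++k) {
                // A directive such as "#pragma HLS UNROLL" here would ask
                // the tool to replicate the multiply-accumulate hardware.
                acc += a[i][k] * b[k][j];
            }
            result[i][j] = acc;
        }
    }
}
```

Each iteration of the Product loop is an independent multiply followed by an accumulation, which is why it maps naturally onto DSP resources.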

To accelerate performance, the designer may unroll loops to allow more functions to take place in parallel, provided sufficient DSP nodes and lookup table hardware are available. For work in image and video processing, the Vivado environment includes a number of libraries that provide direct support.

The HLS imaging libraries include functions that have very close matches with the OpenCV imgproc module in particular. For example, whereas OpenCV in software would use a cv::Mat declaration to create the array needed to hold an image in memory, there is a corresponding template class, called hls::Mat<> that provides the same core functionality. The equivalent call declaring an array called image would appear as:

hls::Mat<height, width, pixel_type> image;

Rather than read an image from disk, it will typically be passed from the processor complex into the FPGA fabric using the hls::stream<> datatype. There is a key difference between processing images in processor space and in the FPGA. The hardware fabric is programmed on the assumption that there will be a pipeline of accelerated operations that pass through the logic elements in the form of a stream of pixels. As a result, algorithms that rely on random access to image data need to be converted into a form that can act on linear streams.
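One way to picture the conversion from random access to streaming is a sliding window. Instead of indexing neighbouring pixels in an array, the sketch below reads one pixel per iteration from a stream and keeps only the last three in a small shift register, which is enough to compute a horizontal difference. Here std::queue is only a stand-in for a hardware stream such as hls::stream<> so that the code compiles with a standard toolchain; the PixelStream alias and the function name are assumptions.

```cpp
#include <cstddef>
#include <queue>

// Stand-in for a hardware pixel stream (assumption for this sketch).
using PixelStream = std::queue<int>;

// Computes p[x+1] - p[x-1] for a single row of pixels arriving in order.
// Only the three most recent pixels are stored, so no random access to
// the image is needed.
void horizontal_gradient_stream(PixelStream& in, PixelStream& out) {
    int window[3] = {0, 0, 0};
    std::size_t seen = 0;
    while (!in.empty()) {
        // Shift the window by one pixel and append the new sample.
        window[0] = window[1];
        window[1] = window[2];
        window[2] = in.front();
        in.pop();
        ++seen;
        // An output is valid once the window holds three real pixels.
        if (seen >= 3) out.push(window[2] - window[0]);
    }
}
```

Extending the same idea to 3 x 3 filters requires buffering two full image lines in addition to the window, which is the usual price of streaming a two-dimensional neighbourhood.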

Streaming access also implies that if an image is needed by two hardware-mapped functions, it needs to be duplicated. This is typically performed using the hls::Duplicate function. A further difference lies in the treatment of floating point values. OpenCV natively supports floating-point arithmetic, but this is often inefficient on the DSP resources of a typical FPGA. It makes sense to cast all datatypes to fixed-point equivalents and then use scaling conversion once the data is passed back to software routines to maintain compatibility with core OpenCV functions.
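The fixed-point conversion and the scaling back to floating point can be sketched with ordinary integers. The example assumes a signed 16-bit format with 8 fractional bits; that format, the constant names and the helper functions are illustrative choices and are not taken from the HLS libraries, which provide their own fixed-point types.

```cpp
#include <cmath>
#include <cstdint>

constexpr int kFractionBits = 8;  // assumed format: 8 fractional bits
constexpr float kScale = static_cast<float>(1 << kFractionBits);  // 256

// Converts a floating-point value to fixed point by scaling and rounding.
std::int16_t to_fixed(float value) {
    return static_cast<std::int16_t>(std::lround(value * kScale));
}

// Scales a fixed-point value back to floating point for software routines.
float to_float(std::int16_t fixed) {
    return static_cast<float>(fixed) / kScale;
}

// Multiplies two fixed-point values. The product carries twice the
// fractional bits, so it is widened first and then shifted back down.
// Right-shifting a negative value is arithmetic on common compilers.
std::int16_t fixed_multiply(std::int16_t a, std::int16_t b) {
    const std::int32_t wide = static_cast<std::int32_t>(a) * b;
    return static_cast<std::int16_t>(wide >> kFractionBits);
}
```

With 8 fractional bits the resolution is 1/256, so the choice of format is a trade-off between the range and the precision that the image data needs.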

The applicability of hardware to a function may determine the partitioning of an application or subsystem. One of the reference designs for OpenCV-compatible processing on the Zynq-7000 platform is one that implements corner detection and the creation of an overlay showing all the corners found in the image. Its structure is shown in Figure 3.

Figure 3: Dataflow structure of the fast corners application example.

As with many machine vision applications, the implementation takes the form of a pipeline. The first function is a greyscale conversion, which can be approximated through the extraction of the green channel from an RGB source. This is followed by corner detection and then a module to draw the corners on the image.

The issue for the application in terms of hardware usage is that the drawing of the overlay in the target image requires random access to the image array, which is not readily supported by a streaming-oriented hardware implementation. The approach that a designer can take is to partition the functions between FPGA and the processor complex. The green channel extraction and corner detection convolution are readily implementable in hardware. The corner drawing functions, which are less computationally intensive, can be performed on the image once it has been streamed back into processor memory across the Zynq-7000’s AXI4 interface.
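The difference between the two kinds of stage can be seen in a small sketch. Green-channel extraction touches each pixel exactly once and in order, so it suits a streaming implementation, whereas drawing markers writes to arbitrary coordinates. The Rgb struct, the function names and the use of a single red pixel as the corner marker are assumptions for illustration and do not come from the reference design.

```cpp
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

struct Rgb {
    std::uint8_t r, g, b;
};

// Streaming-friendly stage: each output depends only on the current
// input pixel, so pixels can be processed in arrival order.
std::vector<std::uint8_t> extract_green(const std::vector<Rgb>& pixels) {
    std::vector<std::uint8_t> grey;
    grey.reserve(pixels.size());
    for (const Rgb& p : pixels) grey.push_back(p.g);
    return grey;
}

// Random-access stage: writes a marker at each (x, y) corner position,
// in whatever order the corners are listed. Out-of-range corners are
// ignored.
void draw_corners(std::vector<Rgb>& image, std::size_t width,
                  const std::vector<std::pair<std::size_t, std::size_t>>& corners) {
    if (width == 0) return;
    const std::size_t height = image.size() / width;
    for (const auto& c : corners) {
        if (c.first < width && c.second < height)
            image[c.second * width + c.first] = Rgb{255, 0, 0};
    }
}
```

In a partitioned design along these lines, the first function is a candidate for the fabric and the second would run on the processor once the image is back in memory.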

More sophisticated image processing systems that involve a number of cooperating modules that perform extraction, recognition and other tasks may involve an architecture that is more complex than a fixed latency pipeline. For example, different situations may call for more intensive processing of the image. Exceptions may trigger deeper analysis of parts of the data. These considerations complicate scheduling. The simplest option will be to run the discretionary functions on the processor complex, but if some of the functions are computationally intensive, there is the risk that overall system performance will suffer and the control routines will miss key deadlines. Areas of the FPGA could be reserved for the use of computationally intensive functions, but unless large sections are set aside there is no guarantee that resource will be available under certain conditions. What is needed is a dynamic way to allocate functions between the processor complex and other accelerators such as those able to run on the FPGA fabric.

The Embedded Multicore Building Blocks (EMB2) library provides the support needed for dynamically scheduled heterogeneous systems. Available from GitHub, the EMB2 library provides a set of design patterns for a wide range of embedded real-time applications that need high performance computing support implemented on CPUs, GPUs and programmable hardware, together with C++ wrappers to support environments such as OpenCV.

The EMB2 library conforms to the multicore task management application programming interface (MTAPI), defined by the Multicore Association to ensure high portability across heterogeneous compute platforms. The MTAPI architecture organizes workloads into a group of tasks and queues, with the latter used to implement pipelines of processing through which data elements stream. Tasks provide the organization needed to synchronize multiple work packages with each other.

A key aspect of EMB2 and MTAPI is that they support the notion of being able to use different implementations for the same task. If the scheduler, which forms part of the library, determines a resource is available in the hardware fabric, it will run the version of the code able to instantiate the necessary logic and allow data to be streamed in and out of it. If there is greater headroom on the processor, then a software-only version can be marked ready to run. The developer simply needs to ensure that both forms are available for the scheduler to be able to call. The result is a flexible execution environment for high performance applications and one that fits well with the OpenCV architecture.
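The principle of one task with several interchangeable implementations can be illustrated in plain C++. The sketch below is not the EMB2 or MTAPI API: the Target, Action and Job types are hypothetical, and the scheduling decision is reduced to a single flag that says whether the fabric is free. A real scheduler would base that decision on the state of the system.

```cpp
#include <functional>
#include <stdexcept>
#include <utility>
#include <vector>

// Hypothetical types for illustration only; not the EMB2 or MTAPI API.
enum class Target { Cpu, Fpga };

struct Action {
    Target target;                 // where this implementation runs
    std::function<int(int)> run;   // the implementation itself
};

class Job {
public:
    void add_action(Action action) { actions_.push_back(std::move(action)); }

    // Prefers an FPGA implementation when the fabric is available and
    // otherwise falls back to a CPU implementation. The target that was
    // actually used is reported through 'used'.
    int start(int input, bool fpga_available, Target& used) const {
        const Action* chosen = nullptr;
        for (const Action& a : actions_) {
            if (a.target == Target::Fpga && !fpga_available) continue;
            if (chosen == nullptr || a.target == Target::Fpga) chosen = &a;
        }
        if (chosen == nullptr)
            throw std::runtime_error("no runnable implementation");
        used = chosen->target;
        return chosen->run(input);
    }

private:
    std::vector<Action> actions_;
};
```

Because both implementations share one signature, the calling code does not change when the choice of target does, which is the property the paragraph above describes.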

Conclusion

Thanks to the combination of flexible open-source libraries and a platform that delivers both high-speed microprocessors and programmable hardware, it is now possible to implement highly efficient machine vision applications suitable for the Industrial Internet of Things.

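Disclaimer: The opinions, beliefs, and viewpoints expressed by the various authors and/or forum participants on this website do not necessarily reflect the opinions, beliefs, and viewpoints of DigiKey or official policies of DigiKey.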