How to Run Custom Speech-to-Text (STT) and Text-to-Speech (TTS) Servers

2022-05-16 | By ShawnHymel

License: Attribution Raspberry Pi

In a previous tutorial, we created a custom wake word for Mycroft AI. This time, we demonstrate how to set up speech-to-text (STT) and text-to-speech (TTS) servers on a computer so that Mycroft can perform speech recognition and voice synthesis over your local network.

You can see a walkthrough of this procedure in video form.

Concept

Out of the box, Mycroft AI must communicate with various services across the internet.

By default, Mycroft AI performs wake word detection (which we covered previously) on the device (e.g. Raspberry Pi). Once the wake word is heard, it streams audio to a speech-to-text (STT) service that returns a string of what was uttered.

Mycroft (on device) performs intent analysis, matching the returned string to a particular skill. It then communicates with the Mycroft backend to validate the skill and perform any necessary Internet-based actions (e.g. fetch current weather conditions). This information is sent back to the Mycroft service on the device.

Finally, Mycroft generates an audio response (which we covered in the first tutorial). To create an audio output, Mycroft uses a text-to-speech (TTS) service. By default, this is the Mimic 1 TTS engine that runs on the Raspberry Pi. It’s a little robotic, but it’s fast and gets the job done. You can read more about the supported TTS engines here: https://mycroft-ai.gitbook.io/docs/using-mycroft-ai/customizations/tts-engine.

If you want to run Mycroft completely locally (within your own local network), you need to host STT, TTS, and the Mycroft backend services.

Mycroft is open source, which means you can run the backend (known as “Selene”) from the following project: https://github.com/MycroftAI/selene-backend. However, it’s configured to set up multiple skills with a skill store for many devices. As such, it’s overkill and complicated for our setup, so we will ignore it for now.

In this tutorial, we will focus on running custom TTS and STT servers on a laptop. Mycroft AI (on the Pi) will communicate with these servers to perform text-to-speech and speech-to-text. It’s not a completely offline Mycroft solution, but it gets us one step closer.
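The stages described above always run in the same order. As a rough illustration only, here is a minimal Python sketch of that ordering. The stage functions are hypothetical placeholders passed in by the caller, not Mycroft's actual API. In this tutorial, the `speech_to_text` and `text_to_speech` stages are the ones that move to the laptop.

```python
from typing import Callable, Optional


def run_pipeline(
    audio: bytes,
    detect_wake_word: Callable[[bytes], bool],
    speech_to_text: Callable[[bytes], str],
    handle_utterance: Callable[[str], Optional[str]],
    text_to_speech: Callable[[str], bytes],
) -> Optional[bytes]:
    """Run the (hypothetical) stages in the order described above."""
    # Stage 1, on the device: stop early unless the wake word was heard.
    if not detect_wake_word(audio):
        return None
    # Stage 2, STT service: turn the recorded audio into a text string.
    utterance = speech_to_text(audio)
    # Stage 3, on the device (plus the backend): match the text to a skill,
    # which returns reply text, or None if no skill matched.
    reply = handle_utterance(utterance)
    if reply is None:
        return None
    # Stage 4, TTS service: turn the reply text into audio for playback.
    return text_to_speech(reply)
```

Passing the stages in as functions keeps the sketch honest about what it is: a picture of the data flow (audio in, text in the middle, audio out), not an implementation of any stage.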

Required Hardware

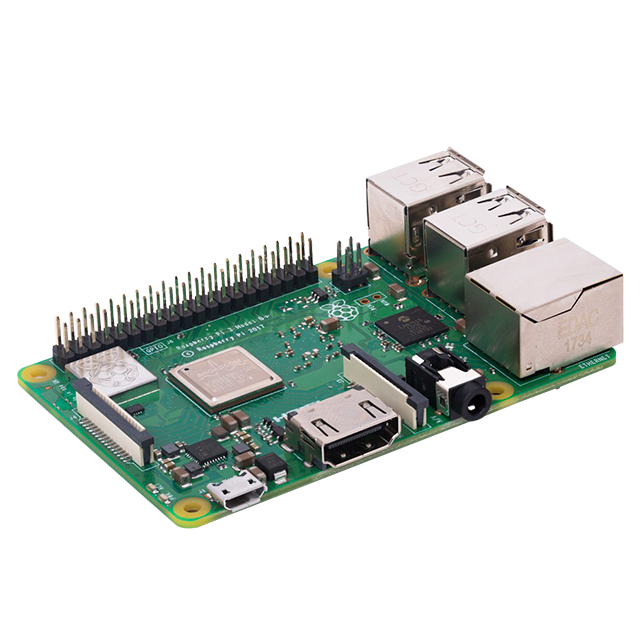

You will need a Raspberry Pi 3B+ or 4B for this tutorial. You will also need a computer capable of running Ubuntu 18.04 (preferably something with a CUDA-enabled graphics card if you want to speed up computation times).

CUDA Installation

If you’d like to use CUDA, check the compatibility chart here to make sure that your graphics card is capable of running CUDA 10.1 (we specifically want this version for our TTS and STT functions). If you do not want (or can’t run) CUDA, just skip this section.

I tested this on Ubuntu 18.04 LTS. Other versions of Ubuntu may or may not work. Ubuntu 18.04 came with Python 3.6.9, which also worked. My laptop has an Nvidia GTX 1060 Mobile graphics card, which worked for this guide.

See here for a guide on installing Ubuntu. You will want to install it on the computer that will act as the server for your TTS and STT services. When you're installing Ubuntu, do NOT select the option to install third-party software! It will likely install versions of the Nvidia drivers that are not compatible with this guide.

When you first boot into Ubuntu, update your software, but do not upgrade to Ubuntu 20.04. If you're using a laptop like me, you'll want to disable sleep on lid close. I also installed an SSH server so I could log in to the laptop from multiple terminals.

To start the CUDA installation process, check your graphics card model (and make sure it has CUDA support in the chart linked above):

lspci | grep -i nvidia

Make sure you have gcc:

gcc --version

Next, install Linux headers:

sudo apt-get install linux-headers-$(uname -r)

Add the Nvidia package repositories:

wget https://developer.download.nvidia.com/compute/cuda/repos/ubuntu1804/x86_64/cuda-repo-ubuntu1804_10.1.243-1_amd64.deb

sudo apt-key adv --fetch-keys https://developer.download.nvidia.com/compute/cuda/repos/ubuntu1804/x86_64/7fa2af80.pub

sudo dpkg -i cuda-repo-ubuntu1804_10.1.243-1_amd64.deb

sudo apt-get update

wget http://developer.download.nvidia.com/compute/machine-learning/repos/ubuntu1804/x86_64/nvidia-machine-learning-repo-ubuntu1804_1.0.0-1_amd64.deb

sudo apt install ./nvidia-machine-learning-repo-ubuntu1804_1.0.0-1_amd64.deb

sudo apt-get update

Then, install the Nvidia driver. Fighting with Nvidia drivers on Linux is the stuff of legends and nightmares. This version worked for my graphics card with CUDA 10.1.

sudo apt-get install -y --no-install-recommends nvidia-driver-430

sudo reboot

Check your driver:

nvidia-smi
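If you'd like to script this check (for example, to re-run it after a driver update), nvidia-smi can print just the GPU name and driver version with `--query-gpu=name,driver_version --format=csv,noheader`. The following is a minimal Python sketch that parses that output. The helper names are illustrative, and it sticks to `subprocess` arguments that also work on the Python 3.6 that ships with Ubuntu 18.04.

```python
import shutil
import subprocess
from typing import List, Tuple


def parse_gpu_query(output: str) -> List[Tuple[str, str]]:
    """Turn 'name, driver_version' CSV lines into (name, driver) tuples."""
    gpus = []
    for line in output.splitlines():
        if not line.strip():
            continue  # skip blank lines
        # rsplit keeps any commas that might appear inside the GPU name.
        name, driver = line.rsplit(",", 1)
        gpus.append((name.strip(), driver.strip()))
    return gpus


def query_gpus() -> List[Tuple[str, str]]:
    """Ask nvidia-smi for each GPU's name and driver version."""
    if shutil.which("nvidia-smi") is None:
        raise RuntimeError("nvidia-smi not found; is the Nvidia driver installed?")
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,driver_version", "--format=csv,noheader"],
        stdout=subprocess.PIPE,
        universal_newlines=True,  # text mode; also works on Python 3.6
        check=True,
    )
    return parse_gpu_query(result.stdout)
```

Calling `query_gpus()` should list your card; compare the reported driver version with the package you just installed.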

Next, install the CUDA libraries (you can read about the CUDA 10.1 installation process here):

sudo apt-get install -y --no-install-recommends cuda-10-1 libcudnn7=7.6.4.38-1+cuda10.1 libcudnn7-dev=7.6.4.38-1+cuda10.1

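Install cuDNN. This step uses the cuDNN v7.6.5 archive for CUDA 10.1 (cudnn-10.1-linux-x64-v7.6.5.32.tgz), which you must first download from NVIDIA's developer website (a free NVIDIA developer account is required). You can read about the cuDNN installation process here: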

tar -xvf cudnn-10.1-linux-x64-v7.6.5.32.tgz

sudo mkdir -p /usr/local/cuda/include

sudo cp cuda/include/cudnn*.h /usr/local/cuda/include

sudo mkdir -p /usr/local/cuda/lib64

sudo cp -P cuda/lib64/libcudnn* /usr/local/cuda/lib64

sudo chmod a+r /usr/local/cuda/include/cudnn*.h /usr/local/cuda/lib64/libcudnn*
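To confirm which cuDNN version ended up in /usr/local/cuda, you can read the version `#define` lines from the header. cuDNN 7 keeps them in cudnn.h (cuDNN 8 moved them to cudnn_version.h), so this sketch assumes the 7.x header copied above. The function names are illustrative.

```python
import re

CUDNN_HEADER = "/usr/local/cuda/include/cudnn.h"


def parse_cudnn_version(header_text: str) -> str:
    """Return 'major.minor.patch' from the #define lines in a cuDNN 7 header."""
    parts = []
    for key in ("CUDNN_MAJOR", "CUDNN_MINOR", "CUDNN_PATCHLEVEL"):
        # Match only the literal define, e.g. '#define CUDNN_MAJOR 7'.
        match = re.search(r"#define\s+" + key + r"\s+(\d+)", header_text)
        if match is None:
            raise ValueError(key + " not found; is this a cuDNN 7 header?")
        parts.append(match.group(1))
    return ".".join(parts)


def installed_cudnn_version(path: str = CUDNN_HEADER) -> str:
    """Read the header copied into /usr/local/cuda and return its version."""
    with open(path) as header:
        return parse_cudnn_version(header.read())
```

With the archive named above, `installed_cudnn_version()` should report a 7.6.x version.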

Finally, update your path and environment variables:

nano ~/.bashrc

Add the following lines to the bottom of that file:

export LD_LIBRARY_PATH="$LD_LIBRARY_PATH:/usr/local/cuda/lib64:/usr/local/cuda-10.1/lib64:/usr/local/cuda/extras/CUPTI/lib64"

export CUDA_HOME=/usr/local/cuda

export PATH="$PATH:/usr/local/cuda/bin"

Save and exit. Then source the file to apply the variables to the current session:

source ~/.bashrc
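A quick way to catch typos in ~/.bashrc is to check the variables from Python. This sketch assumes the exact directories used above and only reports what is missing. It reads os.environ by default but accepts any mapping, so you can test it.

```python
import os
from typing import List, Mapping, Optional

# Directories from the export lines above.
EXPECTED_LIB_DIRS = ["/usr/local/cuda/lib64", "/usr/local/cuda/extras/CUPTI/lib64"]


def missing_cuda_settings(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """List the CUDA environment settings that are not in place."""
    env = os.environ if env is None else env
    problems = []
    lib_dirs = env.get("LD_LIBRARY_PATH", "").split(":")
    for directory in EXPECTED_LIB_DIRS:
        if directory not in lib_dirs:
            problems.append("LD_LIBRARY_PATH is missing " + directory)
    if env.get("CUDA_HOME") != "/usr/local/cuda":
        problems.append("CUDA_HOME is not set to /usr/local/cuda")
    if "/usr/local/cuda/bin" not in env.get("PATH", "").split(":"):
        problems.append("PATH is missing /usr/local/cuda/bin")
    return problems
```

An empty list from `missing_cuda_settings()` means all three exports took effect in the current shell.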

When you’re done, check the current CUDA install. It should be v10.1.

nvcc --version
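If you want to check the CUDA version from a script, the `release` field in nvcc's output is the part to look at. A minimal sketch, assuming nvcc is on your PATH as configured above:

```python
import re
import subprocess


def parse_nvcc_release(output: str) -> str:
    """Extract the 'release X.Y' number from `nvcc --version` output."""
    match = re.search(r"release\s+(\d+\.\d+)", output)
    if match is None:
        raise ValueError("no CUDA release number found in nvcc output")
    return match.group(1)


def installed_cuda_release() -> str:
    """Run nvcc --version and return the CUDA release, e.g. '10.1'."""
    result = subprocess.run(
        ["nvcc", "--version"],
        stdout=subprocess.PIPE,
        universal_newlines=True,
        check=True,
    )
    return parse_nvcc_release(result.stdout)
```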

Install Speech-to-Text Server

DeepSpeech is an open source speech-to-text project maintained by Mozilla. We will use it as our STT service.

If you don’t already have pip and virtualenv, you will need to install them:

sudo apt install python3-pip

sudo pip3 install virtualenv

Next, create a directory and virtual environment for DeepSpeech:

mkdir -p ~/Projects/deepspeech

cd ~/Projects/deepspeech

virtualenv -p python3 deepspeech-venv

source deepspeech-venv/bin/activate
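Before installing packages, it can help to confirm that the virtual environment is actually active, so DeepSpeech does not end up in the system Python. A small sketch using the standard `sys` attributes that venv and virtualenv set:

```python
import sys


def in_virtualenv() -> bool:
    """Return True if this interpreter is running inside a virtual environment."""
    # venv and virtualenv 20+ set sys.prefix to the environment directory,
    # while sys.base_prefix still points at the system Python.
    if getattr(sys, "base_prefix", sys.prefix) != sys.prefix:
        return True
    # Older virtualenv releases (before 20) set sys.real_prefix instead.
    return hasattr(sys, "real_prefix")
```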

Install DeepSpeech and DeepSpeech server. Note: if you are not using CUDA, then install “deepspeech” instead of “deepspeech-gpu.”

python3 -m pip install deepspeech-gpu deepspeech-server

Download the DeepSpeech model files:

curl -LO https://github.com/mozilla/DeepSpeech/releases/download/v0.9.3/deepspeech-0.9.3-models.pbmm

curl -LO https://github.com/mozilla/DeepSpeech/releases/download/v0.9.3/deepspeech-0.9.3-models.scorer

Download a sample audio file and test DeepSpeech:

curl -LO https://github.com/mozilla/DeepSpeech/releases/download/v0.9.3/audio-0.9.3.tar.gz

tar xvf audio-0.9.3.tar.gz

deepspeech --model deepspeech-0.9.3-models.pbmm --scorer deepspeech-0.9.3-models.scorer --audio audio/2830-3980-0043.wav

You should see “experience proves this” printed in the output. If you listen to 2830-3980-0043.wav, you’ll hear someone saying those words.
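DeepSpeech 0.9.3's released models expect 16 kHz, mono, 16-bit PCM audio, so recordings in other formats are a common source of bad transcriptions. The sketch below checks a WAV file with Python's `wave` module and shows how the same transcription could be done with the `deepspeech` Python bindings installed above (`Model`, `enableExternalScorer`, `sampleRate`, and `stt`). It's a minimal sketch, not part of the procedure above, and `transcribe_file` only runs inside the DeepSpeech virtual environment.

```python
import wave


def check_deepspeech_wav(path: str, expected_rate: int = 16000) -> None:
    """Raise ValueError unless the file is mono, 16-bit PCM at expected_rate Hz."""
    with wave.open(path, "rb") as wav:
        channels, width, rate = wav.getnchannels(), wav.getsampwidth(), wav.getframerate()
    if channels != 1:
        raise ValueError("expected mono audio, got %d channels" % channels)
    if width != 2:
        raise ValueError("expected 16-bit samples, got %d-byte samples" % width)
    if rate != expected_rate:
        raise ValueError("expected %d Hz audio, got %d Hz" % (expected_rate, rate))


def transcribe_file(model_path: str, scorer_path: str, wav_path: str) -> str:
    """Transcribe a WAV file with the DeepSpeech 0.9 Python bindings."""
    # Imported here so the format check above works without DeepSpeech installed.
    import numpy as np
    import deepspeech

    model = deepspeech.Model(model_path)
    model.enableExternalScorer(scorer_path)
    check_deepspeech_wav(wav_path, model.sampleRate())
    with wave.open(wav_path, "rb") as wav:
        audio = np.frombuffer(wav.readframes(wav.getnframes()), dtype=np.int16)
    return model.stt(audio)
```

For example, `transcribe_file("deepspeech-0.9.3-models.pbmm", "deepspeech-0.9.3-models.scorer", "audio/2830-3980-0043.wav")` runs the same test as the command-line call above.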

Let’s create a configuration file for the DeepSpeech server:

nano config.json

In that file, copy in the following (change <username> to your username):

{
  "deepspeech": {
    "model": "/home/<username>/Projects/deepspeech/deepspeech-0.9.3-models.pbmm",
    "scorer": "/home/<username>/Projects/deepspeech/deepspeech-0.9.3-models.scorer",
    "beam_width": 500,
    "lm_alpha": 0.931289039105002,
    "lm_beta": 1.1834137581510284
  },
  "server": {
    "http": {
      "host": "0.0.0.0",
      "port": 5008,
      "request_max_size": 1048576
    }
  },
  "log": {
    "level": [
      { "logger": "deepspeech_server", "level": "DEBUG" }
    ]
  }
}
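Because a single missing quote or slash in config.json will stop the server from loading, you could also generate the file instead of typing it. This minimal sketch writes the same settings as above. The `model_dir` default of ~/Projects/deepspeech is an assumption that matches the directory created earlier.

```python
import json
import os


def write_deepspeech_config(
    model_dir: str = os.path.expanduser("~/Projects/deepspeech"),
    out_path: str = "config.json",
    port: int = 5008,
) -> dict:
    """Write a deepspeech-server config whose paths are built, not hand-typed."""
    config = {
        "deepspeech": {
            # os.path.join supplies the path separators; json.dump handles quoting.
            "model": os.path.join(model_dir, "deepspeech-0.9.3-models.pbmm"),
            "scorer": os.path.join(model_dir, "deepspeech-0.9.3-models.scorer"),
            "beam_width": 500,
            "lm_alpha": 0.931289039105002,
            "lm_beta": 1.1834137581510284,
        },
        "server": {
            "http": {"host": "0.0.0.0", "port": port, "request_max_size": 1048576}
        },
        "log": {"level": [{"logger": "deepspeech_server", "level": "DEBUG"}]},
    }
    with open(out_path, "w") as config_file:
        json.dump(config, config_file, indent=2)
    return config
```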

Save and exit. You can run the server with the following:

deepspeech-server --config config.json

In a second terminal, change to the ~/Projects/deepspeech directory and send the test audio file to the server:

curl -X POST --data-binary @audio/2830-3980-0043.wav http://localhost:5008/stt
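The same request can be made from Python, which is handy for testing the server from another machine on your network. This is a minimal sketch using only the standard library. Like the curl command, it POSTs the raw WAV bytes to /stt, and it assumes the server replies with the transcript as plain text.

```python
import urllib.request


def transcribe(wav_path: str, url: str = "http://localhost:5008/stt",
               timeout: float = 30.0) -> str:
    """POST a WAV file to a deepspeech-server /stt endpoint and return the text."""
    with open(wav_path, "rb") as wav_file:
        audio = wav_file.read()
    # Send the raw file bytes as the request body, like curl --data-binary.
    request = urllib.request.Request(url, data=audio, method="POST")
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8").strip()
```

From another computer, you would pass your server's address instead, e.g. `transcribe("audio/2830-3980-0043.wav", "http://<server-ip>:5008/stt")`.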

If that works, stop the server with Ctrl+C. You can then create a unit file to run the server on boot with systemd:

sudo nano /etc/systemd/system/stt-server.service

In that file, enter the following (once again, change <username> to your username):

[Unit]
Description=Mozilla DeepSpeech running as a server

[Service]
ExecStart=/home/<username>/Projects/deepspeech/deepspeech-venv/bin/deepspeech-server \
    --config /home/<username>/Projects/deepspeech/config.json

[Install]
WantedBy=multi-user.target
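Typing `<username>` into several paths by hand is easy to get wrong, so here is a hedged sketch that renders the same unit text from your home directory. The function names are illustrative. You would print the result of `deepspeech_unit()` and paste it into /etc/systemd/system/stt-server.service.

```python
import os
from typing import List


def render_unit(description: str, command: List[str]) -> str:
    """Build the text of a simple systemd service unit like the one above."""
    return "\n".join([
        "[Unit]",
        "Description=" + description,
        "",
        "[Service]",
        # ExecStart needs the absolute path to the executable in the virtualenv.
        "ExecStart=" + " ".join(command),
        "",
        "[Install]",
        "WantedBy=multi-user.target",
        "",
    ])


def deepspeech_unit(home: str = os.path.expanduser("~")) -> str:
    """Render the STT unit using the directory layout from this tutorial."""
    base = os.path.join(home, "Projects", "deepspeech")
    return render_unit(
        "Mozilla DeepSpeech running as a server",
        [
            os.path.join(base, "deepspeech-venv", "bin", "deepspeech-server"),
            "--config",
            os.path.join(base, "config.json"),
        ],
    )
```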

Save and exit. Reload systemd, enable the service (so it starts on boot), and then start it:

sudo systemctl daemon-reload

sudo systemctl enable stt-server.service

sudo systemctl start stt-server.service

You can check the status with:

systemctl status stt-server.service

You can stop and disable the service with the following:

sudo systemctl stop stt-server.service

sudo systemctl disable stt-server.service

You can watch the output of the service with:

journalctl --follow -u stt-server.service

Your STT server should now be running on your computer!

Install Text-to-Speech Server

We will be using the Coqui TTS server, which is a fork of the Mozilla TTS project with a server wrapper.

As with STT, we want to create a separate virtual environment:

mkdir -p ~/Projects/tts

cd ~/Projects/tts

virtualenv -p python3 tts-venv

source tts-venv/bin/activate

Install the TTS package:

python3 -m pip install tts

You can test the TTS server with the following command (set --use_cuda to false if you do not have CUDA installed):

python -m TTS.server.server --use_cuda true

Press Ctrl+C to stop the server. This command downloads and uses the default models. You can list the available models with the following:

tts --list_models

You can learn more about the TTS and vocoder models here.

If you would like to use a particular model and listen on a particular port, you can do so with the following command:

tts-server \
    --use_cuda true \
    --port 5009 \
    --model_name tts_models/en/ljspeech/tacotron2-DDC

Open a browser and navigate to port 5009 on your server: for example, http://localhost:5009 on the server itself, or http://<server-ip>:5009 from another computer on your network. You should be greeted with the Coqui page. Type a string into the input bar and press “Speak.” If your speakers are working, you should hear your string spoken back to you.
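You can also request audio without a browser. This sketch assumes the `/api/tts` endpoint with a `text` query parameter, which Coqui's server exposes and Mycroft's `mozilla` TTS module requests. It saves whatever audio the server returns to a WAV file.

```python
import urllib.parse
import urllib.request


def synthesize(text: str, base_url: str = "http://localhost:5009",
               out_path: str = "tts-test.wav", timeout: float = 60.0) -> str:
    """Fetch speech for `text` from the server's /api/tts endpoint and save it."""
    # urlencode escapes spaces and punctuation in the text for the query string.
    url = base_url.rstrip("/") + "/api/tts?" + urllib.parse.urlencode({"text": text})
    with urllib.request.urlopen(url, timeout=timeout) as response:
        audio = response.read()
    with open(out_path, "wb") as out_file:
        out_file.write(audio)
    return out_path
```

Generating the first sentence can take a while on CPU, which is why the timeout is generous.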

Stop the test server with Ctrl+C. Next, let’s create a unit file for systemd for our TTS server:

sudo nano /etc/systemd/system/tts-server.service

Copy in the following (change <username> to your username):

[Unit]
Description=Coqui TTS running as a server

[Service]
ExecStart=/home/<username>/Projects/tts/tts-venv/bin/tts-server \
    --use_cuda true \
    --port 5009 \
    --model_name tts_models/en/ljspeech/tacotron2-DDC

[Install]
WantedBy=multi-user.target

Save and exit. Load and enable the service. Then, run it:

sudo systemctl daemon-reload

sudo systemctl enable tts-server.service

sudo systemctl start tts-server.service

Check the status with:

systemctl status tts-server.service

You can stop and disable the service with:

sudo systemctl stop tts-server.service

sudo systemctl disable tts-server.service

Watch the output with:

journalctl --follow -u tts-server.service

Your TTS server should now be running on your computer!

Configure Mycroft

Make sure that your STT and TTS servers are running on your computer. Also, make sure that your Mycroft device (e.g. your Raspberry Pi) is connected to the same network as the computer running your STT and TTS services.
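Before editing Mycroft's config, it's worth confirming from the Pi that both ports are reachable. A minimal sketch using a plain TCP connection. The address in the example comment is the one used in the config later in this section, so substitute your own server's IP address.

```python
import socket
from typing import Dict


def port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


def check_servers(host: str, ports: Dict[str, int]) -> Dict[str, bool]:
    """Check several named ports on the same host."""
    return {name: port_open(host, port) for name, port in ports.items()}


# Example, run on the Pi (substitute your server's IP address):
# print(check_servers("192.168.1.209", {"stt": 5008, "tts": 5009}))
```

A `False` here usually means the service is not running or a firewall on the server is blocking the port.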

Log in to your Mycroft device and exit the CLI client (Ctrl+C). Then enter the following:

mycroft-config edit user

Add the “stt” and “tts” sections to have Mycroft point to our new STT and TTS servers, replacing 192.168.1.209 with the IP address of the computer running your servers. Here is an example of a full config file. Note that I have “hey jorvon” as a custom trained wake word model from a previous tutorial, so your “wake_word” and “hotwords” sections might be different.

{
  "max_allowed_core_version": 21.2,
  "listener": {
    "wake_word": "hey jorvon"
  },
  "hotwords": {
    "hey jorvon": {
      "module": "precise",
      "local_model_file": "/home/pi/.local/share/mycroft/precise/hey-jorvon.pb",
      "sensitivity": 0.5,
      "trigger_level": 3
    }
  },
  "stt": {
    "deepspeech_server": {
      "uri": "http://192.168.1.209:5008/stt"
    },
    "module": "deepspeech_server"
  },
  "tts": {
    "mozilla": {
      "url": "http://192.168.1.209:5009"
    },
    "module": "mozilla"
  }
}
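If you'd rather not edit the JSON by hand, the `stt` and `tts` sections can be merged into the user config with a short script. This sketch assumes the file at `config_path` (the one `mycroft-config edit user` opens) is plain JSON without comments. It replaces any existing `stt` and `tts` sections while leaving other keys, such as your wake word settings, alone.

```python
import json
import os


def point_mycroft_at_servers(config_path: str, server_ip: str,
                             stt_port: int = 5008, tts_port: int = 5009) -> dict:
    """Add stt/tts sections for the local servers to a Mycroft user config file."""
    config = {}
    if os.path.exists(config_path):
        with open(config_path) as config_file:
            config = json.load(config_file)  # fails if the file contains comments
    config["stt"] = {
        "module": "deepspeech_server",
        "deepspeech_server": {"uri": "http://%s:%d/stt" % (server_ip, stt_port)},
    }
    config["tts"] = {
        "module": "mozilla",
        "mozilla": {"url": "http://%s:%d" % (server_ip, tts_port)},
    }
    with open(config_path, "w") as config_file:
        json.dump(config, config_file, indent=2)
    return config
```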

Save and exit. Restart the Mycroft services:

mycroft-start all restart

Start the Mycroft CLI client:

mycroft-cli-client

With any luck, Mycroft should be using your new TTS and STT servers!

If you need debugging output, Mycroft logs can be found in /var/log/mycroft/.
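To skim those logs for problems, a small sketch like the following lists every line containing `ERROR` in the `.log` files in that directory. It assumes the level name appears as plain text in each line. STT problems typically show up in voice.log and TTS problems in audio.log.

```python
import os
from typing import List, Tuple


def find_log_errors(log_dir: str = "/var/log/mycroft",
                    needle: str = "ERROR") -> List[Tuple[str, str]]:
    """Return (file name, line) pairs for every log line containing needle."""
    hits = []
    for name in sorted(os.listdir(log_dir)):
        if not name.endswith(".log"):
            continue  # skip rotated or unrelated files
        path = os.path.join(log_dir, name)
        with open(path, errors="replace") as log_file:
            for line in log_file:
                if needle in line:
                    hits.append((name, line.rstrip("\n")))
    return hits
```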

Going Further

See the following guides if you need additional information about STT, TTS, or Mycroft:

Have questions or comments? Continue the conversation on TechForum, DigiKey's online community and technical resource.
