Develop Powerful Event-Driven Video Surveillance Systems with Efficient MCUs

Contributed By DigiKey's North American Editors

2016-05-12

When it comes to video surveillance, designers and their customers have long been forced to settle for extremely inefficient systems that rely on "dumb" bulk image capture and archiving. The overwhelming majority of the recorded content is of no interest, and the content that is of interest is difficult to pinpoint in the archive, assuming it was archived at all. Now, however, powerful, power-efficient and cost-effective processors, image sensors, and memory devices, combined with increasingly sophisticated software, give system developers the opportunity to incorporate valuable computer vision-processing capabilities into applications ranging from consumer surveillance systems to wearable "lifeblogging" cameras.

Intelligent, event-driven video surveillance systems record images only when, for example, a person or other object of interest enters the frame, and only for as long as the object remains in the frame. Such autonomous intelligence has historically been available only in expensive, bulky, power-thirsty equipment used by government, military and other high-end customers. Now it can be delivered at consumer-friendly prices, with long battery life, and in a form factor small and light enough to sit attractively on a shelf.

What can you do with the potential delivered by today's vision processing hardware and software? Here are some ideas, based on a consumer surveillance system case study:

- An elementary design might begin recording whenever it senses motion in the frame and continue for a fixed amount of time. A slightly more elaborate approach would record for a variable length of time, stopping once it discerns that object motion has ceased and/or the object has disappeared from the frame.

- Such an approach, however, might generate lots of "false positives" caused by blowing leaves, passing vehicles, and the like. If warm-blooded animals are the only objects of interest, you might therefore want to supplement the visible-light camera with an IR detector or other thermal sensor. More generally, available algorithms will let you fine-tune your object "trigger" for size, color, distance, movement rate, and other threshold parameters.

- What if humans are the only objects you care about? A face detection function can assist in this regard. You might even be interested in triggering the camera whenever a person enters the frame, unless that person is yourself, your spouse, one of your kids, the mailman, etc. For this, you'll need more robust facial recognition facilities.
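The trigger logic outlined in the list above can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions, not an OpenCV API or a production design: frames are assumed to be small grayscale images stored as lists of rows, motion is approximated by simple frame differencing, and the names `changed_pixels`, `EventRecorder`, `pixel_delta`, `min_changed` and `hold_frames` are all hypothetical.

```python
def changed_pixels(prev, curr, pixel_delta=25):
    """Count pixels whose grayscale value changed by more than pixel_delta."""
    return sum(
        1
        for row_prev, row_curr in zip(prev, curr)
        for a, b in zip(row_prev, row_curr)
        if abs(a - b) > pixel_delta
    )


class EventRecorder:
    """Variable-length recording: start on motion, stop after a quiet period."""

    def __init__(self, min_changed=4, hold_frames=2):
        self.min_changed = min_changed  # size threshold: ignore tiny changes
        self.hold_frames = hold_frames  # quiet frames tolerated before stopping
        self.prev = None
        self.quiet = 0
        self.recording = False

    def update(self, frame):
        """Feed one frame; return True if this frame should be recorded."""
        motion = (
            self.prev is not None
            and changed_pixels(self.prev, frame) >= self.min_changed
        )
        self.prev = frame
        if motion:
            self.recording = True
            self.quiet = 0
        elif self.recording:
            self.quiet += 1
            if self.quiet >= self.hold_frames:
                self.recording = False
        return self.recording
```

Raising `min_changed` is one crude way to suppress small disturbances such as blowing leaves, while `hold_frames` keeps a clip from being cut short when an object pauses briefly. A real design would likely replace the frame differencing with a more robust background model.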

The OpenCV Computer Vision Library

This project, as is typical, begins with (and is fundamentally constrained by) its software definition and development schedule. Computer vision has mainly been a field of academic research over the past several decades; as such, there isn't yet a large, mature repository of industry expertise in this particular field. Additionally, academic experiments tend not to be broadly applicable to real-world implementations where, for example, ambient lighting and weather conditions can vary widely from one usage situation to another and deviate from the more controlled conditions found in the research laboratory.

Fortunately, and as usual, the open source community comes through with resource assistance. The OpenCV (open-source computer vision) library originated at Intel's research division; the company formally handed it over to the public at the 2000 CVPR (IEEE Computer Vision and Pattern Recognition, a well-known computer vision conference). In beta for its first half-decade, OpenCV gained v1.0 "gold" status in 2006, followed by v2.0 three years later and v3.0 in mid-2015 (v3.1, released in December 2015, is the latest release as of this writing).

OpenCV, released under a BSD license, is free for both academic and commercial use. Written in optimized C/C++, it has C++, C, Python and Java interfaces and supports Windows, Linux, Mac OS, iOS and Android operating systems. Notably for this particular surveillance camera project, the library contains more than 2,500 algorithms, including those for identifying and tracking objects, detecting and recognizing human faces, and classifying human actions.

Microchip Technology's PIC32MZ EF Series MCUs

One potential downside to using OpenCV, however, bears mentioning. The library's Intel- and PC-centric origins are reflected in the fact that much of the foundation code contained within it is floating point-based, which can be problematic for some fixed-point-only embedded system designs. Truth be told, most computer vision functions don't even require floating point precision. As such, some processor suppliers have developed architecture-tailored versions of some or all of the OpenCV library, tackling floating- to fixed-point conversions of the code along with offering other optimizations. However, if you're stuck doing the conversions yourself, the effort may be cost- and schedule-prohibitive.
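To give a feel for what a floating- to fixed-point conversion involves, here is a small sketch in Python using RGB-to-grayscale conversion as the example. It is not taken from OpenCV; the function names are hypothetical, the luma weights are the common BT.601 values (an assumption), and the fixed-point version scales those weights by 256 so that only integer multiplies, adds and a shift remain.

```python
def to_gray_float(r, g, b):
    """Reference version: floating-point weighted sum of the color channels."""
    return round(0.299 * r + 0.587 * g + 0.114 * b)


def to_gray_fixed(r, g, b):
    """Fixed-point version: weights pre-scaled by 256 (77 + 150 + 29 == 256).

    Adding 128 before the shift rounds to nearest instead of truncating.
    """
    return (77 * r + 150 * g + 29 * b + 128) >> 8


if __name__ == "__main__":
    # Compare both versions on a coarse grid of colors.
    worst = max(
        abs(to_gray_float(r, g, b) - to_gray_fixed(r, g, b))
        for r in range(0, 256, 51)
        for g in range(0, 256, 51)
        for b in range(0, 256, 51)
    )
    print("largest difference in gray levels:", worst)
```

For 8-bit inputs the two versions differ by at most one gray level, which illustrates why many vision functions tolerate fixed-point arithmetic. The cost lies in repeating this kind of analysis, function by function, across a large library.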

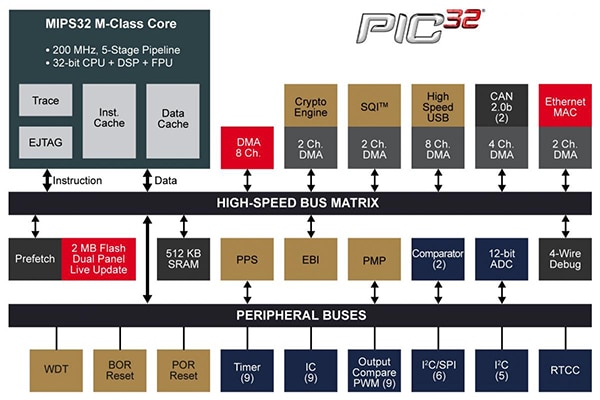

Microchip's new PIC32MZ EF MCUs offer a straightforward alternative solution to the OpenCV floating-point problem (Figure 1). At their core is a high performance 32-bit MIPS microAptiv processor running at up to 200 MHz and capable of tackling a diversity of computer vision functions. In addition, as reflected in the "EF" suffix of the 48-member product family name, Microchip has embedded a 32- and 64-bit IEEE 754-compliant seven-stage FPU alongside the integer CPU, capable of running floating point OpenCV code unchanged and at high speed.

Figure 1: The combination of a high performance CPU and a 32- and 64-bit FPU coprocessor makes Microchip's PIC32MZ EF MCUs a compelling candidate when using open source code. (Image courtesy of Microchip Technology)

Other useful aspects of the PIC32MZ EF include its integrated 10/100 Mbit Ethernet MAC and a host of system interfaces (their variety and number are to some degree package- and pinout-dependent, since the MCU family comes in multiple options). The MAC, in combination with an external PHY, can support the surveillance camera's network connectivity needs either directly (if wired Ethernet is your networking technology of choice) or indirectly via an external wired-to-wireless Ethernet bridge. Alternatively, you can accomplish wireless cellular and/or Ethernet connectivity by means of an external transceiver connected to a PIC32MZ EF USB 2.0 or other interface port.

Image Sensor Alternatives

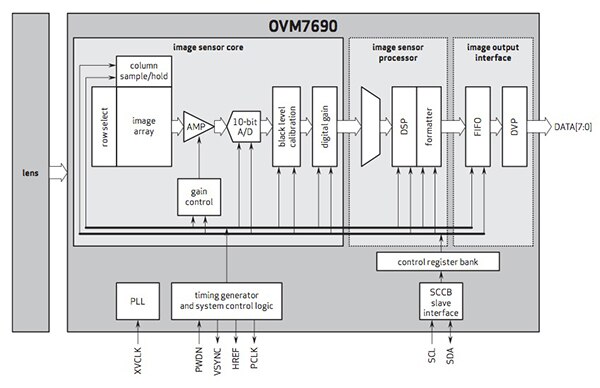

The previously mentioned MCU interface diversity is beneficial not only in delivering network connectivity variety but also image sensor flexibility. One straightforward means of connecting a camera to the PIC32MZ EF involves incorporating an OmniVision Technologies OVM7690 VGA-resolution camera module in the design, connected to the MCU via an 8-bit I/O port (Figure 2). This approach is beneficial for a few key reasons; the OVM7690 already contains wafer-level optics in the form of a 64° field of view (diagonal), F/3.0 lens, for example, so you don't need to deal with adding a separate optics subsystem to your design. Also, the OVM7690 embeds a dedicated image processor, thereby unburdening the PIC32MZ EF from image pre-processing tasks such as de-mosaicing, rescaling, format conversion and exposure control.

Figure 2: An integrated camera module touts design simplicity (top) but the images it delivers, while pleasing to the eye, may be less amenable to computer vision processing than the unprocessed outputs of a conventional image sensor (bottom). (Images courtesy of OmniVision Technologies and ON Semiconductor, respectively)

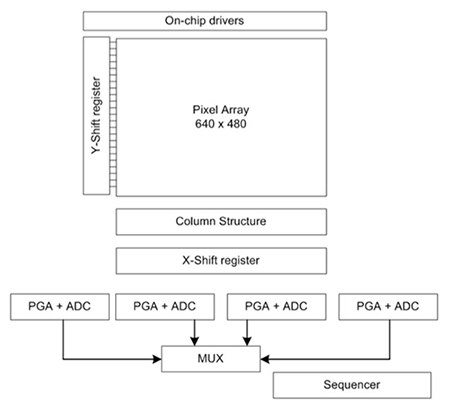

However, plausible scenarios exist that may compel you to instead go with a conventional image sensor such as ON Semiconductor's VGA-resolution NOIL1SM0300A in combination with a lens of your own design and connected to the PIC32MZ EF via one of its SPI ports. First and foremost, images that are pleasing to the human eye may conversely be seen as detrimental to a computer vision-processing algorithm. For example, edge enhancement automatically done by an image preprocessor might result in artifacts that complicate the task of differentiating an object from its background. The same goes for automatic exposure control, white and black level balance, color correction, and similar tasks commonly done by default by the image coprocessor built into a camera module.

You might also, for example, require a different lens focal length and/or aperture than what's provided by the sensor module manufacturer. Regardless of whether you go with an integrated camera module or a standalone image sensor, however, you'll likely find that cost-effective VGA resolution product options will suffice; sometimes, an even less expensive QVGA or CIF resolution product will be all you need. The only times you might need to exceed 3 Mpixels in resolution are if you're attempting to discern an object far in the distance, or under particularly poor ambient viewing conditions, both cases benefitting from greater source image detail. You may also choose a higher resolution image sensor or camera if your target customer insists on viewing "HD" video, regardless of whether the computer vision software requires it.
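The link between distance and required resolution can be estimated with simple pinhole-camera geometry. The sketch below is an approximation under stated assumptions: the function name and example figures are hypothetical, lens distortion is ignored, and the field of view is taken as the horizontal one (the 64° quoted earlier for the OVM7690 is a diagonal figure, so it cannot be plugged in directly).

```python
import math


def pixels_on_target(h_pixels, h_fov_deg, object_width_m, distance_m):
    """Estimate how many horizontal pixels an object spans in the image.

    The scene width visible at a given distance is 2 * d * tan(FOV / 2);
    the object occupies its share of that width, scaled to the pixel count.
    """
    scene_width_m = 2.0 * distance_m * math.tan(math.radians(h_fov_deg) / 2.0)
    return h_pixels * object_width_m / scene_width_m


if __name__ == "__main__":
    # Hypothetical case: 0.5 m wide person, 50 degree horizontal field of view.
    for name, width in (("QVGA", 320), ("VGA", 640)):
        for distance in (5.0, 10.0, 20.0):
            px = pixels_on_target(width, 50.0, 0.5, distance)
            print(f"{name} at {distance:4.1f} m: {px:5.1f} pixels across")
```

Because the pixel count on the object falls in proportion to distance, doubling the detection range calls for roughly double the horizontal resolution (or a narrower lens) to keep the same level of detail for the vision algorithm.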

Local Mass Storage

Recall that the fundamental objective of this project is to record video only when an event of interest occurs that is "seen" by the camera, and only for as long as that event continues. In doing so, the implementation minimizes the capacity of flash memory or other storage technology required for the design (not to mention saving precious battery life in the process). Still, while the 512 KBytes to 2 MBytes of flash memory, along with 128 KBytes to 512 KBytes of RAM integrated within various PIC32MZ EF MCU family members, may suffice for nonvolatile code storage and transient data storage purposes, higher-capacity external storage for the video clips themselves will still be necessary.

You could always go with a standalone NAND flash memory device (or a few of them), of course, mated to the MCU via an I/O bus. However, you'd need to develop your own media management software, to handle background "garbage collection" cleanup of flash memory erase blocks that have become filled with valid and/or retired video data, for example, as well as to wear-level the media in order to prevent "hot" over-cycling of some erase blocks versus others. Plus, this media management would need to be handled by the MCU itself, thereby consuming precious processor cycles that could otherwise be devoted to computer vision processing and other tasks.
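To illustrate the kind of bookkeeping involved, here is a deliberately simplified wear-leveling sketch in Python. It is not any vendor's algorithm: the class and method names are hypothetical, it tracks only per-block erase counts, and it omits everything else a real flash translation layer needs (bad-block handling, logical-to-physical mapping, power-loss recovery, and garbage collection of partially valid blocks).

```python
class WearLeveler:
    """Hand out the least-erased free block so that wear spreads evenly."""

    def __init__(self, num_blocks):
        self.erase_counts = [0] * num_blocks
        self.free = set(range(num_blocks))

    def allocate(self):
        """Return the free block with the fewest erase cycles so far."""
        if not self.free:
            raise RuntimeError("no free erase blocks; garbage collection needed")
        # Ties are broken by block index to keep the choice deterministic.
        block = min(self.free, key=lambda b: (self.erase_counts[b], b))
        self.free.remove(block)
        return block

    def retire(self, block):
        """Erase a block whose data is no longer needed and free it again."""
        self.erase_counts[block] += 1
        self.free.add(block)


if __name__ == "__main__":
    wl = WearLeveler(num_blocks=3)
    for _ in range(9):          # write and discard nine clips in a row
        wl.retire(wl.allocate())
    print(wl.erase_counts)      # wear ends up spread across all blocks
```

Even this toy version has to run on every write and erase, which hints at the processor cycles a complete media manager would take away from the vision workload.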

Instead, consider using a flash memory mass storage solution that includes its own media management controller. Options include removable SD cards (and their smaller miniSD and microSD siblings), along with Micron Technology's BGA-packaged e.MMC NAND flash memory; both options interface to the PIC32MZ EF MCU via a few-pin I/O bus (Figure 3). Depending on the captured frame resolution, frame rate and compression format, for example, Micron's 32 GByte e.MMC should enable you to store anywhere from many dozens of minutes' to multiple hours' worth of video. Additionally, via email, text message or other alert, you can communicate the capture status of new video (along, optionally, with some or all of the video itself) to the surveillance system owner; the video will be retained within the camera for subsequent review, archive, and/or deletion.
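The storage estimate follows from simple arithmetic, sketched below in Python. The function names are hypothetical, and the example figures are assumptions rather than measurements: VGA frames at 2 bytes per pixel and 30 frames per second, a 10:1 compression ratio, and 32 GBytes counted as 32 × 10⁹ bytes with no file system overhead.

```python
def raw_bitrate_bps(width, height, bytes_per_pixel, fps):
    """Bit rate of uncompressed video, in bits per second."""
    return width * height * bytes_per_pixel * fps * 8


def recording_minutes(capacity_bytes, bitrate_bps):
    """Minutes of video that fit in the given capacity at the given bit rate."""
    return capacity_bytes * 8 / bitrate_bps / 60


if __name__ == "__main__":
    capacity = 32 * 10**9                      # assumed usable capacity, bytes
    raw = raw_bitrate_bps(640, 480, 2, 30)     # uncompressed VGA at 30 fps
    compressed = raw / 10                      # assumed 10:1 compression

    print(f"uncompressed: {recording_minutes(capacity, raw):.1f} minutes")
    print(f"10:1 compressed: {recording_minutes(capacity, compressed) / 60:.1f} hours")
```

Under these assumptions, uncompressed VGA video fills the device in roughly 29 minutes, while 10:1 compression stretches that to roughly 4.8 hours. Since event-driven capture records only a fraction of the day, the same capacity can cover a much longer deployment period.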

Figure 3: A flash memory mass storage solution with an integrated media management controller both frees up the system processor to handle other tasks and simplifies your software development efforts. (Image courtesy of Micron Technology)

Conclusion

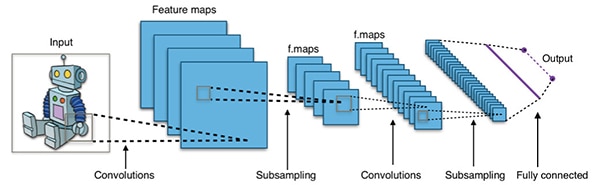

This project description admittedly isn't all-encompassing; the AC/DC and DC-stepping power subsystem will still need to be added, for example, and you might also want to include a microphone and ADC in order to record audio along with the images. However, it covers some key pieces of the design. Keep in mind that the more complex each algorithm, and the more of them you combine, the more likely you are to overload the processing potential of the PIC32MZ EF's CPU and FPU. With that said, new algorithms such as the emerging convolutional neural network "deep learning" technique for object identification (Figure 4), along with optimizations of existing algorithms, are appearing all the time.

Figure 4: Convolutional neural networks (CNNs) and other "deep learning" approaches, once trained with a series of reference images, have been shown to deliver impressive object recognition results at the tradeoff of substantial processing and memory requirements. (Image courtesy of Wikipedia)

Abundant field testing prior to production is highly recommended; inevitably, you'll encounter ambient conditions and usage scenarios that you didn't think about during product development, which will necessitate algorithm fine-tuning. Implementation nuances aside, the combination of a cost-effective processor such as the PIC32MZ EF MCU running open-source software like OpenCV, with images captured by a sensor or camera module, stored to resident flash memory and transferable over a network connection, makes all sorts of interesting applications possible: both enhancements of existing products and brand new product categories. We'll have more on CNNs at a later date. In the meantime, have fun, and good luck!

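Disclaimer: The opinions, beliefs, and viewpoints expressed by the various authors and/or forum participants on this website do not reflect the opinions, beliefs, and viewpoints of DigiKey, nor do they represent official DigiKey policy.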
