3M Twin Axial Cable Solution Case Study

2015-09-15

Situation

In 2014, Dell set out to achieve new benchmarks in high-performance computing (HPC) server performance, density, reliability and cost. To realize its goals, Dell knew it had to produce the most powerful package in the smallest thermally efficient envelope possible. Plus, its new line of servers had to reach the market at breakneck speed.

Dell chose 3M to help make it happen. Working side-by-side, Dell and 3M engineers brought to life Dell’s powerful new PowerEdge™ C4130 rack server design by leveraging 3M’s unique, assembly-ready, low-profile PCIe cabling from 3M’s Twin Axial Cable product line. From concept to production, the latest in Dell’s PowerEdge Server Portfolio broke new ground.

“We had very different sets of challenges from those of normal cable development,” says Corey Hartman, concept architect and mechanical engineer at Dell. “It required collaboration between the two companies because we had very specific mechanical, thermal, and electrical goals. We wanted to work with 3M to make sure we could manufacture it and still meet those very special design goals.”

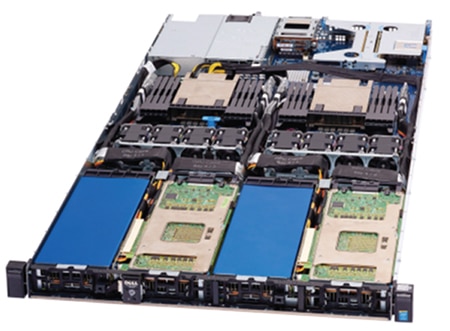

Figure 1: Dell’s new PowerEdge™ C4130 rack servers are the latest in Dell’s PowerEdge Server Portfolio, and serve as a purpose-built solution to support HPC environments within the scientific and medical research, financial, industrial and data-intensive computing fields. Dell’s PowerEdge Server Portfolio uses 3M’s Twin Axial Cable Assembly to optimize airflow for better cooling to maintain the high speeds and processing power HPC environments demand.

GPU Density Key

At the heart of high-performance computing systems are graphics processing units (GPUs). Capable of executing millions of commands a second, faster and faster GPUs render scientific, engineering and design calculations breathlessly. Without ultra-fast GPUs, today’s cloud computing, virtualization and 3-D visualization would be impossible. Universities, oil and gas exploration, and big business all rely on GPUs for processor-heavy applications.

High-bandwidth, high-power GPUs, if used in tandem and with the right server architecture, can unleash incredible increases in processing power and performance. However, they must be packaged densely to achieve HPC rack-scale solutions and to serve researchers and users in datacenters of all sizes. Additionally, the architecture must be flexible enough to meet the varying demands of HPC workloads. Fitting more GPUs per server and more GPUs per rack is a significant advantage to an HPC architect.

“Customers don’t go out and shop for servers in this market – they have a workload that requires a certain number of GPUs,” Hartman says. “So they actually start their search by calculating how many GPUs they need to achieve their goals, and then how many racks and servers and other infrastructure they have to purchase to house that amount of GPU computational power.”

“First, the fact that you can get more GPUs in one server means that you can buy fewer servers to accomplish your workload,” Hartman says. “This means lower operating expenses to meet your goals. Second, a dense server with front GPUs, potentially far away from CPUs, could offer incredible thermal and performance advantages. Lastly, we needed an architecture that was flexible enough to meet the extremely varying configurations of the system’s PCI bandwidth, to meet a wide array of software architecture used in HPC segments. These were our key goals.”

The new server design required a high degree of coordination between the mechanical and electrical teams at both Dell and 3M. Product engineers at 3M engaged Dell’s teams in person, virtually and by employing 3-D printing technology and multiple production mockups to validate product fit. Dell and 3M had to demonstrate feasibility and simultaneously move rapidly to market.

“Dell’s schedules are always aggressive, but this time their goal was unusually aggressive,” says Mark Lettang, interconnect applications engineer with 3M Electronics Materials Solutions Division. “We first met on the project in January and the release date was set for December. Dell needed production-quality material by August. It’s an understatement to say this was breakneck speed.”

3M Solution

Dell chose to lead the market by placing four high-powered GPUs adjacent to each other in the very front of a 1U rack server, which is just 1.75 inches tall and 3 feet long. 3M™ Twin Axial Cables made the connections possible.

3M™ Twin Axial Cable Assemblies are not like conventional twin axial cables – they are in a class of their own. 3M Twin Axial Cable is the industry's first low-profile, foldable and longitudinally shielded high-performance twin axial ribbon cable that can make sharp turns and fold with little or no impact on electrical performance. The 3M cable outperforms conventional cable constructions in bend radius, signal integrity, ease of termination and overall routability.

By positioning the GPUs up front, where fresh air enters the server rack, Dell’s PowerEdge™ C4130 rack server offers the best possible architecture for GPU performance and reliability. 3M™ Twin Axial Cable assemblies can be designed with folds that minimize airflow obstruction and, therefore, help reduce the cost, complexity and power consumption of cooling the system. The cable can snake between processors, heatsinks and fans, freeing up valuable space in the mechanical design of the unit for components or airflow.

Figure 2: Dell’s PowerEdge Server Portfolio uses 3M’s Twin Axial Cable Assembly to optimize airflow for better cooling to maintain the high speeds and processing power HPC environments demand.

“We are able to architect a GPU-dense box with ambient air at the GPUs, as opposed to pre-heated air that typical GPU systems provide to some or all of their GPUs,” Hartman says. “That differential provides the best possible performance and reliability in your datacenter environment.”

Dell evaluated various approaches, but identified 3M as a leading candidate early on, Hartman says. “3M’s cable media could be folded and bent to dodge mechanical obstacles. Other media has much higher losses that would have required us to change our motherboard approach to make up for those losses. This could have ended up blocking other key server features.”

3M™ Twin Axial Cable is less than 0.9 mm thick and pliable. By contrast, the industry-standard discrete-pair internal high-speed cable assemblies typically found in HPC servers are bulky, with some simple PCIe four-channel assemblies having a cable bundle thickness of 6 to 12 mm.

Figure 3: The ultra-flat, foldable ribbon design of the 3M™ Twin Axial Cable Assembly optimizes airflow for better cooling to maintain the high speeds and processing power HPC environments demand.

Tests show that each fold (180-degree fold at 1 mm bend radius) impacts impedance at the fold location by approximately 0.5 ohms (70 ps 20/80 percent rise time), well within the tightest impedance specification. The cable is suitable to be used in current and emerging high-speed serial transmission standards, such as SAS, PCI Express, Ethernet, InfiniBand®, and more.

“We knew that our layout required a unique mechanical packaging and we knew that it would require more advanced riser technology,” Hartman says. “We couldn’t fit conventional cables as our PCI bus in this very challenging envelope, but the 3M technology was able to fit within that. It gave us the flexibility to come up with different configurations. It’s allowed different data path options.”

GPUs at the front of the server are also easier to service because they are much easier to access in the rack. Dell’s customers reported monitoring, servicing and/or upgrading GPUs more often than any other components in their HPC environment, so the new design would present immediate advantages.

Design Behind-the-Scenes

To customize the special 3M™ Twin Axial Cable technology for the PowerEdge™ C4130 rack server, 3M engineers combined physical design with pre-production modeling and proof of mechanical fit. The process started with understanding the spatial demands of Dell’s server envelope.

“Working with an assembly pinout, PCB designs and a mechanical server model, we worked closely with Dell engineers to devise solutions which minimized airflow disturbance, while also considering assembly manufacturability (i.e. DFM),” 3M’s Lettang says. “Maintaining the required pinout for multi-ribbon assemblies through many folds can be non-trivial – there are a few hundred pinout combinations for a four-ribbon assembly, and only one of them is correct.”
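Lettang's "few hundred" figure is consistent with a simple counting model, although the case study does not say how the number was derived. The sketch below assumes, purely for illustration, that each of the four ribbons can end up in any position in the stack and in either of two orientations (flipped or not) at the far connector. The ribbon labels and the function name are hypothetical.

```python
from itertools import permutations, product

# Hypothetical labels for the four ribbons in one assembly.
RIBBONS = ("A", "B", "C", "D")


def candidate_arrangements(ribbons):
    """Yield every (stack order, per-ribbon flip state) combination.

    Assumed model, not taken from the case study: folding can change
    both the order of the ribbons in the stack and whether each ribbon
    arrives face-up or face-down.
    """
    for order in permutations(ribbons):
        for flips in product((False, True), repeat=len(ribbons)):
            yield tuple(zip(order, flips))


arrangements = list(candidate_arrangements(RIBBONS))
print(len(arrangements))  # 4! orderings * 2**4 flip states = 384
```

Under this assumed model, only one of the 384 candidates preserves the required pinout, which gives a sense of why tracking the pinout through many folds is non-trivial.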

Close cooperation led to success early on.

“We had 3M on-site for brainstorming meetings,” Hartman says. “I think one of the great benefits of having 3M on site was that we were able to try out many different ideas within each session. We kept peeling the onion to solve the challenges we found.”

Once the proper pin-outs were established, 3M engineers progressed to a mechanical model that could test the cable design’s physical fit. Initially, Dell provided 3M access to a prototype server to refine cable assembly mock-ups, but later in the process 3M employed 3-D printers to create the allowable routing envelope. These allowed 3M to test and perfect design iterations more quickly. Today, 3M can often explore cable routing options starting with just a CAD file.

Figure 4: The low-profile capabilities of 3M™ Twin Axial Cable Assemblies save a substantial amount of internal space and help alleviate congestion inside dense server systems—giving design engineers considerably more room for server architecture options.

“In some areas, there are eight ribbons stacked on each other,” Lettang says. “So the thickness of a ribbon is particularly critical. The thinness of our twin ax ribbon was key to the solution.”
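The dimensions quoted in this case study give a rough sense of why ribbon thickness matters in an eight-high stack. The sketch below is back-of-the-envelope arithmetic only: it takes 0.9 mm as an upper bound per ribbon, ignores any gaps between layers, and compares against a hypothetical stack of eight conventional 6 to 12 mm bundles, which is an assumption made for contrast rather than a layout anyone proposed.

```python
INCH_TO_MM = 25.4


def stack_height_mm(layers: int, thickness_mm: float) -> float:
    """Height of a stack of identical layers, ignoring gaps (assumption)."""
    return layers * thickness_mm


# Figures quoted in the case study.
chassis_height_mm = 1.75 * INCH_TO_MM       # 1U server height, about 44.45 mm
ribbon_stack = stack_height_mm(8, 0.9)      # upper bound: ribbon is < 0.9 mm

# Hypothetical comparison: eight conventional bundles in the same spot.
bundle_stack_low = stack_height_mm(8, 6.0)
bundle_stack_high = stack_height_mm(8, 12.0)

print(f"1U chassis height:        {chassis_height_mm:.2f} mm")
print(f"8 ribbons (upper bound):  {ribbon_stack:.1f} mm")
print(f"8 conventional bundles:   {bundle_stack_low:.0f} to {bundle_stack_high:.0f} mm")
```

With these numbers, eight ribbons occupy at most about 7.2 mm of the roughly 44 mm chassis height, whereas eight conventional bundles stacked the same way would not fit in a 1U enclosure at all.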

“After the layout was determined, 3M Twin Axial Cable design advantages helped speed the manufacturing process,” added Hartman. “Because 3M’s cable retains its shape, it can be placed and installed much like any other component.”

“You drop it in like a mechanical part,” Hartman says. “When you’re building a system, it’s actually easier for the builder because they’re not having to route complex cables with lots of bends; they are really just dropping them in as a static part.”

Results

Dell’s PowerEdge™ C4130 rack server hit the market on spec and on time. It also broke new ground in server density, thermal stability and serviceability.

“What we’ve done is over-designed the GPUs well above spec,” Hartman says. “3M’s cabling was key to PowerEdge™ C4130 rack server achieving its key design goals. With 3M, we’ve packed an incredibly powerful system into a very small space. We are able to realize cooling temperatures of 25-35°C – that’s 15 degrees lower than the air at the rear of the box. That differential provides performance and reliability benefits.”

3M provides a wide array of innovative products and systems that enable greater speed, brightness and flexibility in today's electronic devices, while addressing industry needs for increased thinness, sustainability and longevity. Using the most recent R&D advances in materials and science, 3M offers technology, materials and components to create exceptional visual experiences; enable semiconductor processes and consumer electronics devices, and enhance and manage signals. 3M enables the digitally enhanced lifestyle of today and tomorrow.
