ASCII: The History Behind Encoding

2024-05-17 | By Antonio Velasco

ASCII (American Standard Code for Information Interchange) and binary are two fundamental concepts in the world of computer science and digital communications. Understanding the relationship between these two is crucial for anyone delving into computing, programming, and data processing. Let's explore this relationship in detail, while also briefly touching upon other similar text encoding systems.

What is ASCII?

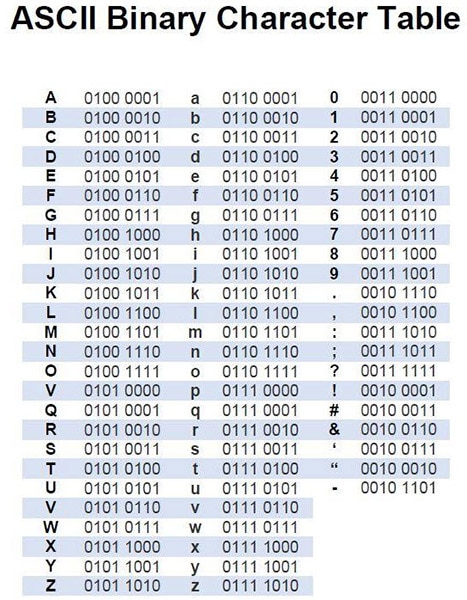

ASCII (usually pronounced "ASS-kee") is a character encoding standard designed in the 1960s that assigns a unique number to each character, such as letters, digits, and symbols. ASCII uses 7 bits per character, so it can represent 2^7 = 128 unique characters. This set includes control characters (like carriage return or line feed) and printable characters (like letters, digits, and punctuation marks). For example, in ASCII, the uppercase letter 'A' is represented by the decimal number 65, which is 1000001 in binary.
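To make the 'A' example concrete, here is a minimal Python sketch. It relies only on the built-ins `ord`, `chr`, and `format`; the variable names are illustrative, and the control/printable split follows the usual convention that codes 0 to 31 plus 127 are control characters.

```python
# Look up the ASCII code for a character and show it as a 7-bit binary string.
code = ord("A")              # character -> decimal code
bits = format(code, "07b")   # decimal code -> 7-bit binary string
print(code, bits)            # 65 1000001

# chr() goes the other way: decimal code -> character.
print(chr(65))               # A

# Split the 128 codes into control and printable characters.
control = [c for c in range(128) if c < 32 or c == 127]
printable = [c for c in range(128) if 32 <= c <= 126]
print(len(control), len(printable))   # 33 95
```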

The ASCII table above gives a general idea of which character maps to which code.

In computing, ASCII serves as a bridge between human-readable text and the binary language of computers. When text is stored or processed in a computer, each ASCII character is converted into its corresponding 7-bit binary code. In practice, modern systems store each code in an 8-bit byte with the leading bit set to 0. This conversion is straightforward: the ASCII standard provides a table that maps each character to a number, and that number is written in binary. Because the mapping is fixed, the same text always produces the same bits, whichever system encodes it.
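The text-to-binary direction can be sketched in a few lines of Python. The function name `text_to_binary` and its `width` parameter are made up for this example; `str.encode("ascii")` is the standard library call, and it raises `UnicodeEncodeError` for any character outside the 128-character ASCII set.

```python
def text_to_binary(text: str, width: int = 7) -> str:
    """Return the ASCII codes of `text` as space-separated binary groups."""
    data = text.encode("ascii")  # raises UnicodeEncodeError on non-ASCII input
    return " ".join(format(byte, f"0{width}b") for byte in data)


print(text_to_binary("Hi"))      # 1001000 1101001
print(text_to_binary("Hi", 8))   # 01001000 01101001
```

Passing `width=8` shows the same codes padded to a full byte, which is how they are typically stored.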

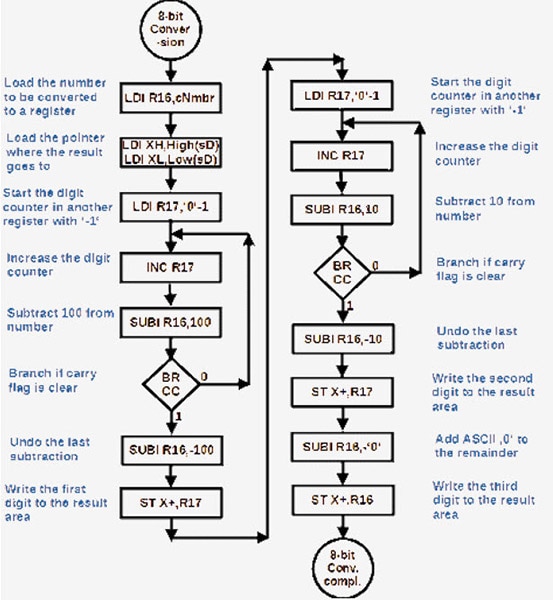

The flowchart above depicts how an 8-bit binary input is converted into an ASCII string. It's a little bit complicated, especially as the formatting can get weird, but the main point is that the same input always produces the same output.
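As a rough companion to the flowchart, the sketch below decodes 8-bit binary groups back into text. It is not a transcription of the flowchart itself: the function name `binary_to_text` and the choice of space-separated input are assumptions made for this example.

```python
def binary_to_text(bits: str) -> str:
    """Decode space-separated 8-bit binary groups into an ASCII string."""
    groups = bits.split()
    for group in groups:
        # Reject anything that is not exactly eight 0/1 characters.
        if len(group) != 8 or set(group) - {"0", "1"}:
            raise ValueError(f"not an 8-bit binary group: {group!r}")
    # int(group, 2) parses base-2 text; decode("ascii") rejects values above 127.
    return bytes(int(group, 2) for group in groups).decode("ascii")


print(binary_to_text("01001000 01101001"))   # Hi
```

The formatting check is there because stray spacing or a dropped bit is the usual reason a conversion like this goes wrong.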

If you're interested in learning more about how to translate between binary and ASCII, The Organic Chemistry Tutor has a really great video on how to do it.

If So Complicated, Why ASCII?

One of the significant advantages of ASCII is the consistency it brings to computer systems. Before ASCII, different computers had different ways of representing characters, leading to compatibility issues. ASCII standardized text encoding, ensuring that a piece of text encoded on one system could be read and understood on another. Think of it like this: say there's a brand-new language, X, that only speakers of one other language, Y, understand. We speak our own language, Z, and want to translate something into X. Nobody who speaks Z knows X, so we can't translate directly. Fortunately, Z can be translated into Y, and Y can be translated into X. In this case, Y is ASCII, serving as a common language for systems to talk to each other. This standardization has been fundamental in the development of digital computing and communication.

While the original ASCII uses 7 bits for each character, extended ASCII versions use 8 bits (or one byte), allowing for 256 different symbols or characters. This expansion provides room for additional characters, including accented letters, graphic symbols, and different glyphs used in non-English languages. However, even with extended ASCII, the limitations in language support and character sets were apparent, leading to the development of other encoding systems.

Binary, the base-2 numeral system, is the fundamental language of computers. It uses only two symbols, 0 and 1, to represent all possible data. In the context of text encoding, binary is used to represent the ASCII codes. This relationship between ASCII and binary demonstrates how human-readable information is translated into a format that computers can store and process.

For programmers and data scientists, understanding the ASCII-binary relationship is essential. ASCII provides a predictable and standardized way to encode text into binary, which can then be manipulated, stored, or transmitted by computer systems. From simple text editing to complex data processing, the seamless conversion between ASCII and binary underpins many computing operations.

But We Don't Use ASCII Today, Do We?

While ASCII was revolutionary, it had its limitations, notably in its lack of support for non-English characters and symbols. This limitation led to the development of other encoding systems.

Unicode is the most widely used character standard today. It addresses the limitations of ASCII by assigning a unique number, called a code point, to every character, no matter the platform, program, or language. Unicode supports a wide array of characters and symbols from various languages, making it a truly global standard.

UTF-8 is a variable-width character encoding capable of encoding all 1,112,064 valid character code points in Unicode using one to four 8-bit bytes. It's backward compatible with ASCII but can also represent every character in the Unicode character set.
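A short Python sketch shows both properties, variable width and ASCII compatibility. The sample characters are arbitrary picks for illustration, written as escape sequences so the script runs regardless of the source file's encoding.

```python
# A, e-acute, euro sign, and an emoji: one character from each UTF-8 width.
samples = ["A", "\u00e9", "\u20ac", "\U0001F600"]

widths = {}
for ch in samples:
    encoded = ch.encode("utf-8")
    label = f"U+{ord(ch):04X}"
    widths[label] = len(encoded)
    print(label, len(encoded), " ".join(f"{b:02x}" for b in encoded))
# U+0041 1 41
# U+00E9 2 c3 a9
# U+20AC 3 e2 82 ac
# U+1F600 4 f0 9f 98 80

# Backward compatibility: pure ASCII text yields identical bytes either way.
print("ASCII".encode("utf-8") == "ASCII".encode("ascii"))   # True
```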

ISO/IEC 8859 is a series of 8-bit character encodings, each covering a different group of languages. Its first part, ISO/IEC 8859-1 (known as Latin-1), covers Western European languages. It extends the ASCII set to include characters necessary for languages like French, German, and Spanish.
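To see how Latin-1 differs from ASCII and UTF-8 in practice, the sketch below encodes one accented letter three ways. It uses Python's built-in `latin-1` codec; the variable names are illustrative.

```python
ch = "\u00e9"  # e-acute, as used in French and Spanish

latin1 = ch.encode("latin-1")   # one byte: 0xE9
utf8 = ch.encode("utf-8")       # two bytes: 0xC3 0xA9
print(len(latin1), len(utf8))   # 1 2

# Plain ASCII has no code for this character at all.
try:
    ch.encode("ascii")
    ascii_ok = True
except UnicodeEncodeError:
    ascii_ok = False
print(ascii_ok)                 # False

# Reading UTF-8 bytes as Latin-1 yields two wrong characters (U+00C3, U+00A9).
wrong = utf8.decode("latin-1")
print(len(wrong))               # 2
```

That last step is the classic source of garbled accents when two systems disagree about which encoding a file uses.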

In summary, the relationship between ASCII and binary is a key foundational concept in the field of computing, bridging human-readable text with the binary language of computers. ASCII's development marked a significant step in standardizing text encoding, paving the way for more advanced systems like Unicode that cater to a global and multilingual user base. Understanding these encoding systems is essential for anyone working in computer science, programming, and digital communication.
