The Five Senses of Sensors—Part II: Touch and Vision

Contributed By: Electronic Products

2015-04-02

In this, the concluding article of a two-part series updating the “Five Senses of Sensors” articles published in TechZone in 2011 (Sound, Vision, Taste, Smell, and Touch), we look at advances in touch and vision. As in Part I, which examined smell, taste, and hearing, we focus on improvements in sensor technology and the impact of Internet of Things (IoT) applications.

The sense of touch

Of the five types of sensors that mimic human senses, touch sensors are the ones consumers typically use most often throughout the day, particularly when operating smartphones and tablets. Touch sensors are transducers that respond to a human touch, either by sensing pressure directly or by detecting the electrical disturbance the touch causes.

According to IBM, within the next few years users will be able to “touch” retail goods through their mobile devices: shoppers will “feel” the texture and weave of a fabric by brushing a finger over the item’s image on a screen. It takes little imagination to see the medical field adopting this capability for diagnostics.

There are several varieties of touch sensor: capacitive, resistive, infrared, and surface-acoustic-wave (SAW) sensors. Each of the touch-sensor technologies detects touch differently, and some can detect gestures or proximity without direct contact.

Capacitive sensing is based on electrical disturbance. Capacitive touch sensors consist of sheets of glass with a conductive coating on one side and a printed circuit pattern around the outside of the viewing area. The printed circuit pattern creates a charge across the surface, and that charge is disturbed when a user touches the screen with a finger or another conductive object, such as a capacitive stylus.

Capacitive sensors are built on the glass itself. Because they respond to a disturbance in charge rather than to pressure, only a light touch is needed and applied force is insignificant. However, capacitive touch sensors are vulnerable to abrasion of the conductive coating.
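To show how a controller can turn that charge disturbance into a touch decision, the sketch below compares each raw reading against a slowly tracked baseline and uses separate touch and release thresholds (hysteresis) so the output does not chatter. It assumes a self-capacitance channel whose raw count rises when a finger is present. The class name, thresholds, and drift rate are hypothetical illustration values, not taken from any particular controller.

```python
from dataclasses import dataclass


@dataclass
class CapChannel:
    """Touch detector for one capacitive channel (illustrative values only)."""
    baseline: float
    touch_threshold: float = 40.0    # counts above baseline to declare a touch
    release_threshold: float = 25.0  # lower release level provides hysteresis
    drift_rate: float = 0.01         # how quickly the baseline follows slow drift
    touched: bool = False

    def update(self, raw: float) -> bool:
        delta = raw - self.baseline
        if self.touched:
            if delta < self.release_threshold:
                self.touched = False
        elif delta > self.touch_threshold:
            self.touched = True
        # Follow slow temperature/humidity drift only while untouched, so a
        # finger resting on the sensor is not absorbed into the baseline.
        if not self.touched:
            self.baseline += self.drift_rate * delta
        return self.touched


channel = CapChannel(baseline=1000.0)
readings = [1001, 1002, 1060, 1055, 1030, 1010, 1003]
print([channel.update(r) for r in readings])
# [False, False, True, True, True, False, False]
```

The 1030 reading stays "touched" only because of the hysteresis band. Without it, a reading between the two thresholds could flicker on and off.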

A resistive touch sensor, by contrast, consists of two conductive layers that are normally held apart by a thin gap; the sensor responds when pressure pushes the layers into contact. It is built from an outer flexible layer coated on the inside with a conductor, a non-conductive separator of mica or silica, and an inner supporting layer of glass that is also coated with a conductor.
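In the widely used 4-wire arrangement (one common design, not described in the article itself), the controller drives a voltage across one layer, which then acts as a voltage divider. The other layer, pressed into contact, picks up a voltage proportional to the position along that axis. The sketch below maps those readings to pixels. It assumes a 12-bit ADC and a 320 x 240 display, and the calibration counts are hypothetical.

```python
def adc_to_position(adc: int, adc_min: int, adc_max: int, pixels: int) -> int:
    """Map one axis reading from a resistive panel to a pixel coordinate.

    With a voltage across one layer, the contact point taps a fraction of
    that voltage proportional to its distance along the axis.
    """
    span = adc_max - adc_min
    fraction = (adc - adc_min) / span
    fraction = min(max(fraction, 0.0), 1.0)  # clamp readings outside calibration
    return round(fraction * (pixels - 1))


def read_touch(x_adc: int, y_adc: int) -> tuple[int, int]:
    # Hypothetical calibration for a 12-bit ADC and a 320 x 240 display.
    x = adc_to_position(x_adc, adc_min=200, adc_max=3900, pixels=320)
    y = adc_to_position(y_adc, adc_min=300, adc_max=3800, pixels=240)
    return x, y


print(read_touch(200, 3800))  # (0, 239)
```

The calibration limits stand in for the edge readings a real panel produces, which never span the full 0 to 4095 range because of series resistance in the layers and connections.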

The first iPhone, introduced in 2007, used a capacitive rather than a resistive touchscreen, which enabled multi-touch gestures such as tapping and pinching. Further advances have followed since.

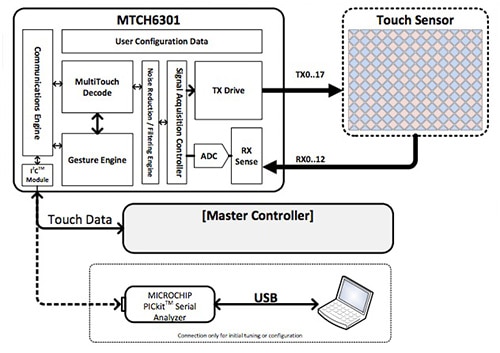

Today, integrating multi-touch and gestures into a rich interface has become easier. Take, for example, the Microchip MTCH6301 capacitive touch controller (Figure 1). The MTCH6301 is a turnkey projected-capacitive controller. Through a combination of self- and mutual-capacitive scanning for both XY screens and touch pads, the MTCH6301 allows designers to quickly and easily integrate projected capacitive touch into applications such as:

- Human-machine interfaces (HMIs) with configurable button, keypad, or scrolling functions

- Single-finger gesture-based interfaces with swipe, scroll, or double-tap controls

- Home automation and security control panels

- Automotive center stack controls

Figure 1: The MTCH6301 block diagram.
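The MTCH6301 decodes gestures on-chip. The sketch below does not reproduce its algorithm or its report format. As a hypothetical illustration of single-finger gesture classification in general, it sorts strokes of (time, x, y) samples into taps, double taps, and swipes. All thresholds are made-up values, and it assumes screen coordinates in which y increases downward.

```python
import math

# Illustrative thresholds; a real design would tune these.
SWIPE_MIN_DIST = 50     # pixels
TAP_MAX_DIST = 10       # pixels
TAP_MAX_TIME = 0.25     # seconds the finger may stay down for a tap
DOUBLE_TAP_GAP = 0.30   # max seconds between the end of one tap and the next


def classify_stroke(samples):
    """samples: list of (t, x, y) from touch-down to lift-off."""
    t0, x0, y0 = samples[0]
    t1, x1, y1 = samples[-1]
    dx, dy = x1 - x0, y1 - y0
    dist = math.hypot(dx, dy)
    if dist >= SWIPE_MIN_DIST:
        if abs(dx) >= abs(dy):
            return "swipe_right" if dx > 0 else "swipe_left"
        return "swipe_down" if dy > 0 else "swipe_up"  # y grows downward
    if dist <= TAP_MAX_DIST and (t1 - t0) <= TAP_MAX_TIME:
        return "tap"
    return "none"


def classify_strokes(strokes):
    """Classify strokes in order, merging two quick taps into a double tap."""
    events = []
    last_tap_end = None
    for stroke in strokes:
        kind = classify_stroke(stroke)
        start, end = stroke[0][0], stroke[-1][0]
        if (kind == "tap" and last_tap_end is not None
                and start - last_tap_end <= DOUBLE_TAP_GAP):
            events[-1] = "double_tap"
            last_tap_end = None
            continue
        events.append(kind)
        last_tap_end = end if kind == "tap" else None
    return events


strokes = [
    [(0.00, 100, 100), (0.08, 102, 101)],                   # tap
    [(0.20, 101, 99), (0.28, 100, 100)],                    # second tap soon after
    [(1.00, 50, 200), (1.10, 150, 205), (1.20, 250, 210)],  # swipe to the right
]
print(classify_strokes(strokes))  # ['double_tap', 'swipe_right']
```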

While the device works out of the box with a default sensor configuration, most applications will require additional configuration and sensor tuning to determine the correct set of parameters for the final design. Microchip provides a PC-based configuration tool for this purpose, available in its mTouch Sensing Solution Design Center.
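Microchip's tool handles tuning for the MTCH6301. The sketch below only illustrates the kind of quantity such tuning generally examines. It uses recorded raw counts with no finger present and with a finger present, computes a signal-to-noise ratio (SNR), and proposes a threshold. The function name, the use of peak-to-peak spread as the noise figure, the midpoint threshold, and the 5:1 target are all assumptions for illustration, not vendor specifications.

```python
from statistics import mean


def tune_channel(idle, touched, min_snr=5.0):
    """Suggest a touch threshold from recorded raw counts.

    idle:    samples captured with no finger present
    touched: samples captured with a finger on the sensor
    Noise is taken as the peak-to-peak spread of the idle samples; the
    5:1 minimum SNR is an illustrative target, not a vendor figure.
    """
    baseline = mean(idle)
    signal = mean(touched) - baseline
    noise = max(idle) - min(idle)
    snr = signal / noise if noise else float("inf")
    return {
        "baseline": baseline,
        "signal": signal,
        "snr": snr,
        "threshold": signal / 2,  # midway between idle and touched levels
        "acceptable": snr >= min_snr,
    }


idle = [1000, 1003, 998, 1001, 999, 1002, 997, 1000]
touched = [1062, 1058, 1060, 1061, 1059]
print(tune_channel(idle, touched))
```

Here the finger raises the count by 60 against a 6-count noise spread, giving an SNR of 10 and a suggested threshold of 30 counts above baseline.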

Most recently, touch has been incorporated into display technology itself. In the past, adding touch meant adding sensor layers to a laminated panel stack-up. Now the touch sensors are integrated into the display, and the touch-controller and display-driver functions are housed in a single IC. As a result, these integrated sensors and controllers can support touch wake-up gestures, pens with tips as fine as 1 mm, concurrent finger and pen operation, proximity and finger hover, and glove and fingernail input. The technology also works in humid or otherwise moist environments, conditions that previously were problematic.

Another type of sensor in this category is the IR proximity sensor. It works by applying a voltage to a pair of IR light-emitting diodes (LEDs), which in turn emit infrared light. This light travels through the air, and when it strikes an object, it is reflected back toward the sensor. The closer the object, the stronger the reflected light.
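Reflectance-based proximity sensors commonly take one reading with the LED off and one with it on, so subtracting the two removes the ambient-light contribution. The sketch below does that subtraction and then makes a rough distance estimate from a single calibration point. The estimate assumes the reflected signal falls off as 1/d², which is a loud simplification: real falloff depends on target size, reflectivity, angle, and cover-glass crosstalk. The function names and calibration numbers are hypothetical.

```python
import math


def proximity_counts(led_on: int, led_off: int) -> int:
    """Reflected-IR signal with the ambient-light contribution removed."""
    return max(led_on - led_off, 0)


def estimate_distance(signal: float, ref_signal: float, ref_distance_mm: float) -> float:
    """Rough distance from one calibration point.

    Assumes signal ~ 1/d**2 for this target (an idealization); returns
    infinity when no reflected signal is detected.
    """
    if signal <= 0:
        return math.inf
    return ref_distance_mm * math.sqrt(ref_signal / signal)


# Hypothetical calibration: the target gave 4000 counts at 20 mm.
signal = proximity_counts(led_on=1300, led_off=300)
print(signal, estimate_distance(signal, ref_signal=4000, ref_distance_mm=20))
# 1000 40.0
```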

For example, the Silicon Labs Si1145/46/47 (Figure 2) is a low-power, reflectance-based infrared-proximity, ultraviolet (UV) index, and ambient-light sensor with an I2C digital interface and a programmable event-interrupt output. This touchless sensor IC features an analog-to-digital converter, integrated high-sensitivity visible and infrared photodiodes, a digital-signal processor, and one, two, or three integrated infrared LED drivers with fifteen selectable drive levels. The Si1145/46/47 performs well over a wide dynamic range and under a variety of light conditions, from direct sunlight to dark glass covers. Using two or more LEDs, the device can support multiple-axis proximity motion detection. Applications include handsets, e-book readers, notebooks and netbooks, portable consumer electronics, audio products, tamper-detection ICs and security panels, industrial automation, and more.
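One way a host can extract motion from two or more LEDs is to compare when each LED's reflected signal peaks. A hand sweeping across the sensor reflects light from the nearer LED first. The sketch below applies that idea to two channels. It assumes one LED to the left of the sensor and one to the right, sampled together, and it is a generic illustration rather than Silicon Labs' own gesture algorithm. The minimum-peak value is hypothetical.

```python
def swipe_direction(left, right, min_peak=100):
    """Infer a horizontal swipe from two LED channels sampled together.

    left/right: reflected-IR counts from LEDs placed left and right of the
    sensor. A hand moving left-to-right reflects the left LED's light first,
    so the left channel peaks earlier.
    """
    if max(left) < min_peak or max(right) < min_peak:
        return None  # nothing close enough to count as a gesture
    t_left = left.index(max(left))
    t_right = right.index(max(right))
    if t_left < t_right:
        return "left_to_right"
    if t_right < t_left:
        return "right_to_left"
    return "approach"  # both peak together: object moved straight in


left = [10, 80, 400, 250, 60, 10]
right = [10, 20, 90, 380, 300, 40]
print(swipe_direction(left, right))  # left_to_right
```

A third LED on a perpendicular axis would extend the same comparison to up/down motion.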

Figure 2: The Si1147 Proximity/IR/Ambient Light Sensor.

To promote fast prototyping and development, Silicon Labs has introduced a Zero Gecko Starter Kit with a sensor expansion board (Figure 3). The kit is fully supported by Simplicity Studio, which provides precompiled demos, application notes, and examples. It includes the Si1147 proximity sensor, an Si7013 humidity and temperature sensor, an EFM32ZG-STK3200 Zero Gecko, and all the IR LEDs and optomechanical components needed to develop gesture and proximity applications.

Figure 3: The Zero Gecko Starter Kit Board.

The sense of vision

Robust yet easy-to-use, self-contained vision sensors can now perform automated inspections that previously required costly and complex vision systems. And sooner than you might think, computers with sufficient artificial intelligence will be trained to identify color distribution, texture patterns, edge information, and motion information in video clips.
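Edge information, one of the features listed above, is classically extracted with a gradient operator. The pure-Python sketch below applies the standard 3 x 3 Sobel kernels to a small grayscale image stored as a list of rows and returns the gradient magnitude at each interior pixel. Production vision code would normally use an optimized library instead. The function name and test image are illustrative only.

```python
def sobel_magnitude(img):
    """Return gradient magnitude for interior pixels of a grayscale image.

    img is a list of equal-length rows of intensities; border pixels are
    left at 0.0 for simplicity.
    """
    gx_k = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # responds to vertical edges
    gy_k = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # responds to horizontal edges
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            for j in range(3):
                for i in range(3):
                    p = img[y + j - 1][x + i - 1]
                    gx += gx_k[j][i] * p
                    gy += gy_k[j][i] * p
            out[y][x] = (gx * gx + gy * gy) ** 0.5
    return out


# A dark region on the left meeting a bright region on the right.
image = [[0, 0, 0, 255, 255, 255] for _ in range(4)]
edges = sobel_magnitude(image)
print(edges[1])  # [0.0, 0.0, 1020.0, 1020.0, 0.0, 0.0]
```

The strong response appears only in the two columns that straddle the dark-to-bright boundary, which is exactly the "edge information" a classifier can build on.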

Consider, for example, an online library of information called Robo Brain¹ that computer-vision researchers can access to give their robots “sight.” According to Kurzweil Accelerating Intelligence,² it is currently downloading and processing about 1 billion images, 120,000 YouTube videos, and 100 million how-to documents and appliance manuals, all being translated and stored in a robot-friendly format.

Robo Brain processes images, picks out objects, and, by connecting images and video with text, learns to recognize objects and how they are used. The library will allow robots to make the best choices based on data-fed 3D knowledge graphs.
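Robo Brain's actual graph schema is not described here. The toy sketch below is only meant to show, in general terms, why a knowledge graph helps a robot: facts stored as (subject, relation, object) triples can be queried in either direction. The class and all of the facts are hypothetical examples of the kind of links such a system might extract from images and manuals.

```python
from collections import defaultdict


class KnowledgeGraph:
    """Toy triple store holding (subject, relation, object) edges."""

    def __init__(self):
        self.edges = defaultdict(set)

    def add(self, subject, relation, obj):
        self.edges[(subject, relation)].add(obj)

    def query(self, subject, relation):
        """Objects linked to a subject by a relation."""
        return sorted(self.edges.get((subject, relation), set()))

    def subjects_with(self, relation, obj):
        """Subjects linked to a given object by a relation."""
        return sorted(s for (s, r), objs in self.edges.items()
                      if r == relation and obj in objs)


kg = KnowledgeGraph()
# Hypothetical facts.
kg.add("mug", "is_a", "container")
kg.add("mug", "used_for", "drinking")
kg.add("mug", "has_part", "handle")
kg.add("kettle", "used_for", "boiling_water")
kg.add("kettle", "has_part", "handle")

print(kg.subjects_with("has_part", "handle"))  # ['kettle', 'mug']
print(kg.query("mug", "used_for"))             # ['drinking']
```

A query such as "which objects have a handle?" can then guide where a robot tries to grasp.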

Robo Brain is not alone in this pursuit. Microsoft’s Project Adam also features a computer-vision program that can tell the difference between pictures of Pembroke and Cardigan Welsh Corgis. Project Adam’s algorithms have seen over 14 million images, split into 22,000 categories, drawn from the ImageNet database.³

3D imaging systems can now also provide vision capabilities. Google’s Project Tango is one example: Project Tango smartphones and tablets will be equipped with cameras and depth sensors that quickly and accurately map environments, including building interiors, so that Google can add this information to Google Maps. Other potential uses include helping blind or visually impaired people “see” by mapping an area and providing audio cues about its layout. Advances resulting from Project Tango are also expected to assist in the development of Google’s self-driving cars by giving them better awareness of their surroundings.
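To map an environment, a depth camera must convert each pixel and its measured depth into a 3D point. The standard pinhole-camera relations are X = (u − cx)·Z/fx and Y = (v − cy)·Z/fy. The sketch below applies them to a depth image. The intrinsic parameters are hypothetical placeholders, not Project Tango's calibration values.

```python
from typing import NamedTuple


class Intrinsics(NamedTuple):
    fx: float  # focal length in pixels, x
    fy: float  # focal length in pixels, y
    cx: float  # principal point, x
    cy: float  # principal point, y


def backproject(u, v, depth_m, k: Intrinsics):
    """Convert pixel (u, v) with depth Z into camera-frame (X, Y, Z) in meters."""
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


def depth_to_points(depth, k: Intrinsics):
    """Build a point cloud from a depth image (rows of meters; 0 = no reading)."""
    return [backproject(u, v, z, k)
            for v, row in enumerate(depth)
            for u, z in enumerate(row) if z > 0]


k = Intrinsics(fx=500.0, fy=500.0, cx=320.0, cy=240.0)  # hypothetical values
print(backproject(420, 240, 2.0, k))  # (0.4, 0.0, 2.0)
```

A mapping system would then transform these camera-frame points by the device's tracked pose to merge successive frames into one model of the room.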

Replacing proximity sensors with machine vision can improve productivity. A proximity sensor reports only whether a part is present, while machine vision provides dramatically more information. However, if machine vision is to become “mainstream,” more affordable image sensors and accompanying lenses will be needed to sustain these improvements.
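To make the contrast concrete, the sketch below thresholds a tiny grayscale frame and measures the bright part. The "present" flag is all a proximity sensor would report. Area, centroid, and bounding box are the extra information that supports checks such as position or size tolerance. The threshold and frame are hypothetical.

```python
def inspect(image, threshold=128):
    """Measure the bright part in a grayscale image (rows of 0-255 values)."""
    pixels = [(x, y) for y, row in enumerate(image)
              for x, value in enumerate(row) if value >= threshold]
    if not pixels:
        return {"present": False}
    xs = [x for x, _ in pixels]
    ys = [y for _, y in pixels]
    return {
        "present": True,                                 # proximity-sensor equivalent
        "area_px": len(pixels),                          # size check
        "centroid": (sum(xs) / len(xs), sum(ys) / len(ys)),  # position check
        "bbox": (min(xs), min(ys), max(xs), max(ys)),    # extent check
    }


frame = [
    [0,   0,   0, 0, 0],
    [0, 200, 210, 0, 0],
    [0, 220, 230, 0, 0],
    [0,   0,   0, 0, 0],
]
print(inspect(frame))
```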

For example, the NOIV2SN1300A VITA 1300, a 1.3-megapixel, 150 fps global-shutter CMOS image sensor from ON Semiconductor, targets applications such as machine vision and motion monitoring. The VITA 1300 is a ½-inch Super-eXtended Graphics Array (SXGA) CMOS image sensor with a 1280 x 1024 pixel array. Its high-sensitivity 4.8 µm x 4.8 µm pixels support pipelined and triggered global-shutter readout modes and can also be operated in a low-noise rolling-shutter mode. The sensor has on-chip programmable-gain amplifiers and 10-bit A/D converters, and its integration time and gain parameters can be reconfigured without any visible image artifact.
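Pixel count and pixel pitch together fix the size of the active array. This quick arithmetic check shows that a 1280 x 1024 array of 4.8 µm pixels is about 6.1 mm x 4.9 mm, with a diagonal of roughly 7.9 mm. That is consistent with the ½-inch optical format, whose nominal image diagonal is about 8 mm.

```python
import math

cols, rows = 1280, 1024
pitch_um = 4.8  # pixel pitch in micrometers

width_mm = cols * pitch_um / 1000
height_mm = rows * pitch_um / 1000
diagonal_mm = math.hypot(width_mm, height_mm)

print(f"{width_mm:.3f} x {height_mm:.3f} mm, diagonal {diagonal_mm:.2f} mm")
# 6.144 x 4.915 mm, diagonal 7.87 mm
```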

A high level of programmability enables the user to read out specific regions of interest; up to eight regions can be programmed for even higher frame rates. The image data interface of the V1-SN/SE part consists of four LVDS lanes, each running at 620 Mbps, supporting frame rates of up to 150 frames per second.

In addition to individual devices, there are opportunities for designers to shorten their learning curve when implementing vision. For example, the 80-000492 MityDSP Vision Development Kit (VDK) from Critical Link is a complete hardware and software framework designed to accelerate the development of vision applications. The framework offers both a Xilinx Spartan-6 FPGA and a TI OMAP-L138 dual-core processor, and is based on Critical Link’s production-ready MityDSP-L138F System-on-Module (SoM).
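The VITA 1300 interface figures can be sanity-checked with simple arithmetic. At full resolution, 10 bits per pixel, and 150 fps, the raw pixel data comes to about 1.97 Gbps, which fits within four lanes at 620 Mbps (2.48 Gbps). The headroom covers synchronization and other link overhead. The ROI helper is an idealized, hypothetical estimate that assumes readout time scales only with the number of rows read. Real sensors add fixed per-frame and per-row overhead, so actual ROI frame rates should be taken from the datasheet.

```python
cols, rows, bits_per_pixel, fps = 1280, 1024, 10, 150
lanes, lane_mbps = 4, 620

pixel_data_mbps = cols * rows * bits_per_pixel * fps / 1e6
link_mbps = lanes * lane_mbps
print(f"pixel data {pixel_data_mbps:.0f} Mbps vs link {link_mbps} Mbps "
      f"({pixel_data_mbps / link_mbps:.0%} utilized)")
# pixel data 1966 Mbps vs link 2480 Mbps (79% utilized)


def roi_fps_estimate(roi_rows, full_rows=rows, full_fps=fps):
    """Idealized frame rate when reading fewer rows (upper bound, not a spec).

    Assumes readout time is proportional to rows read; fixed overheads in a
    real sensor make the achievable rate lower.
    """
    return full_fps * full_rows / roi_rows


print(roi_fps_estimate(256))  # 600.0
```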

Today’s high-speed networking is helping remote access to machine-vision solutions take hold rapidly. Images displayed on HMIs allow machine operators to view what a remote camera is “seeing,” giving them greater visibility into processes in multiple locations at once and improving both safety and productivity.

For more information on the parts discussed in this article, use the links provided to access product information pages on the DigiKey website.

References

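Disclaimer: The opinions, beliefs, and viewpoints expressed by the various authors and/or forum participants on this website do not necessarily reflect the opinions, beliefs, and viewpoints of DigiKey or official policies of DigiKey.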